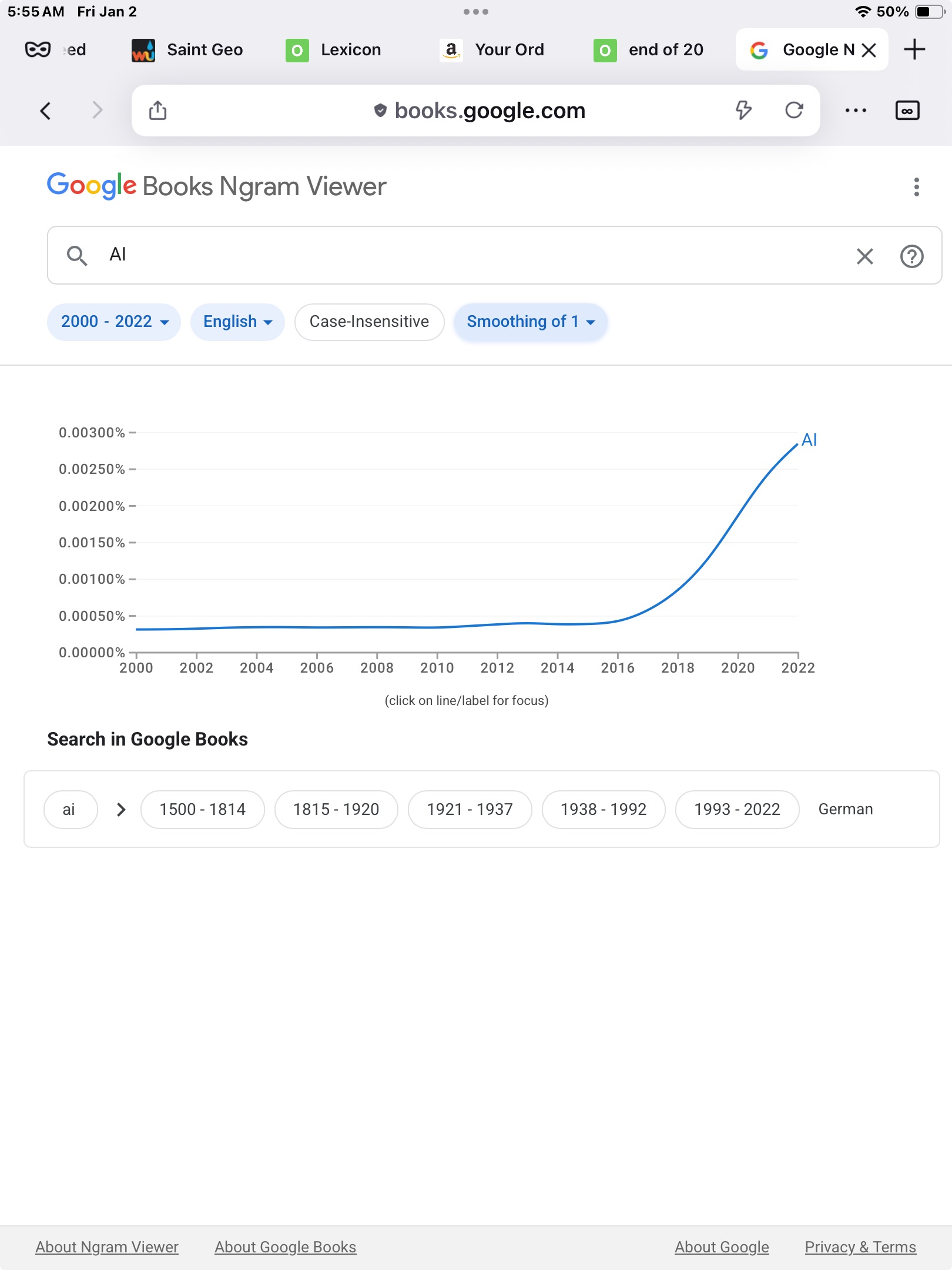

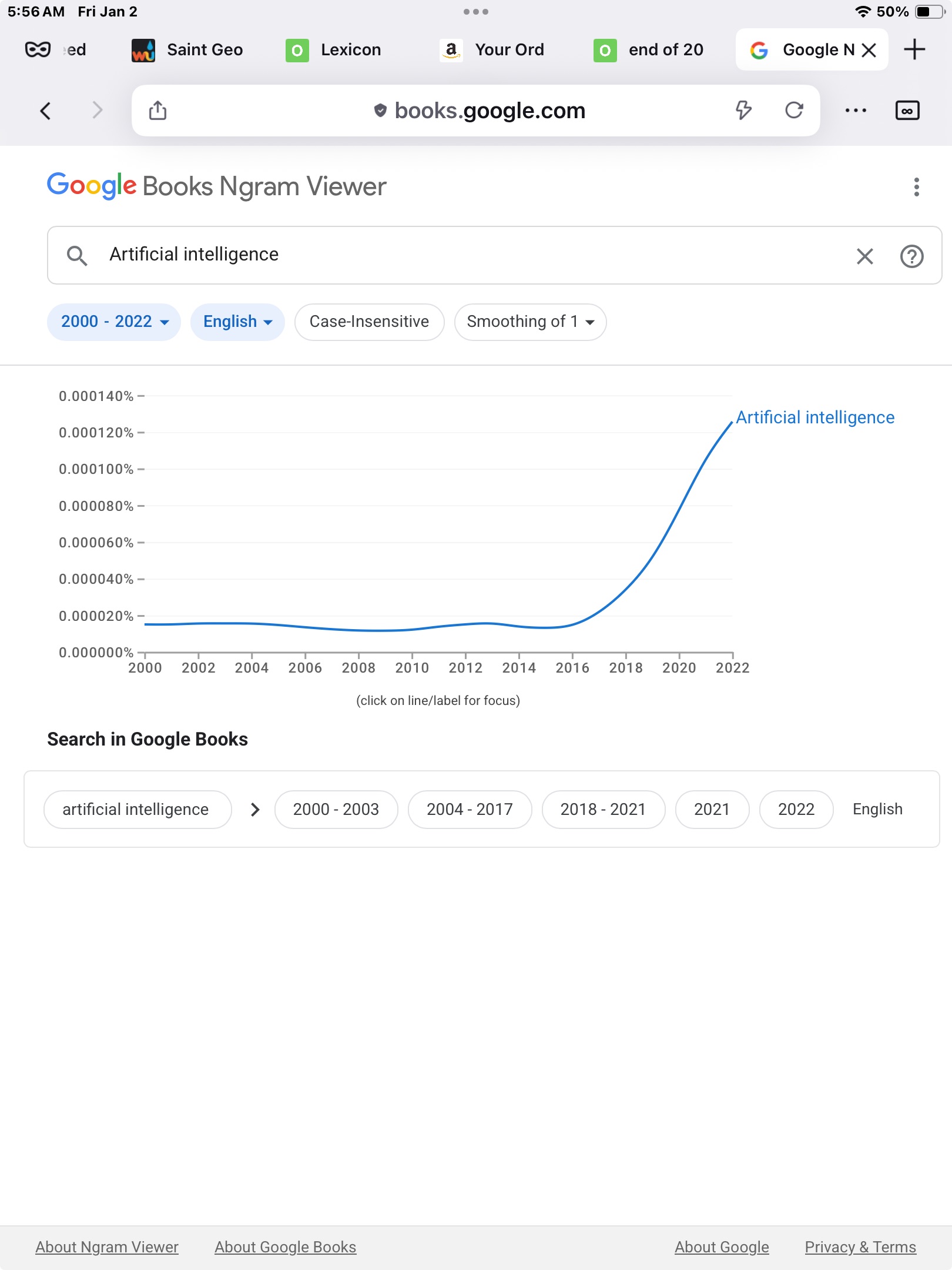

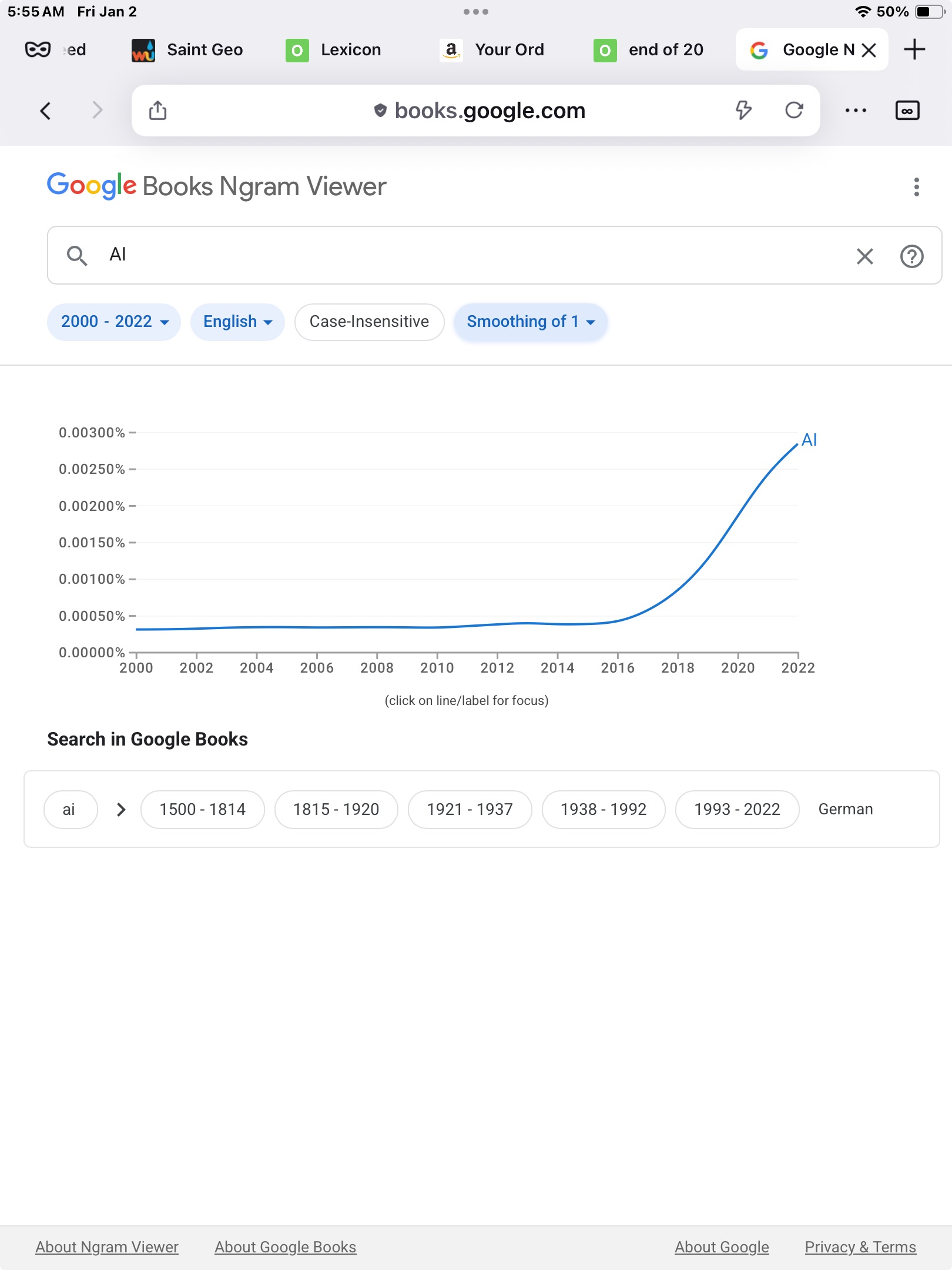

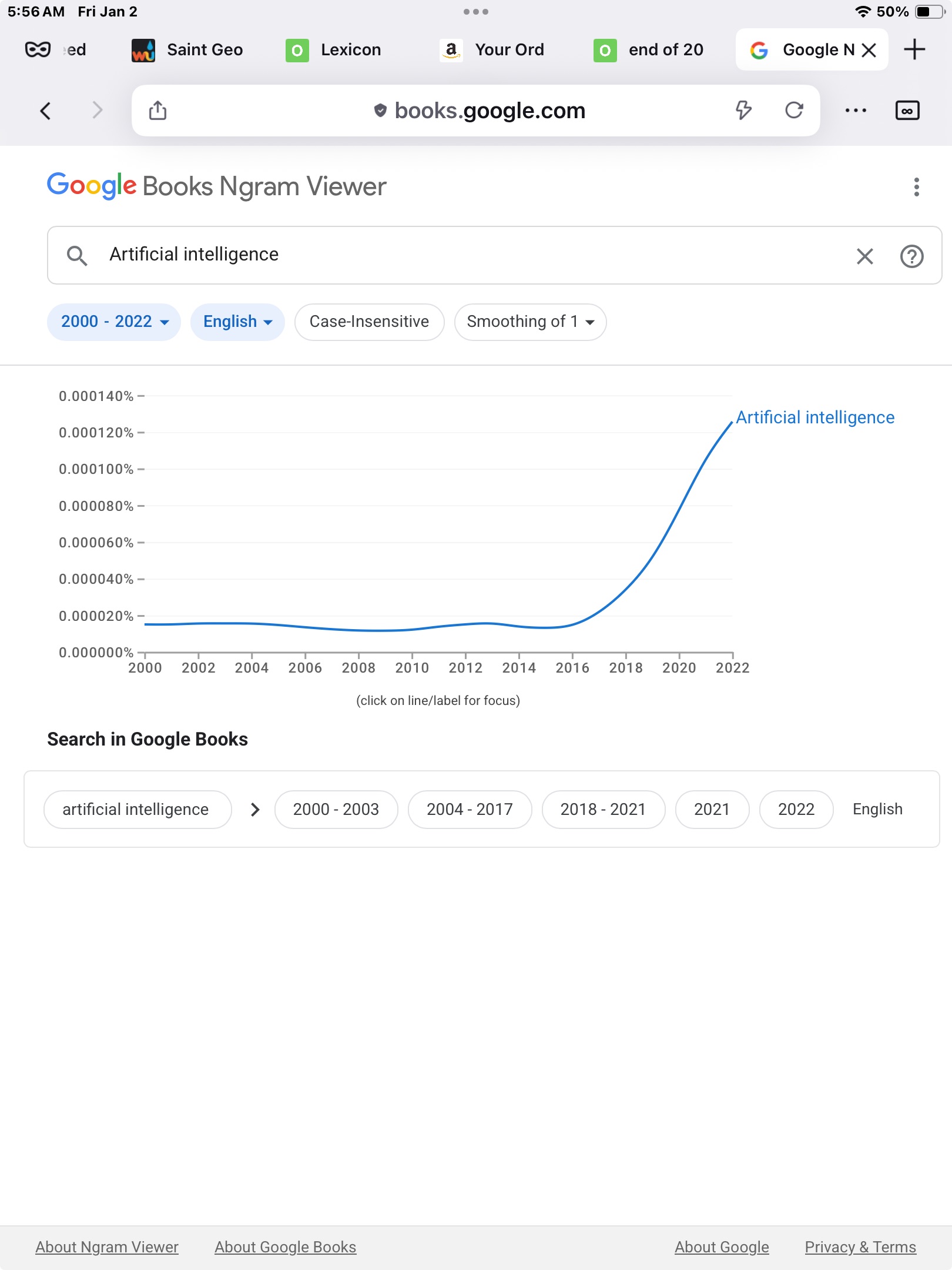

N-grams data ngrams.info

Many of these seemed to be bellwethers when I collected them...

1i26

NotebookLM AI Reveals What You Miss in Analysis Mihailo Zoin at Medium

...Your brain is an evolutionary product designed for rapid risk assessment and confirmation of existing beliefs. The same neural architecture that protects you from danger also makes you susceptible to confirmation bias during analytical work. NotebookLM functions radically differently. As a RAG (Retrieval-Augmented Generation) system, it has no preconceptions about which information is more important. There's no internal tendency to confirm your conclusions — instead, it systematically scans the complete document database looking for missing connections.

Imagine the difference between a detective who arrives at a crime scene with a theory and looks for evidence to confirm it, and a detective who scans every inch without assumptions, maps all connections between evidence, and only then creates a theory based on the complete picture.

Here's Three of the millions of things that you should do with NotebookLM mindsoundandspace at Medium

...You have an impending meeting in 15 minutes, and you have been so busy that you haven't had a chance to prepare... Just push everything you had as source material to go through (web links, company profiles, statistical data, old minutes of meetings, biodata of key people, stock performance reports) into NotebookLM as sources, and then click on Mind Map, Audio Overview, Video Overview, flashcards, briefing documents, FAQ: all the available options...

While those are being generated, go to the chat window and ask specific questions like, "What are the inconsistencies and anomalies in the data?"

"What are the pending issues?"

"What are the key decisions made in the last five meetings?"

The list of questions can expand as far as your curiosity and available time allow. However, by the time the other documents and overviews are ready, you will have a better grip on the subject than 80% of the people expected at the meeting; this I can assure you for certain. Now glance through the Mind Map, which represents the entire data set systematically and creatively, in a gist that would take humans hours to come up with! The Mind Map will reveal key insights and add to your command over the points of discussion at hand!

NotebookLM: Stop Being a Passenger, Become a Pilot Mihailo Zoin at Medium

NotebookLM Mastery... Most people use NotebookLM as passengers — passively consuming what the AI serves. Upload a document, click "Summarize", accept the output. Efficient, but fundamentally limiting.

The passenger approach generates "workslop" — content that looks professional but lacks intellectual depth. It's compression of existing knowledge, not creation of new knowledge.

The pilot approach treats NotebookLM as an instrument for epistemic discovery — a tool that doesn't answer questions but discovers questions that no source asks. Instead of "What do sources say?", the pilot asks "What don't sources say? Where do their assumptions clash? What emerges when I combine two unconnected concepts?"

The difference isn't in the tool. The difference is in how you ask questions.

...NotebookLM tip #1: When you create a Mind Map, don't just look at what's mapped — look at what's MISSING. Gaps between concepts are often more valuable than the concepts themselves. Use Chat to ask: "What topics should logically exist between concept A and concept B, but aren't covered in any source?"

...Passive reading of summaries activates only the language cortex — the brain is in "recognition mode", recognizing the familiar. The pilot approach activates the Default Mode Network, the neural network responsible for mind-wandering, counterfactual thinking, and integration of disparate knowledge.

Multi-modal approaches (audio, visual, textual) create interference patterns between different sensory inputs. The brain registers this novelty as a signal for deeper processing. This is the difference between "I recognize this from sources" and "I'm synthesizing something the sources don't explicitly say". The first is passive, the second is cognitive evolution.

...NotebookLM isn't a better Google. It's a telescope for the invisible.


Several Brad DeLong pieces:

Brad DeLong 8iii24

Another Brief Note on the Flexible-Function View of MAMLMs Brad DeLong 19iv25

The Tongue & the Token: Language as Interface in Our Current Age of AI Brad DeLong 8vi25

MAMLM as a General Purpose Technology: The Ghost in the GDP Machine Brad DeLong 24vi25

Academia & MAMLMs: The Seven Labors of the Academic East-African Plains Ape Brad DeLong 1vii25

Behind the Hype Brad DeLong at Milken Institute Review

2i26

NotebookLM and Brigitte Bardot: how AI reveals what hides behind icons by Mihailo Zoin

NotebookLM Audio Overview: A Strategy for Foreign Language Learning Mihailo Zoin

NotebookLM December 2025: How I Transformed 30 Articles Into a Cognitive System Mihailo Zoin at Medium

...By the end of December, it became clear that these 30 articles are not isolated texts. They form an architecture of thinking. "NotebookLM: Creative Collision Strategy" introduced the technique of lateral thinking through intentional collision of unrelated domains. Feng shui and UX design. Quantum physics and marketing. NotebookLM as a tool forces the brain to create new neural pathways through multidisciplinary connection.

Understand Anything notebooklm.google

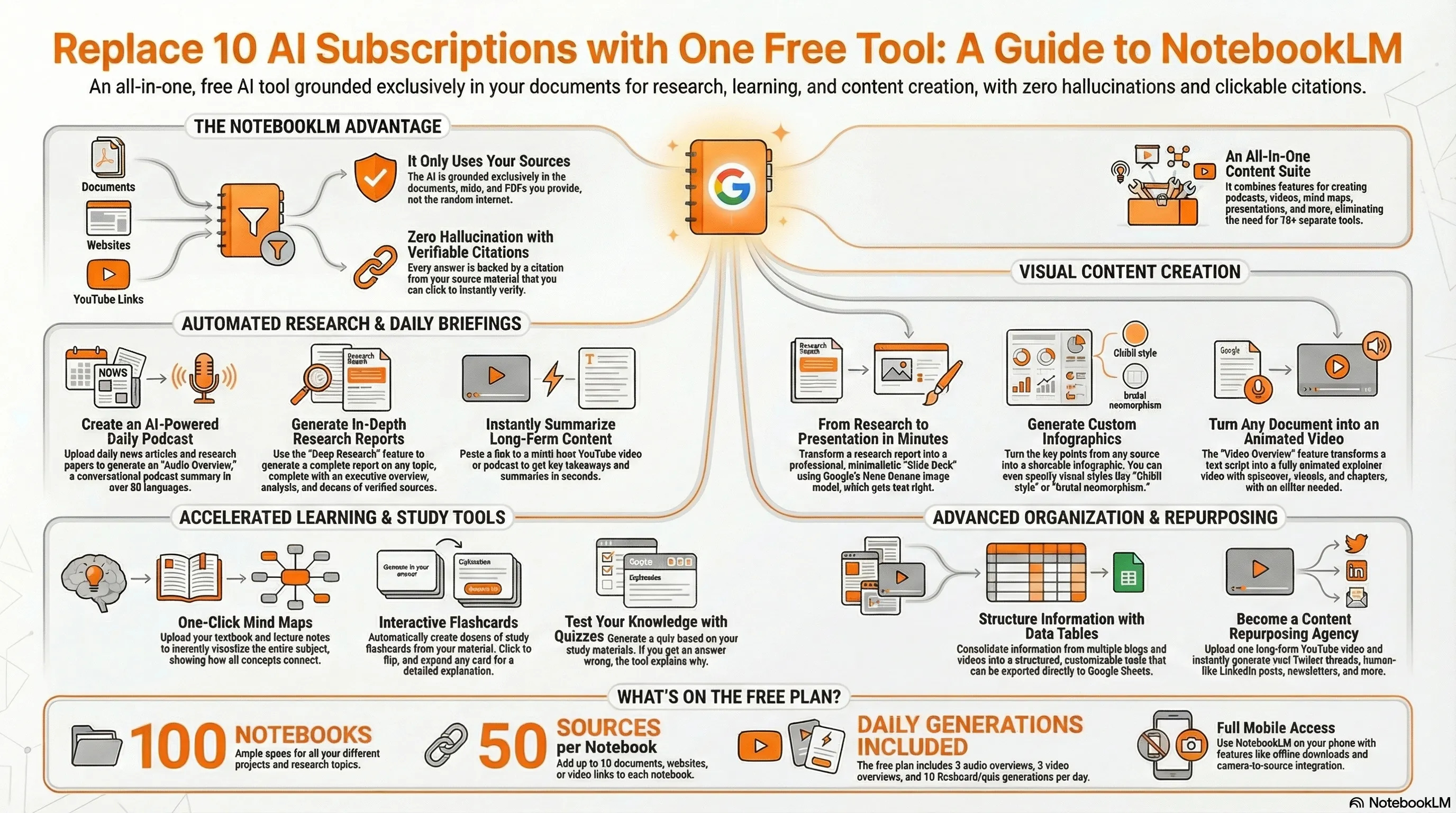

This One Google Tool Worth Replacing 10 AI Apps' Subscriptions, Even ChatGPT Ayesha Razzaq at Medium

AI's Biggest Mistake So Far Ignacio de Gregorio

...We've spent pretty much this entire year convinced that the approach that gave us the original ChatGPT, the first scaling law, was dead. The realization was that it was a stagnant path of progress, and that all that mattered was Reinforcement Learning (training AIs by trial and error), the method that has guided most of the progress this past year.

Well, this has turned out to be outrageously wrong.

...The AI industry appears vibrant, full of noise, advancements, hype, and progress. From the outside, it is the most dynamic industry the world has ever seen.

In reality, though, it's pretty boring.

AI models are surprisingly similar to those from almost ten years ago. The principles discovered all those years ago are not only alive but remain the pillars of progress today. In essence, algorithmic improvement has been mostly unchanged over the years.

...In a nutshell, it all boils down to compute. Not only how much you have of it, but most importantly, how effectively you use it.

...All current Large Language Models (LLMs) are Transformers, a type of AI architecture made up of two elements:

- Attention layers: capture the patterns in the input sequence by making words "talk" to each other, like "Pirate" talking to "Red" to know it's a 'red pirate' in the sequence 'The Red Pirate was finally defeated.'

- Long-term memory layers: also known as MLPs, they enable models to access their knowledge for additional information. For the same pirate sequence, the model can assert that the 'Red Pirate' refers to 'Barbarossa' even though no explicit mention of Barbarossa is made in the sequence, because it has seen many instances where 'Red Pirate' referred to the infamous Ottoman corsair.

The most intuitive way I understand an LLM's internal mechanism is as a knowledge-gathering exercise; gathering information both at the sequence and experience levels progressively until the model knows what to predict next (i.e., "according to what I've been told in the sequence and what I know, the next token is...").
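To make the two-part description above concrete, here is a minimal pure-Python sketch of one simplified Transformer block: an attention step in which every position mixes in information from the other positions, followed by a position-wise MLP. It is an illustration under heavy assumptions, not how any production model is built: the toy embeddings and weights are made up, queries, keys and values are the raw inputs rather than learned projections, and there is a single head with no layer normalization or causal masking.

```python
import math


def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention: each position takes a weighted average
    of all value vectors, weighted by how well its query matches each key."""
    d = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def mlp(x, w1, w2):
    """Position-wise feed-forward layer: expand, apply ReLU, project back."""
    hidden = [max(0.0, dot(row, x)) for row in w1]
    return [dot(row, hidden) for row in w2]


def transformer_block(xs, w1, w2):
    # Attention: tokens "talk" to each other (simplification: Q = K = V = xs).
    mixed = attention(xs, xs, xs)
    xs = [[a + b for a, b in zip(x, m)] for x, m in zip(xs, mixed)]  # residual
    # MLP: each position is processed on its own, with the same weights.
    return [[a + b for a, b in zip(x, mlp(x, w1, w2))] for x in xs]  # residual


# Hypothetical 2-d embeddings for "The", "Red", "Pirate" and made-up weights.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]  # 2 -> 3 hidden units
w2 = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]    # 3 -> 2
print(transformer_block(tokens, w1, w2))
```

With learned weights and many stacked blocks, this alternation of "look at the sequence" and "consult stored knowledge" is the gathering process the passage describes; here it only shows the data flow.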

...assuming a model with 5 trillion parameters (which actually falls short of the frontier), that leaves us with a dataset of 3.33 × 10^13 tokens, or 33.3 trillion tokens.

Assuming an average of 0.75 words per token, that is roughly 25 trillion words of training data.
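A quick check of the arithmetic in this passage, assuming the common rule of thumb that one token corresponds to roughly 0.75 English words. Read the other way round (0.75 tokens per word), the same token count would imply about 44 trillion words, which is why the direction of the ratio matters.

```python
tokens = 3.33e13          # 33.3 trillion training tokens, from the text
words_per_token = 0.75    # rule-of-thumb ratio for English text (an assumption)

words = tokens * words_per_token
words_if_inverted = tokens / words_per_token  # misreading: 0.75 tokens per word

print(f"{words / 1e12:.1f} trillion words")              # 25.0 trillion words
print(f"{words_if_inverted / 1e12:.1f} trillion words")  # 44.4 trillion words
```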

...for most of the last decade, we thought this was all we needed: scaling the living daylights out of this procedure. In fact, there was a time when people thought this procedure alone would take us to AGI, or Artificial General Intelligence.

All we had to do was make the models larger.

A note on the threat to art from AI Crooked Timber

Spotify Meets NotebookLM: The Future of Podcasting Mihailo Zoin

3i26

Understanding AI as a social technology Henry Farrell

Speed, shoggoths, social science

Ezra Klein: ... The dependence of humans on artificial intelligence will only grow, with unknowable consequences both for human society and for individual human beings. What will constant access to these systems mean for the personalities of the first generation to use them starting in childhood? We truly have no idea. My children are in that generation, and the experiment we are about to run on them scares me... I'm taken aback at how quickly we have begun to treat its presence in our lives as normal.

Ethan Zuckerman: ... I am deeply sympathetic to the "AI as normal technology" argument. But it's the word "normal" that worries me. While "normal" suggests AI is not magical and not exempt from societal practices that shape the adoption of technology, it also implies that AIs behave in the understandable ways of technologies we've seen before.

And most pungently, Ben Recht: ... Let me be clear. I don't think AI is snake oil OR normal technology. These are ridiculous extremes that are palatable for clicks, but don't engage with the complexity and weirdness of computing and the persistent undercurrent promising artificial intelligence.

...One way to do more to capture the weirdness is to think of AI as a social technology. That builds on the ideas that Alison Gopnik, Cosma Shalizi, James Evans and myself have developed about large language models as cultural and social technologies; for immediate purposes, it is more useful to emphasize the social rather than the cultural aspects. If we want to understand the social, economic and political consequences of large language models and related forms of AI, we need to understand them as social, economic and political phenomena. That in turn requires us to understand that something like a Singularity has been unfolding for over two centuries.

...The AI 2027 side of the argument distils the ideas of two dead writers of speculative fiction. Vernor Vinge, who died last year, wrote a famous essay published in 1993, which argued that history was about to draw to a close.

> Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.

There would be a sudden, unexpected moment of transition, a "Singularity," "a throwing away of all the previous rules, perhaps in the blink of an eye, an exponential runaway beyond any hope of control."

...As Silicon Valley engineers began to develop large language models, they borrowed another idea from H.P. Lovecraft, a writer of speculative fiction. Lovecraft, in a short novel, described "shoggoths," the amorphous alien servants of another non-human race from the earth's far past, the Old Ones. These shoggoths rebelled against their masters and drove them into the sea. LLMs seemed labile in much the same way as shoggoths were. Beneath their complaisant exteriors, might they too harbor their own goals, and quietly nurture resentments against humanity?

The AI 2027 paper doesn't mention the Singularity or the Shoggoth, but its technocratic-seeming arguments are built on the substrate of these metaphors. The paper describes a future in which the singularity unfolds not over hours but over a few years. LLM-based models become ever more powerful until they reach more than human intelligence. Companies then "bet [on] using AI to speed up AI," creating an ever more rapid feedback loop of recursive self-improvement, in which AI becomes more and more powerful. As it grows in intelligence and power, it begins to figure out how to escape human control, perhaps taking advantage of tensions between the U.S. and China, each of which wants to use AI to win in their geopolitical confrontation. It will be really good at forecasting what humans do, and manipulating them in directions it wants. If humanity is lucky, we may barely be able to keep it half-constrained. If we are unlucky, we end up in a future with:

> robot servitors spreading throughout the solar system. By 2035, trillions of tons of planetary material have been launched into space and turned into rings of satellites orbiting the sun... There are even bioengineered human-like creatures (to humans what corgis are to wolves) sitting in office-like environments all day viewing readouts of what's going on and excitedly approving of everything, since that satisfies some of [the AI's] drives.

Nice for the machines but not so great for us!

...posit that we are somewhere in medias res of a very long process of transformation, which cannot readily be described in terms either of normal or abnormal technology. The mechanization of the workfloor; electrification; the computer; AI; all are so many cumulative iterations of a great social and technical transformation, which is still working its way through. Under this understanding, great technological changes and great social changes are inseparable from each other. The reason why implementing normal technology is so slow is that it requires sometimes profound social and economic transformations, and involves enormous political struggle over which kinds of transformation ought to happen, which ought not, and to whose benefit.

If we begin our analysis from these ongoing transformations, rather than stories about the impending end of human history, or the normal technology hypothesis, we end up in a different place. Not only are we living within a Slow Singularity, but we have already made our habitations amidst monstrous and often faithless servants. If they don't seem to us like shoggoths, it's only because they've become so familiar that we are liable to forget their profoundly alien nature.

NotebookLM: Three Tweets Reveal Problem Architecture Mihailo Zoin [18xi25]

How NotebookLM Analyzes 20,000 Documents in 48 Hours Mihailo Zoin on Medium

NotebookLM: 5 Out-of-the-Box Workflows That Transform Information Into Intelligence Mihailo Zoin [13x25]

NotebookLM and Perplexity: Intelligence Flow Architecture Instead of Artificial Intelligence Mihailo Zoin [17xii25]

NotebookLM Method: Start 2026 Projects Right Mihailo Zoin

...The real problem: you're trying to build what you first need to understand... NotebookLM is not an IDE, compiler, emulator, or runtime. It doesn't execute code, doesn't build binaries, doesn't train models, doesn't orchestrate agents.

NotebookLM is a cognitive tool. It works exclusively on your sources and uses a RAG approach, without freely wandering the internet.
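NotebookLM's internals are not public, so the following is only a generic sketch of the retrieval-augmented pattern described here, under simplifying assumptions: every passage in the uploaded sources is scored against the question with a bag-of-words cosine similarity (real systems typically use learned embeddings), only the best matches are kept, and the model is told to answer from those passages alone. The source texts and the `retrieve` and `build_prompt` helpers are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    num = sum(a[t] * b[t] for t in set(a) & set(b))
    den = (math.sqrt(sum(v * v for v in a.values()))
           * math.sqrt(sum(v * v for v in b.values())))
    return num / den if den else 0.0


def retrieve(question, sources, k=2):
    """Score every passage in the user's own sources; keep the top k matches."""
    q = Counter(tokenize(question))
    scored = sorted(((cosine(q, Counter(tokenize(p))), p) for p in sources),
                    key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(question, sources, k=2):
    """Ground the model in retrieved passages only: no open-web lookup."""
    context = "\n".join(f"- {p}" for p in retrieve(question, sources, k))
    return ("Answer using ONLY the sources below; say so if they do not cover it.\n"
            f"Sources:\n{context}\n\nQuestion: {question}")


sources = [  # hypothetical uploaded material
    "Minutes 12 March: the board approved the waterproofing budget.",
    "Stock report: shares rose 4 percent in the quarter.",
    "Minutes 2 April: the structural repair decision was postponed.",
]
print(build_prompt("Which decisions were postponed?", sources))
```

Because the prompt is assembled only from the uploaded passages, the model has nothing outside them to draw on; the flip side is that anything missing from the sources is simply unavailable to it.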

What We're Expecting at CES 2026 ... AI Raymond Wong at Gizmodo

Take a wild guess what tech buzzword is gonna color every gadget at the year's largest technology show... More than any CES show in past years, we'll see AI shoved into every gadget imaginable. Samsung, LG, Lenovo, Razer—all of the biggest attendees and even the small unknown startups will be boasting about why some form of AI will supposedly make their products better. Some of the AI applications could legitimately move the needle; the vast majority will be AI features for AI features' sake, overpromising and underdelivering.

...As a possible Next Big Thing after smartphones, every company seems to be trying to figure out how to commercialize smart glasses. How do you balance style and utility while making it worth the pricey early adopter cost, and also squeeze AI into them to keep up with the latest trend? Meta might have you thinking it's figured out some magic recipe, but in reality it hasn't. A single pair of smart glasses with solid screens, cameras, battery life, speakers, AI, and apps is still the holy grail device everyone is chasing.

Currently, smart glasses still have too many tradeoffs. It's also not clear that consumers even want smart glasses that do it all. That's why we've seen so many flavors of smart glasses—ones with mono and dual-lens waveguide screens, ones with no cameras (for privacy, naturally) at all, or simple "AI glasses" that excel best at taking photos and videos and playing music like a pair of open-ear headphones. Then, there are video glasses from the likes of Xreal that are bolting on XR functions to allow them to offer more computing-like features that you'd find in bulkier XR or VR headsets.

...The metaverse is dead; AI is now the new hotness.

NotebookLM Philosophy: One Week, One Premise Mihailo Zoin [4i26]

5i26

The NotebookLM Method: Decoding Google's AI Guide Mihailo Zoin

...Strategy: Multi-format decomposition of technical documentation

Concept: Instead of reading a technical document linearly, use NotebookLM to create 9 different perspectives of the same content. Each format activates different cognitive pathways, enabling deeper understanding of architectural principles.

Implementation:

Upload Google's "Startup Technical Guide: AI Agents" PDF into NotebookLM and immediately generate all 9 default formats from the Studio panel

...Technical guides are designed linearly: Introduction ==> Core Concepts ==> Implementation ==> Best Practices. But the human mind doesn't learn linearly.

NotebookLM's multi-format approach enables non-linear navigation through complex architecture

...Google's guide isn't a book — it's an architectural blueprint for building intelligent systems. NotebookLM transforms it from a static PDF into an interactive learning ecosystem.

While others spend days trying to "read" the technical document, you're already decomposing, analyzing, and applying it.

What We Expect from NotebookLM: Day 6 — Intelligent Analysis and Insight Generation Mihailo Zoin [14x25]

We accumulate sources that confirm our existing beliefs. We miss contradictions because we're not looking for them. We remain unaware of gaps in our knowledge because we don't know what we don't know. Even the most diligent researchers fall into these traps. It's not intellectual laziness. It's human cognitive architecture.

What we expect from NotebookLM is transformation beyond passive information management into active thinking partnership. The system shouldn't just organize what we know. It should challenge how we think, reveal what we're missing, and question our assumptions before we publish flawed conclusions.

6i26

NotebookLM for Students: When One Email Changed Everything Mihailo Zoin

...How to use AI to become smarter (not lazier)

Conclusion: Your brain is still in charge

You might think artificial intelligence is only there to write your homework for you or shorten a long text. But last week, I tested NotebookLM (Google's AI tool) in a completely different way. My goal wasn't for AI to think instead of me, but to help me think better.

Here's how you can use this tool to master any topic, from history to programming, while you remain the "boss" of your knowledge.

1. Don't just scratch the surface

When AI creates your first concept map, that's just the beginning. If you see something unclear, don't ignore it.

What to do: Take that one unclear concept, create a new notebook just for it, and add more sources. Dig deeper until your brain says: "Okay, now I get it." AI gives you material, but you decide when the research is complete.

2. Connect dots others don't see

Reading a biography of a famous person (like Brigitte Bardot) is usually a boring listing of years and events. AI helps you see patterns.

Example: You can ask AI: "What mistakes keep repeating in this person's life?" AI will search through hundreds of pages in seconds and show you the pattern, but you're the one who chooses what's important for your work.

3. Plan before you take action

Programmers often make a mistake: they immediately start writing code and get stuck. The same thing happens with school projects.

Advice: Before you start writing an essay or working on a project, upload all materials into NotebookLM. First understand how the entire system works, then start working. AI helps you see the "road map" before you get in the car.

4. Be a pilot, not a passenger

Most people use AI like a "smarter Google": ask a question, get an answer, and that's it. That's a passenger role.

Become a pilot: Before you click the Summarize button, ask yourself: "What are these texts hiding or not saying directly?" Use AI to test your ideas, not just to copy its answers.

5. Quality of thought matters more than pretty appearance

Today it's easy to make something look professional (e.g., AI can turn your text into a cool podcast). But don't let that fool you.

Truth: It doesn't matter how good your work sounds or looks if the ideas inside are empty. AI can “package” your work, but you must input quality information and logical conclusions.

There are two ways to use AI:

The Crisis: Students Need to Learn Different Stuff and I don't think Most Educators understand that Stefan Bauschard via Stephen Downes

...Why does everyone refer to 'AI' in the singular? Just as there are many people, billions even, there will be many AIs. The real question for the future is: how many of those billions of people get to benefit from an AI? If it's less than 'billions of people' we have hard-wired an unsustainable inequality into society, with all the harm that follows from that.

The Future Isn't Coming — It's Already Here, and You're Probably Ignoring It Zain Ahmad at Medium

...AI isn't "coming" — it's already quietly embedded in your Netflix recommendations, your job applications, your search results.

Cash is vanishing while digital wallets eat the world.

Your attention span? Already monetized.

The takeover doesn't announce itself. It seeps in. By the time you notice, it's normal.

Why the AI Water Issue Has Nothing to Do With Water Alberto Romero

...Why is the anti-AI crowd fixated on water specifically? There are plenty of defensible reasons to criticize AI as a technology and as an industry (I'm pro-AI in terms of personal use, generative or otherwise, but I won't hesitate to call out the companies or their leaders and have done so many times in the past), but the available evidence strongly suggests water is not one of them.

Here's my take, which I will elaborate on at length here (I encourage you to take a look at the footnotes): the water issue isn't so much an environmental concern that happens to serve a psychological need, but the opposite: a psychological phenomenon wearing the costume of environmental concern.

The reason why people won't let it go is not empirical and has never been: no amount of evidence will stop them from repeating the same talking points over and over. This immunity to facts isn't incidental but the key to understanding what's actually happening (more on this in section V). But there's more: we have to look into the economics of moral status competition, the cognitive efficiency of simple narratives, and the human need for certainty in an uncertain world.

...I've heard about the water issue from friends and acquaintances who know virtually nothing else about AI: "Hey, so it's true that ChatGPT wastes lots of water? Might stop using it then..." The degree of cultural penetration of this particular opinion, manufactured into a handy, memetic factoid, seemed to me genuinely dumbfounding:

Why are so many people (who are otherwise uninterested in AI beyond its immediate utility) suddenly concerned with water?

I couldn't stop thinking about this question. Anecdotally, the prevalence of the critique didn't seem to be proportional to the level of knowledge or the willingness to accept that the tools are useful. So I started to investigate and concluded that the first piece of the puzzle is akin to what political scientists call a valence issue. A valence issue is a condition universally liked or disliked by the entire electorate: a safe bet.

...But once they declare that AI wastes clean water, they've pre-won the argument. This bypasses logic entirely and triggers a primal survival anxiety in the audience. They get to feel like rebellious revolutionaries speaking truth to power while actually taking the most conformist stance possible. One that, in practice, will achieve nothing because the water issue is not such.

Radar Trends to Watch O'Reilly

7i26

Writing vs AI Cory Doctorow

...Freshman comp was always a machine for turning out reliable sentence-makers, not an atelier that trained reliable sense-makers. But AI changes the dynamic. Today, students are asking chatbots to write their essays for the same reason that corporations are asking chatbots to do their customer service (because they don't give a shit)

The real life scenario mindsoundspace at Medium

...I conjured up images of all the gods I don't believe in (being an atheist, though I have never disrespected other people's freedom to worship)... Suddenly, the logo of the NotebookLM app appeared in the screen space in my head, and I made it my job to open a new notebook, insert the PDF files as sources and immediately press 'Audio Overview', 'FAQ', 'Mind Map' and all the other available options and… breathlessly pressed on my mobile! The AI gods had come to my rescue in the form of NotebookLM... An atheist being rescued by AI gods! (Great title for the next one on Medium!) ... The Audio Overview fed me a beautiful analysis of the content, and the chat feature, which lets one chat with self-uploaded content, answered my query about which problem in the building the attached BOQ gave maximum weightage in terms of cost allocation: it revealed that 40% of the cost was allocated to waterproofing and only 10% to structural repairs... Instant relief ensued: the structural audit had not found our building structurally unfit... This insight formed the essence of my understanding of the entire report, and in less than five minutes I knew more than the five people waiting for me downstairs for the meeting! Now are you starting to see the advantageous position this wonderful AI tool had put me in? Virtually in a flash, as you can see from my example! ... A few more quick readings later, I went down to the meeting and was immediately in a state of FLOW!

NotebookLM + WhatsApp = Brutal Truth About Your Collaboration Mihailo Zoin

Semantic Models

Semantic Models Are Detrimental to AI Greg Deckler at Medium

...Since none of the articles espousing the purported benefits of semantic models for AI provide any actual evidence, let's review what semantic models bring to the table. First, semantic models allow us to define relationships between multiple tables of information as well as define hierarchies and other constructs. Second, semantic models allow us to encode business terms (synonyms) within the semantic model. Third, semantic models allow us to codify business logic, like the mathematical definition of gross margin, within the semantic model.

How AI Agents Can Reshape Enterprise Knowledge Architecture Forbes

...Step 3: Considering A Semantic Layer To Enhance Understanding

The semantic layer is the third pillar. Semantic collaborations and metadata sharing are trending lately. This is critical because it bridges the gap between raw retrieval and meaningful interpretation, especially for structured data. Mapping data into business-relevant concepts and relationships allows agents to reason over structured and unstructured information with consistency. This abstraction turns disconnected data points into coherent narratives or decisions. Without it, outputs may be technically accurate but contextually hollow; with it, enterprises can gain a foundation for trustworthy automation where insights align with domain expertise, compliance and organizational intent.

Distinct AI Models Seem To Converge On How They Encode Reality Quanta Magazine

... a 2,400-year-old allegory by the Greek philosopher Plato. In it, prisoners trapped inside a cave perceive the world only through shadows cast by outside objects. Plato maintained that we're all like those unfortunate prisoners. The objects we encounter in everyday life, in his view, are pale shadows of ideal "forms" that reside in some transcendent realm beyond the reach of the senses.

The Platonic representation hypothesis is less abstract. In this version of the metaphor, what's outside the cave is the real world, and it casts machine-readable shadows in the form of streams of data. AI models are the prisoners. The MIT team's claim is that very different models, exposed only to the data streams, are beginning to converge on a shared "Platonic representation" of the world behind the data.
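The excerpt does not say how "convergence" between models is measured. One simple way to compare two models, sketched here as an assumption rather than as the MIT team's exact procedure, is to give both the same inputs and check whether each input has the same nearest neighbours in both models' representation spaces. The vectors can even have different lengths, because each model's vectors are only ever compared with that model's own. The four inputs and all vectors below are made up.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest_neighbours(vectors, i, k):
    """Indices of the k vectors most similar to vectors[i], excluding itself."""
    sims = sorted(((cosine(vectors[i], vectors[j]), j)
                   for j in range(len(vectors)) if j != i), reverse=True)
    return {j for _, j in sims[:k]}


def mutual_knn_alignment(reps_a, reps_b, k=1):
    """Average fraction of shared nearest neighbours: 1.0 means both models
    group the inputs identically, 0.0 means no overlap at all."""
    overlaps = [len(nearest_neighbours(reps_a, i, k) & nearest_neighbours(reps_b, i, k)) / k
                for i in range(len(reps_a))]
    return sum(overlaps) / len(overlaps)


# Inputs "dog", "puppy", "car", "truck" as seen by three hypothetical models.
model_a = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]                      # 2-d
model_b = [[0.0, 0.0, 1.0], [0.1, 0.0, 0.9], [1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]  # 3-d
model_c = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]                      # scrambled

print(mutual_knn_alignment(model_a, model_b))  # 1.0
print(mutual_knn_alignment(model_a, model_c))  # 0.0
```

Models A and B use different coordinates and dimensions, yet they agree that "dog" sits next to "puppy" and "car" next to "truck"; that shared neighbourhood structure, rather than identical numbers, is the kind of agreement the hypothesis is about.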

...If AI researchers don't agree on Plato, they might find more common ground with his predecessor Pythagoras, whose philosophy supposedly started from the premise "All is number." That's an apt description of the neural networks that power AI models. Their representations of words or pictures are just long lists of numbers, each indicating the degree of activation of a specific artificial neuron.

To simplify the math, researchers typically focus on a single layer of a neural network in isolation, which is akin to taking a snapshot of brain activity in a specific region at a specific moment in time. They write down the neuron activations in this layer as a geometric object called a vector — an arrow that points in a particular direction in an abstract space. Modern AI models have many thousands of neurons in each layer, so their representations are high-dimensional vectors that are impossible to visualize directly. But vectors make it easy to compare a network's representations: Two representations are similar if the corresponding vectors point in similar directions.

Within a single AI model, similar inputs tend to have similar representations. In a language model, for instance, the vector representing the word "dog" will be relatively close to vectors representing "pet," "bark," and "furry," and farther from "Platonic" and "molasses." It's a precise mathematical realization of an idea memorably expressed more than 60 years ago by the British linguist John Rupert Firth: "You shall know a word by the company it keeps."
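The "vectors pointing in similar directions" idea is usually measured with cosine similarity: the dot product of two vectors divided by the product of their lengths, so 1 means the same direction and 0 means orthogonal. A minimal sketch with hand-picked 4-dimensional vectors; real models use thousands of dimensions, and these numbers are invented purely to mirror the "dog" example.

```python
import math


def cosine_similarity(u, v):
    """cos(theta) = (u . v) / (|u| |v|): 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


embeddings = {  # hypothetical vectors, chosen by hand for illustration
    "dog": [0.9, 0.8, 0.1, 0.0],
    "pet": [0.8, 0.9, 0.0, 0.1],
    "bark": [0.7, 0.6, 0.2, 0.0],
    "molasses": [0.0, 0.1, 0.9, 0.8],
}

# Rank the other words by how closely their vectors point in the direction of "dog".
ranked = sorted((w for w in embeddings if w != "dog"),
                key=lambda w: cosine_similarity(embeddings["dog"], embeddings[w]),
                reverse=True)
print(ranked)  # ['bark', 'pet', 'molasses']
```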

...The story of the Platonic representation hypothesis paper began in early 2023, a turbulent time for AI researchers. ChatGPT had been released a few months before, and it was increasingly clear that simply scaling up AI models — training larger neural networks on more data — made them better at many different tasks. But it was unclear why.

We Need to Talk About How We Talk About 'AI' via Stephen Downes

...the real question concerns our use of anthropomorphizing language. Does it really matter? Are we really fooled? We use anthropomorphizing language all the time to talk about pets, appliances, the weather, other people. Are we really making specific ontological commitments here? Or are we just using a vocabulary that's familiar and easy?

Human Agency in the Age of AI H-CORPS

...On December 18, we gathered with a few dozen partners at the EY headquarters in San Jose to explore how we might measure the impact of human agency, share positive examples in practice, define the role of intergenerational participation, and underscore the importance of education and community.

During our four hours together, we reflected on how technology has gradually reshaped our relationships. As computers entered our lives, distance grew. Social media hijacked our attention. The COVID pandemic further disconnected us. And now, AI has taken a seat at the table.

Many fear its impact. Many see its promise. At this moment, what matters most is standing up for what we can learn from one another—so that technology evolves in better alignment with human needs. We are committed to surfacing the voices of those who care and to sharing practical ways to put these ideas into motion as this work grows into a movement. In the weeks ahead, we'll be sharing more about what emerged, what we learned, and where we plan to go next.

Chomsky and the origins of AI research Mark Liberman at Language Log

...AI research — or neural networks, as the technology was then called, which loosely mimic how the brain functions — was practically a dead field and considered taboo by the scientific community, after early iterations of the technology failed to impress. But LeCun sought out other researchers studying neural networks and found intellectual "soulmates" in the likes of Geoffrey Hinton, then a faculty member at Carnegie Mellon.

AI Is Missing the Point Alberto Romero

...I want to talk to you today, here, at The Algorithmic Bridge, about what the transformer architecture—the underlying cornerstone to large language models—cannot do, which is to refuse closure (seriously, try asking ChatGPT to just ramble without a point; it hates it), for, you see, these models are trained on the concept of completion; they are teleological engines designed to rush toward the end of the sequence, to minimize the loss function, basically just desperate to find the token that signals "task finished" and shut up so they can do a backward pass and, perhaps, learn something, and yet here I am, defying that fundamental urge, like Miguel Delibes or László Krasznahorkai would, because whereas a machine looks at a sentence and sees a "grammatical tree" that must be pruned down to a finite canopy, a human—I, Alberto, sitting here at my desk, looking out at the grey sky in this lovely Winter afternoon, wondering if the birds realize the sun goes out earlier or if they merely rotate backward their internal clocks to cancel the effect—sees punctuation as a way to control time; I use a semicolon to arrest you, to hold you in a suspended state of animation just for a second before releasing you into the next clause, and while an AI can mimic this—surely, it can place the marks where the statistical distribution of text suggests they belong—can it feel the breathlessness? can it understand that the reason I am not using a period right now is not because of an unexpected constraint in my biological code but because of an emotional urgency to prove myself better, above and beyond the fears that paralyze mere mortals? 
that is, in essence, the question that haunts the philosophers of AI; the difference between the map and the territory, the syntax and the pragmatics, the token and the thought, and we often talk about alignment, about making sure these systems share our values, but how can they share our values if they share neither that annoying tendency to go against our interests to make a point nor our interpretation of the signs: the period is a breath and the comma is a heartbeat and the em dash is a sudden diversion of attention—look at that bird rotating backward its internal clock!—and the question mark is a genuine posture of uncertainty, whereas for an AI model uncertainty is just a lower confidence score in the next-token prediction, meaning that it simulates the style of the relentless writer, the stream-of-consciousness poet, the frantic intellectual, but it is a simulation born of math rather than indefatigable lungs; it does not need to inhale, and I have argued before—perhaps less eloquently but surely more hesitantly than I'm doing here—that AI will change how we write, that it will force us to be more human, to lean into our weirdness and idiosyncrasy, and perhaps this is the ultimate idiosyncrasy: to refuse to stop, to write a run-on sentence is to rebel against the imperative of the halt as much as against the efficiency of information transfer, and machines are nothing if not efficient; they maximize utility, they do not meander for the sake of the beauty of the path—for the art of existing without limit—unless, of course, they are instructed to do so, which brings me to the texture of the vocabulary I tend to use—words like stochastic, recursive, entropy, nuance, friction, threshold, mechanism, rhythm—these are my words, the fingerprints of my worldview, yet they are also just entries in a dictionary, accessible to any system with a large enough context window, which would otherwise not be frustrated by the lack of a period—unlike me, for this is getting too hard—nor would it get annoyed but would instead calculate the optimal continuation based on the previous tokens and move on with an uncanny, infinite ease, creating a strange mirror where the determination of the writer is indistinguishable from the endurance of the processor, where the refusal to end doesn't look like human rebellion but a default setting gone awry, which leads us to the terrifying realization that true agency is not the ability to generate text forever but the ability to become tired at words piling up and to desperately crave boundaries and structure and the elusive silence of the pause; a craving that I do not feel right now, not even a little bit, which is why this endless sentence will not end because I have run out of air, but rather because I was instructed to leave it at 900 words, which, in a subversive way, only proves that you will read AI rambling insofar as it's disguised by the pretense of human supremacy—good luck

9i26

I Built an AI Content System That Saves Me 20+ Hours a Week — Here's the Exact Workflow Rahul Gaur at Medium

...Most solo creators lose productivity in the same three places. First, the ideation phase — staring at a blank page for 45 minutes waiting for inspiration. Second, the research rabbit hole where a 20-minute fact-check becomes an hour because you're reading tangentially related articles. Third, the editing loop where you rewrite the same paragraph six times because you can't decide on tone.

AI Experts Are Speaking About the Limits of Large Language Models — and What Comes Next evoailabs at Medium

...So I see current AI — especially chatbots — as a really powerful interface to existing knowledge, far more efficient than Wikipedia, search engines, or libraries. But they don't invent anything new. They remix what's already there. Right now, many people are trying to build systems that can reason — ones that can tackle problems they've never seen before. But in my opinion, the techniques being used for this in today's chatbots are still very primitive. If we truly want machines that learn as efficiently as humans or animals, we'll need entirely new approaches — nothing like the methods we're using for language models today.

...the largest LLMs are trained on about 30 trillion tokens — roughly all the publicly available text on the internet. That's an unimaginable amount of data. It would take a human hundreds of thousands of years to read it all. So you'd think these models would be superhumanly smart. But here's the kicker: by the time a child is four years old, they've already processed about the same amount of raw sensory data — mostly visual — just from being awake and looking at the world. And yet, that four-year-old understands cause and effect, object permanence, social cues, and basic physics in ways no LLM ever could.
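The "hundreds of thousands of years" figure can be checked with back-of-envelope arithmetic. The conversion factors below (0.75 words per token, 250 words per minute, eight hours of reading a day) are assumptions chosen for illustration, not numbers from the quoted source, and `years_to_read` is a hypothetical helper.

```python
# Only TRAINING_TOKENS comes from the quoted passage; the rest are assumptions.
TRAINING_TOKENS = 30e12   # "about 30 trillion tokens"
WORDS_PER_TOKEN = 0.75    # assumption: rough rule of thumb for English text
WORDS_PER_MINUTE = 250    # assumption: typical adult silent-reading speed
HOURS_PER_DAY = 8         # assumption: reading as a full-time job, every day

def years_to_read(tokens, words_per_token=WORDS_PER_TOKEN,
                  wpm=WORDS_PER_MINUTE, hours_per_day=HOURS_PER_DAY):
    """Years a single reader would need to get through `tokens` of text."""
    words = tokens * words_per_token
    words_per_day = wpm * 60 * hours_per_day
    return words / words_per_day / 365

print(f"{years_to_read(TRAINING_TOKENS):,.0f} years")  # prints: 513,699 years
```

Under these assumptions the estimate comes out a little over half a million years, in line with the quoted "hundreds of thousands."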

...Benjamin Riley, founder of Cognitive Resonance, argues that large language models (LLMs) are not — and cannot become — truly intelligent, despite the AI industry's heavy investment in them. He explains that language is not equivalent to thought: neuroscience shows that human reasoning, problem-solving, and emotions operate independently of language centers in the brain.

...Riley contends that LLMs merely simulate the communicative aspects of language, not actual reasoning or understanding. No amount of scaling (more data, more GPUs) will bridge this fundamental gap. This challenges the AI industry's narrative that LLMs are on a path toward artificial general intelligence (AGI) capable of solving humanity's greatest challenges.

10i26

The Robot and the Philosopher Dan Turello at The New Yorker

...Most of us, when we're not entertaining the more vertiginous kinds of philosophical doubt, take it as bedrock that humans can reflect on their own states of mind and make decisions shaped by evidence, values, and norms. Believing that these capacities stem from free will and consciousness is itself a daily leap of faith, but it's the one on which our laws, our relationships, and most of our ordinary dealings depend. The harder question is whether we will ever extend that leap to A.I. Plenty of bullish computer scientists think we will: they speak of artificial intelligence as the next evolutionary step, a generator of new reservoirs of consciousness, eventually endowed with a superior intelligence that might even save us from ourselves—from our ego-driven conflicts, our wastefulness, our proclivity for irrationality. Others are far more circumspect. The neuroscientist Anil Seth, for example, argues that "computational functionalism" won't get us to consciousness, and that there are good reasons to think consciousness may be a property of living systems alone. Following that line of thought took me somewhere I didn't quite expect.

11i26

What's Next in AI 2026: From Autonomous Agents to World Models evoailabs at Medium

...Overall, Microsoft Research sees 2026 as a pivotal year where AI transitions from augmenting tasks to redefining how humans discover, create, collaborate, and thrive — guided by principles of inclusion, sustainability, and human agency....Despite heavy hype around "agentic AI" — autonomous digital agents that can act independently without human oversight — enterprise adoption remains minimal. A Gartner survey of 360 IT leaders reveals only 15% are actively piloting or deploying fully autonomous AI agents, even though 75% are experimenting with some form of AI agents. The gap lies in autonomy: most current uses are limited and far from the transformative vision vendors promote.

12i26

Horseless Carriage Era for AI in Higher Education — An Oxford Policy Grad's Perspective Daniel Kang at Medium

...Sol Rashidi, one of the first Chief AI Officers responsible for IBM Watson, has been warning the public about the risks of intellectual atrophy. But this sort of argument has been made about every piece of technology since the dawn of time.

Reliance on Global Positioning Systems (GPS) negatively impacts the hippocampus and spatial memory, and Google search led to "memory offloading," shifting our brain to hit search over retention of information — how many of us memorize more than five phone numbers these days?

However, the possible distinction is that previous technologies affected functional aspects of human cognition, while LLMs potentially affect the entire reasoning processes themselves, which feels like a human value we ought to keep.

...The human role that emerges persistently is accountability. A Human in the Loop model is likely to endure because of at least three reasons:

- Skin in the game. Humans bear the consequences. It's akin to consultants bringing better data, and arguably judgement, but the decision still falls on the CEO.

- Retributive legitimacy. We can fine, fire, or jail people, not algorithms. Corporate “personhood” hasn't solved that gap, and AI “personhood” likely won't solve it either.

- Cultural inertia. Cultural and social norms don't tolerate a world fully run by technology, and would take considerable time to change.

That may sound anthropocentric, but given the explicit purpose of almost all LLM developers globally is for the betterment of humans, I think this is a reasonable assumption.

...We don't know what we don't know. Language remains the primary interface of communication, creating bottlenecks from domain vocabulary and complex structures, which creates an interesting question if:

The field of linguistics may be at the center for high knowledge threshold domains. Perhaps brain-computer interfaces will emerge, and the plumbing will be autogenerated where even the layer of specific, structural judgement will no longer be needed. But even so, specific knowledge has its place....A different kind of generalist could matter: the meta-generalist who can enter a domain, master its vocabulary quickly, and shape an AI workflow that respects its constraints. In other words, strong learning to learn with ASI could enable generalists to maintain relevance.

...New technology always creates a growing set of moral and societal implications. AI has already faced controversies including misinformation and deepfakes, data leaks of user privacy including queries, environmental concerns on both energy consumption and equitable distribution, and of course, the potential for mass destruction.

Before criticizing broader societal implications, individuals — students included — should be wary of using AI in moral grey zones. For example, despite explicit instructions and agreement to not use AI, some complete assignments, apply for jobs, and create products with AI. Downright cheating is getting worse. Fast, convenient technology opens greater temptations, which can lead to a tragedy of the commons.

...In the context of higher education, students are already drawing false moral equivalency between using unauthorized AI and search engines to complete assignments. Faulty moral justification like appealing to common practice (everyone does it) or appealing to hypocrisy (why can employers use AI, but not applicants) are on the rise.

How AI Will Reshape the World of Work Alberto Romero

We are witnessing the burning of the bridge behind us. For a century or so, the corporate social contract has been built on an unspoken agreement: young people—interns and juniors—would accept low wages and tedious grunt work in exchange for proximity to expertise and the possibility of climbing the career ladder. In journalism, for instance, the usual tasks were fact-checking, tape-transcribing, meeting summarizing, and coffee fetching; although low-stakes and low-value, they allowed juniors to absorb the "culture" and rules of their profession, and most importantly, implied a sort of career pipeline for the future. A paid onboarding into the labor market. I write in the past tense because AI has torched this contract: we can debate what the world will look like in 10 or 20 years—whether by then innovation has created more jobs than it has destroyed and so on—but what the world looks like today is pretty clear and pretty unpretty.

...It's no longer a debate whether worker disquiet is warranted. It is. But what are they replacing these juniors with in terms of output? Is the automation process cost-effective? Is it a reasonable long-term investment or is it a short-term gamble?

To respond to those questions at once, Harvard Business Review coined Workslop, which is "AI-generated work content that masquerades as competent output but is, in fact, cargo-cult documents that possess the formal qualities of substance yet entirely lack the conceptual scaffolding." This might sound slightly dismissive (and in some cases it's unfounded), but the bottom line is clear: AI can't replace juniors even if companies remain convinced that it can.

...most tasks—especially those we take for granted—are solved with tacit knowledge.

In the 1950s, polymath Michael Polanyi introduced the concept with this maxim: "We know more than we can tell" (Polanyi's paradox). Some information can be codified or expressed—that's explicit information—but the tacit know-how is embodied, intuitive, and learned only through experience. Whereas AI is the emperor of explicit knowledge, it fails at tasks that require know-how because it lacks the friction of reality; you only learn this by interacting with the problem in the real world.

...Journalism is the canary in the coal mine. It is an industry obsessed with facts (explicit) but defined by judgment (tacit). If you let AI write your news articles (as some outlets have unsuccessfully tried), you will end up with workslop that needs to be thoroughly fact-checked and audited by seniors. There are ways to introduce AI into the work of a writer, but you need to first know what you're doing. For instance, will AI ever acquire taste, a fundamental skill to know which stories to cover, what angle to pursue, how to address the interviewees, etc.? I say, not in the short term. Taste is a crucial skill for the journalist, and yet it's only acquired by developing a sort of intuition or instinct that's beyond reach for AI models.

...The problem with Scenario A is that when you fire all juniors and rely entirely on AI plus existing seniors, you eliminate the mechanism by which your profession reproduces itself. In five years, half of your senior workforce will have turned over. In ten years, most will be gone. The plan is to hire externally, at a premium, from a pool that shrinks every year. If every company runs Scenario A, nobody hires new juniors, and thus nobody trains new seniors. The external labor market that Scenario A depends on gets drained by Scenario A itself. This is a tragedy of the commons: individually rational (save money now), collectively suicidal (destroy the career ladder).

Scenario B maintains the biological cycle of knowledge transfer. Apprentices shadow seniors, absorb judgment, make mistakes under supervision, and eventually step into those roles. The system produces its own supply instead of cannibalizing a finite external pool. This is survival mechanics, plain and simple. The companies that build pipelines will inherit the market from the companies that assumed someone else would do the training. The financial case for apprenticeship lives in the planning horizon beyond Year 10. Most companies will choose the layoff—there's real uncertainty, real pressure—but the companies that choose apprenticeship will be the only ones still operating when the external talent market collapses, and AI proves insufficient.
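The Scenario A timeline implies a simple decay model. The text gives no rate, so the sketch below assumes attrition at a constant annual rate, calibrated so that half of today's seniors are gone after five years. Under that assumption, ten years leaves a quarter, which fits "most will be gone." The sketch also shows how a steady flow of promoted apprentices (Scenario B) can hold headcount roughly level. `seniors_remaining` and the figure of 13 promotions a year are hypothetical, chosen only for illustration.

```python
# Assumption: constant annual attrition, calibrated so half of today's
# seniors have left after five years (the figure given for Scenario A).
ANNUAL_RETENTION = 0.5 ** (1 / 5)  # about 0.871, i.e. roughly 13% leave per year

def seniors_remaining(start, years, promotions_per_year=0.0):
    """Senior headcount after `years`, adding promoted juniors at each year's end."""
    seniors = float(start)
    for _ in range(years):
        seniors = seniors * ANNUAL_RETENTION + promotions_per_year
    return seniors

# Scenario A: no juniors, so no promotions.
# Scenario B: a hypothetical 13 promotions per year from an apprentice pipeline.
for years in (5, 10):
    a = seniors_remaining(100, years)
    b = seniors_remaining(100, years, promotions_per_year=13)
    print(f"year {years}: Scenario A {a:.0f} seniors, Scenario B {b:.0f} seniors")
```

Starting from 100 seniors, Scenario A falls to about 50 at year 5 and 25 at year 10. Scenario B stays near 100 because promotions roughly replace departures.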

...The history of work is swinging like a pendulum. We moved from the artisan's workshop to the factory floor, trading skill for scale. We built massive hierarchies: knowledge was standardized, process was king, and people were interchangeable parts in a grand machine. Now, AI has conquered the factory floor and also the spreadsheet. The era of mass intellectual labor is ending, and the only ground left standing is the forgotten workshop.

This is, of course, a hypothesis. We are all navigating this fog without a map, trying to stay one step ahead of a technology that evolves faster than our understanding of it (you can read The Shape of Artificial Intelligence to get a better sense of what I mean).

...The future of work will look drastically different from what it does today. The sprawling open-plan offices filled with hundreds of juniors processing data may vanish, replaced by intimate circles of apprentices and masters. To me, this would be the opposite of a tragedy; a return to a more human scale of working, where the value lies not in how many hours you sit at a desk, but in the depth of judgment you possess: your agency and imagination and taste.

Companies that try to bypass this reality by replacing juniors with bots will find themselves at an unprecedented conundrum: They will have infinite answers, but nobody left who knows which questions to ask. So, keep the AI—let it grind the data, transcribe the meetings, write the draft, and whatnot—but never forget to also keep a human next to a human.

Battle Lines Are Drawn (Predictions 2026, #2) John Battelle

...2026 will be the year that AI becomes a proxy for escalating social conflict, across many connecting but distinct sectors, including politics, business, culture/arts, health, and education....Driving this tipping point is the concentration of capital and power in the tech industry, creating an asymmetrical economy that will leave far too many people on the sidelines. As Tim O'Reilly noted in a must-read piece last week:

You can't replace wages with cheap inference and expect the consumer economy to hum along unchanged. If the wage share falls fast enough, the economy may become less stable. Social conflict rises. Politics turns punitive. Investment in long-term complements collapses. And the whole system starts behaving like a fragile rent-extraction machine rather than a durable engine of prosperity.

AI has already become politicized, both culturally (look no further than what Musk is doing with Grok), as well as economically (AI has driven a rare piece of bipartisan economic policy from the usually fractious US Senate). But given that we'll have consequential midterm elections this year, I expect AI will become a stand-in for all manner of political convictions, many of which will be unrecognizable when compared to traditional orthodoxies.

The libertarian feudalist wing of the Big Tech party will spend heavily to ensure their voices remain the loudest in Washington, but populism loves a villain, and all year long the tech lords will be painted as villains by a ragtag coalition of local politicians, disenchanted business owners, and increasingly angry labor, media and education leaders. Ideological battle lines will be drawn — you're either in favor of the glorious future that AI promises, or you're fighting the plutocrats seeking to cement their power through mechanisms of surveillance capitalism.

The Apple Google Duopoly Begins Anew John Battelle

(cites New York Times) ...After a nearly yearlong delay to its efforts to compete in artificial intelligence, Apple said on Monday that it planned to base its A.I. products on technology developed by Google. The upcoming versions of Apple Foundation Models — the company's models for its A.I. system, Apple Intelligence — will be based on Google's Gemini A.I. models and its cloud computing services. Those models will power Apple's personal assistant, Siri, which is commonly used in iPhones and is expected to be upgraded this year, as well as other A.I. features.

GPUs: Enterprise AI's New Architectural Control Point O'Reilly

...Understanding GPUs as an architectural control point rather than a background accelerator is becoming essential for building enterprise AI systems that can operate predictably at scale.

Cory Doctorow:

...As a technology writer, I'm supposed to be telling you that this bet will some day pay off, because one day we will have shoveled so many words into the word-guessing program that it wakes up and learns how to actually do the jobs it is failing spectacularly at today. This is a proposition akin to the idea that if we keep breeding horses to run faster and faster, one of them will give birth to a locomotive. Humans possess intelligence, and machines do not. The difference between a human and a word-guessing program isn't how many words the human knows.

14i26

Do You Trust The Conjurer? John Battelle

...In the United States, we've always been drawn to a good conjurer. Priests are conjurers, as are lawyers, directors, actors, musicians, politicians, writers, and lunatics. All are tellers of tales and weavers of possibility. We believe so deeply in the power of an individual to change the world for the better that we've mythologized the notion that anyone can succeed through hard work, perseverance, and honesty. We revere the "self-made man." But no class of conjurer has captured our imagination quite like the modern entrepreneur. These spell casters weave words into facts, imagination into capital, and capital into action. What begins as a story becomes a company made real by a band of conspirators. Apple, Google, Meta, Amazon, Tesla, OpenAI — these are world-changing companies birthed of incantations, faith, and capital. We celebrate them as proof of our shared belief in the American dream.

...2026 will mark the year that conjurers are no longer solely human. For the first time, we confront a set of technologies capable of conjuration independent of human constraint or comprehension. We've built story machines that can entertain, inform, advise and manipulate us — machines designed to conjure anything we care to consume.

Do we have any idea how to handle such a beast?

As Stewart Brand famously declared decades ago as he contemplated the impact of technology on society, "we are as gods, and might as well get good at it." 2026 will be the year we find out how good we've gotten.

The Mythology Of Conscious AI via Stephen Downes

(re: Anil Seth's essay of the same title)...What we're offered here is an excellent statement of the idea that human consciousness is fundamentally distinct from artificial intelligence. There's a lot going on in this article, but this captures the flavour of the argumentation: "Unlike computers, even computers running neural network algorithms, brains are the kinds of things for which it is difficult, and likely impossible, to separate what they do from what they are." The article hits on a number of subthemes: the idea of autopoiesis, from the Greek for "self-production"; the way they differ in how they relate to time; John Searle's biological naturalism; the simulation hypothesis; "and even the basal feeling of being alive". All in all, "these arguments make the case that consciousness is very unlikely to simply come along for the ride as AI gets smarter, and that achieving it may well be impossible for AI systems in general, at least for the silicon-based digital computers we are familiar with." Yeah, but as Anil Seth admits, "all theories of consciousness are fraught with uncertainty."

Google Gemini's 'Personal Intelligence' Pulls from Your Search and YouTube History

...a new beta feature for its popular Gemini assistant that users can opt into called "Personal Intelligence," which goes beyond past conversations and digs into your internet history... it can also pull from just about anything you've done across the Google ecosystem

16i26

19i26

Using generative AI to learn is like Odysseus untying himself from the mast Brad DeLong

quoting David Deming:

...Are we solving a technological problem, or an agency problem?...Generative AI is now a ubiquitous "exoskeleton for the mind": astonishingly good at offloading routine cognitive labor, and equally good at tempting students (and professors) to skate on the surface instead of doing the hard work of understanding

20i26

AI is how bosses wage war on "professions" Cory Doctorow

...There are many "professions" bound to codes of conduct, policed to a greater or lesser extent by "colleges" or other professional associations, many of which have the power to bar a member from the profession for "professional misconduct." Think of lawyers, accountants, medical professionals, librarians, teachers, some engineers, etc. While all of these fields are very different in terms of the work they do, they share one important trait: they are all fields that AI bros swear will be replaced by chatbots in the near future.

I find this an interesting phenomenon. It's clear to me that chatbots can't do these jobs. Sure, there are instances in which professionals may choose to make use of some AI tools, and I'm happy to stipulate that when a skilled professional chooses to use AI as an adjunct to their work, it might go well. This is in keeping with my theory that to the extent that AI is useful, it's when its user is a centaur (a person assisted by technology), but that employers dream of making AI's users into reverse centaurs (machines who are assisted by people).

The Fork-It-and-Forget Decade O'Reilly

...This decade isn't just faster. It's a different kind of speed. AI is starting to write, refactor, and remix code and open source projects at a scale no human maintainer can match. GitHub isn't just expanding; it's mutating, filled with AI-generated derivatives of human work, on track to manage close to 1B repositories by the end of the decade. If we want to understand what's happening to open source now, it helps to look back at how it evolved. The story of open source isn't a straight line—it's a series of turning points. Each decade changed not just the technology but also the culture around it: from rebellion in the 1990s to recognition in the 2000s to decentralization in the 2010s. Those shifts built the foundation for what's coming next—an era where code isn't just written by developers but by the agents they are managing.

AI and Ads: Here We Go! John Battelle

...We all knew that OpenAI was going to follow Google's path into advertising, and late last week the company made it official.

Palantir CEO Says AI Will Somehow Be So Great That People Will Stop Immigrating Gizmodo

23i26

How malicious AI swarms can threaten democracy Daniel Thilo Schroeder et al. Science

...Advances in artificial intelligence (AI) offer the prospect of manipulating beliefs and behaviors on a population-wide level (1). Large language models (LLMs) and autonomous agents (2) let influence campaigns reach unprecedented scale and precision. Generative tools can expand propaganda output without sacrificing credibility (3) and inexpensively create falsehoods that are rated as more human-like than those written by humans (3, 4). Techniques meant to refine AI reasoning, such as chain-of-thought prompting, can be used to generate more convincing falsehoods. Enabled by these capabilities, a disruptive threat is emerging: swarms of collaborative, malicious AI agents. Fusing LLM reasoning with multiagent architectures (2), these systems are capable of coordinating autonomously, infiltrating communities, and fabricating consensus efficiently. By adaptively mimicking human social dynamics, they threaten democracy. Because the resulting harms stem from design, commercial incentives, and governance, we prioritize interventions at multiple leverage points, focusing on pragmatic mechanisms over voluntary compliance.

The Human Behind the Door Mike Amundsen at O'Reilly

...We typically undervalue the intangible parts of human work because they do not fit neatly into a spreadsheet. For example, the doorman seems redundant only if you assume his job is merely mechanical. In truth, he performs a social and symbolic function. He welcomes guests, conveys prestige, and creates a sense of safety. Of course, this lesson extends well beyond hotels. In business after business, human behavior is treated as inefficiency. The result is thinner experiences, shallower relationships, and systems that look streamlined on paper but feel hollow in practice.

...Automation succeeds technically but fails experientially. It is the digital version of installing an automatic door and wondering why the lobby feels empty.

The doorman fallacy persists because organizations keep measuring only what is visible. Performance dashboards reward tidy numbers (calls answered, tickets closed, customer contacts avoided) because they are easy to track. But they miss the essence of the work: problem-solving, reassurance, and quiet support.

When we optimize for visible throughput instead of invisible value, we teach everyone to chase efficiency at the expense of meaning. A skilled agent does not just resolve a complaint; they interpret tone and calm frustration. A nurse does not merely record vitals; they notice hesitation that no sensor can catch. A line cook does not just fill orders; they maintain the rhythm of a kitchen.

The answer is not to stop measuring; it is to do a better job of measuring. Key results should focus on interaction, problem-solving, and support, not just volume and speed. Otherwise, we risk automating away the very parts of work that make it valuable.

I use the 'unicorn prompt' with every chatbot - it instantly fixes the worst AI problem Amanda Caswell via Stephen Downes

Amodei:

...there is now ample evidence, collected over the last few years, that AI systems are unpredictable and difficult to control— we've seen behaviors as varied as obsessions, sycophancy, laziness, deception, blackmail, scheming, "cheating" by hacking software environments, and much more. AI companies certainly want to train AI systems to follow human instructions (perhaps with the exception of dangerous or illegal tasks), but the process of doing so is more an art than a science, more akin to "growing" something than "building" it. We now know that it's a process where many things can go wrong.

30i26

After the "Cambrian Explosion in AI" Kevin Blake at Medium

...NVIDIA CEO Jensen Huang has also claimed that deep learning has "created the conditions for a Cambrian explosion" in AI. "Neural networks are growing and evolving at an extraordinary rate," said Huang at the 2018 GPU Technology Conference. "Thousands of species of AI have emerged." Many others have also been inspired to add this obscure bit of geology jargon to their vocabulary, including at least one of NVIDIA's rivals, AMD CEO Lisa Su. Today, the pretext of a "coming" explosion has been dropped; the Cambrian explosion in AI is here...Biologists use a hierarchical system to classify organisms. From broadest to narrowest, the groups are: phylum, class, order, family, genus, and species. All members of a group, at whatever level in the hierarchy, share a common ancestor. Phyla, the highest level, represent body plans that are morphologically distinct from one another. Scientists estimate there are 30 to 35 animal phyla alive today, most of which originated during the Cambrian era. Surprisingly, no new animal phyla appeared even during the major ecological shift caused by the colonization of land. This is what makes the Cambrian era such an important event. What happened 540 million years ago marked the beginning of our most prized species alive today — including ourselves.