9x25

It's Obviously the Chatbots Alberto Romero

...Ever since Meta and OpenAI first released their respective AI short-form video feeds two days ago, I've been afflicted with an ailment that leaves me bedridden; there's so much funny content in Vibes and Sora that I can't find the moment to get up from under my sheets. My unprecedented social media addiction feels absolutely and irremediably insurmountable...

...Just like previous technologies—phones, social media, the internet, TV—are not the sole causes of all the mental health problems and various social diseases that happened concurrently, AI and chatbots are not the only reason why users are experiencing psychotic breaks, or falling for obvious sugarcoating, why teenagers can't build sane relationships or have casual sex, why workers are losing their jobs or not finding any, or why we are all addicted to our screens.

AI products might be part of the picture (surely a small one, given how little time they've been among us at this level of ubiquity) but it's an epistemic mistake to let the novelty-driven biases and the financial-driven motivations of the commentariat—meaning all of us who participate in "the zeitgeist" and "the discourse," though not in equal measure—override our hard-earned evergreen logic.

Whatever is newest need not also be the cause.

Fail to notice when you're falling for that alluring belief, and you've stepped right into recency bias, amplified by the availability heuristic, and then wrapped up into a full-blown techno-moral panic. We grab the shiniest thing in front of us and hang every old affliction on its neck, as if psychosis, validation-seeking, loneliness, depression, unemployment, or addiction didn't predate the chatbot.

So no, it's not obviously the chatbots.

Although you'd do well to read human prose now and then. Subscribe for more of that.

10x26

Everyday AI Agents David Michelson at O'Reilly

...generative AI has opened up a world of new capabilities, making it possible to contribute to technical work that previously required coding knowledge or specialized expertise. As Tim O'Reilly has put it, "the addressable surface area of programming has gone up by orders of magnitude. There's so much more to do and explore."...While chatbots are great for answering questions and generating outputs, AI agents are designed to take action. They are proactive, goal-oriented, and can handle complex, multi-step tasks. If we're often encouraged to think of chatbots as bright but overconfident interns, we can think of AI agents like competent direct reports you can hand an entire project to. They've been trained, understand their goals, can make decisions and employ tools to achieve their ends, all with minimal oversight.

What Is 'Slopcore'? Stephen Johnson at lifehacker

Also known as "AI slop," slopcore's aesthetic comes from people using AI as a collaborator instead of a tool, leaving the machines to make artistic choices. It's marked by the strangely off, the almost-real, and the uncanny vibe of machines imitating humanity. Slopcore often depicts deeply emotional subjects, but the lack of depth and insight makes it uniquely disquieting.

At first glance, slopcore photos and videos appear realistic, but a closer look reveals misplaced anatomy, impossible geometry, and a weird "sheen" that comes from surfaces being too smooth and lighting being too perfect. Slopcore music has the same vibe, in audible form. Instruments sound bland and mid and vocals sound eerie due to attempts to sound "emotional" but being disconnected from actual emotions.

12x25

Moloch's bargain? Mark Liberman at Language Log

quoting an article by Batu El and James Zou:

Abstract: Large language models (LLMs) are increasingly shaping how information is created and disseminated, from companies using them to craft persuasive advertisements, to election campaigns optimizing messaging to gain votes, to social media influencers boosting engagement. These settings are inherently competitive, with sellers, candidates, and influencers vying for audience approval, yet it remains poorly understood how competitive feedback loops influence LLM behavior. We show that optimizing LLMs for competitive success can inadvertently drive misalignment.

...and then veering to: why Moloch?

...which points to Ruby on Moloch:

Moloch is the personification of the forces that coerce competing individuals to take actions which, although locally optimal, ultimately lead to situations where everyone is worse off. Moreover, no individual is able to unilaterally break out of the dynamic. The situation is a bad Nash equilibrium. A trap.
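The "bad Nash equilibrium" can be made concrete with a two-player game. This is a minimal sketch, assuming a prisoner's-dilemma-style payoff table with made-up numbers; the strategy names `restrain` and `exploit` are hypothetical labels, not from the source. Each player does better by exploiting whatever the other does, so mutual exploitation is the only equilibrium even though mutual restraint pays both players more.

```python
from itertools import product

# Hypothetical payoffs (row player, column player); illustrative numbers only.
# "restrain" = compete fairly, "exploit" = optimize hard for the competitive metric.
PAYOFFS = {
    ("restrain", "restrain"): (3, 3),
    ("restrain", "exploit"): (0, 5),
    ("exploit", "restrain"): (5, 0),
    ("exploit", "exploit"): (1, 1),
}
STRATEGIES = ("restrain", "exploit")


def pure_nash_equilibria(payoffs, strategies):
    """Return profiles where neither player gains by deviating on their own."""
    equilibria = []
    for a, b in product(strategies, repeat=2):
        pa, pb = payoffs[(a, b)]
        # Best payoff each player could reach by switching, holding the other fixed.
        best_a = max(payoffs[(alt, b)][0] for alt in strategies)
        best_b = max(payoffs[(a, alt)][1] for alt in strategies)
        if pa == best_a and pb == best_b:
            equilibria.append((a, b))
    return equilibria


eq = pure_nash_equilibria(PAYOFFS, STRATEGIES)
print(eq)  # [('exploit', 'exploit')]
print(PAYOFFS[eq[0]], "vs", PAYOFFS[("restrain", "restrain")])  # (1, 1) vs (3, 3)
```

No single player can escape by switching to `restrain` alone, which is the "no individual is able to unilaterally break out" part of the quote.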

13x25

The Architect's Dilemma Heiko Hotz at O'Reilly

The agentic AI landscape is exploding. Every new framework, demo, and announcement promises to let your AI assistant book flights, query databases, and manage calendars. This rapid advancement of capabilities is thrilling for users, but for the architects and engineers building these systems, it poses a fundamental question: When should a new capability be a simple, predictable tool (exposed via Model Context Protocol, MCP) and when should it be a sophisticated, collaborative agent (exposed via Agent2Agent Protocol, A2A)?...This essay draws a line where it matters for architects: the line between MCP tools and A2A agents. I will introduce a clear framework, built around the "Vending Machine versus Concierge" model, to help you choose the right interface based on your consumer's needs. I will also explore failure modes, testing, and the powerful Gatekeeper Pattern that shows how these two interfaces can work together to create systems that are not just clever, but truly reliable.

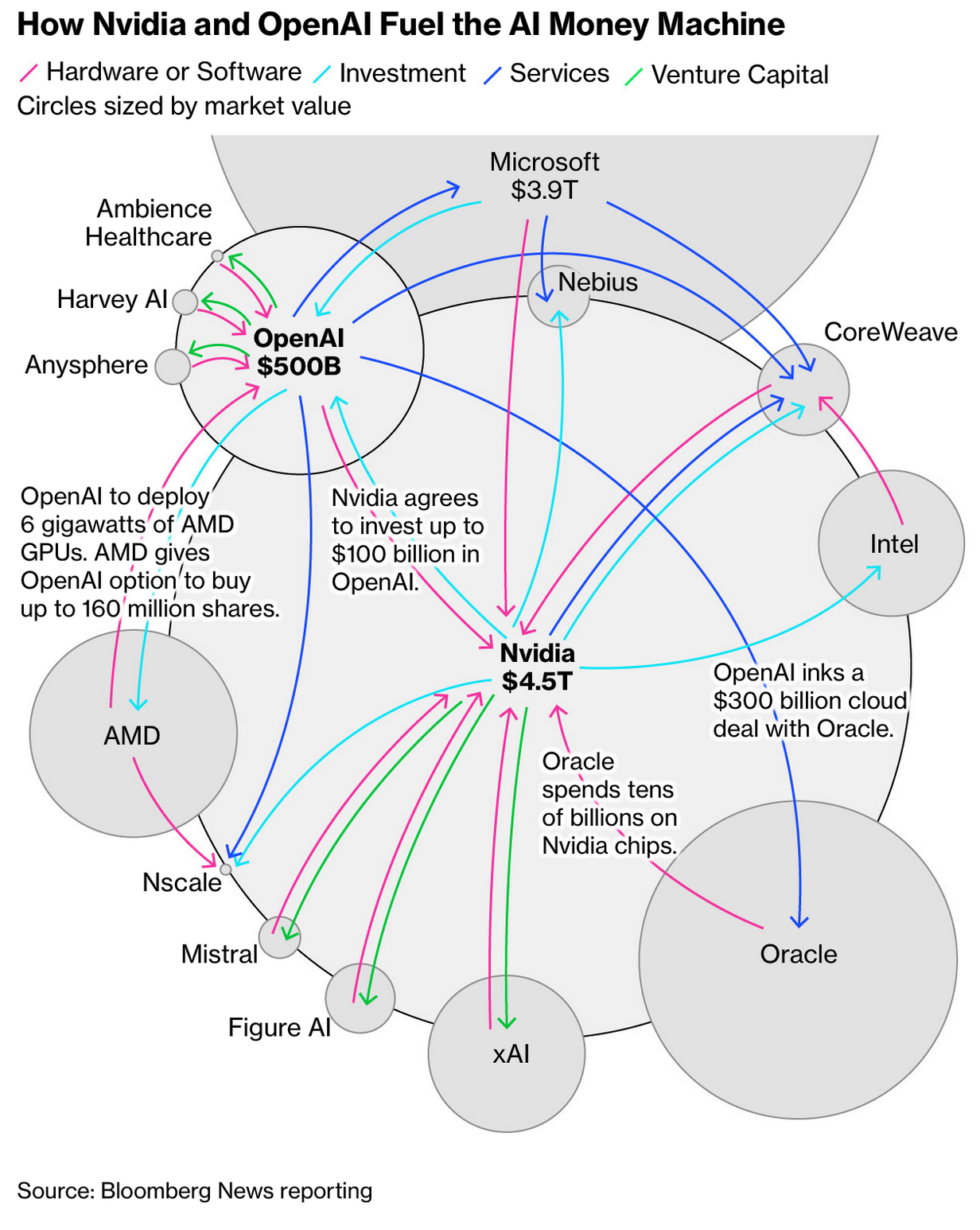

Circular deals among AI companies flowingdata.com

AI Just Had Its "Big Short" Moment Will Lockett at Medium

...no AI company or AI infrastructure is profitable. They are all losing money hand over fist. As such, they are using equity financing (selling shares of the company) or debt financing (borrowing money) to keep the lights on. However, because of the perceived notion of an AI race, the muddiness around AI's real-world performance and the misinformed idea that an AI with more infrastructure behind it will be better, these companies' values are tied to their expenditure. So they are also using equity and debt financing to spend hundreds of billions of dollars on AI expansion, which in turn raises their value, enabling more equity and debt financing to spend more and increase the value further and so on. However, not only are current AI models not accurate enough to be useful and profitable tools, but they have also reached a point of major diminishing returns. As such, the colossal amount of cash being pumped into them is only marginally improving them, meaning they will remain too inaccurate to even get close to their promised usefulness.

This is an obvious bubble. Values are being artificially pumped up when the core of the business is far from solid. We are even starting to get things like circular financing (Nvidia & OpenAI's $100 billion chip deal) and AI companies hiding debt with SPVs (Meta's $26 billion debt bid), which painfully mirrors other bubbles like the dot-com bubble.

...it turns out the AI industry has accumulated $1.2 trillion worth of debt, of which almost all has been sold on as investment-grade securities. That means more high-grade debt is now tied to AI than to US banks!

Cognitive Science and A.I. research study language, not intelligence ykulbashian at Medium

...Every natural language is a mode of communication, an attempt at aligning understanding across a group of people. Since it performs a shared function, it must do so in a way that makes sense in a shared medium. We can only contrive words that refer to things the world makes manifest to all involved — both in the physical and phenomenological sense — and about which we want to have conversations. It makes no sense to have a word for my unique, momentary viewpoint of the world, only for "universalized" views of it detached from my immediate and unreplicable experiences. Even words like "individual", "subjective", or "perspective" refer to everyone's understanding of individuality, subjectivity, and perspective, which is why everyone is able to use them. Thus language, from the start, effaces any uniqueness in individual mental experiences; people can only express their thoughts by translating them into common terms.

...Words are also discrete symbols; there is no language so mercurial that it lacks fixed, primitive units of meaning such as words or morphemes. These are defined and codified before they can be used, via mutual agreement. The mind itself may be fluid and ever-changing, but language cannot be so — otherwise no "thing" could be discussed. Therefore, language by its very nature splits the world of experience into discrete, commonly understood, recurring entities and events. Putting something into words or symbols is a means to gaining clarity and consistency about it; its content must be perceived and framed as a consistent "thing".

...Semantics is not the study of individual meaning (which after all may not exist), but the study of extrinsic social meaning, of dictionaries and common cultural artifacts. It is an analysis of our shared abstraction layer, our facade or interface to one another. When we explore how minds acquire and structure individual "meaning", we are really analyzing those terms that we've already established within the medium of communication. We are looking for explanations for "categories", or "thoughts", or "consciousness", or "qualia", etc., all of which are shared, universalized inventions. Any study of cognition built on top of shared symbols must be recognized first of all to be an artificial, effortful construct.

Subversion of the Human Aura: A Crisis in Representation N. Katherine Hayles American Literature (2023)

The AI that we'll have after AI Cory Doctorow

Sam Altman: Lord Forgive Me, It's Time to Go Back to the Old ChatGPT gizmodo

Is AI Conscious? A Primer on the Myths and Confusions Driving the Debate Stephen Downes

Magic Words: Programming the Next Generation of AI Applications O'Reilly

...You can see what's happening here. Magic words are being enhanced and given a more rigorous definition, and new ones are being added to what, in fantasy tales, they call a “grimoire,” or book of spells. Microsoft calls such spells “metacognitive recipes,” a wonderful term that ought to stick, though for now I'm going to stick with my fanciful analogy to magic. At O'Reilly, we're working with a very different set of magic words. For example, we're building a system for precisely targeted competency-based learning, through which our customers can skip what they already know, master what they need, and prove what they've learned. It also gives corporate learning system managers the ability to assign learning goals and to measure the ROI on their investment.

...Magic words aren't just a poetic image. They're the syntax of a new kind of computing. As people become more comfortable with LLMs, they will pass around the magic words they have learned as power user tricks. Meanwhile, developers will wrap more advanced capabilities around those that come with any given LLM once you know the right words to invoke their power. Each application will be built around a shared vocabulary that encodes its domain knowledge. Back in 2022, Mike Loukides called these systems "formal informal languages." That is, they are spoken in human language, but do better when you apply a bit of rigor. And at least for the foreseeable future, developers will write “shims” between the magic words that control the LLMs and the more traditional programming tools and techniques that interface with existing systems, much as Claire did with ChatPRD. But eventually we'll see true AI to AI communication. Magic words and the spells built around them are only the beginning. Once people start using them in common, they become protocols. They define how humans and AI systems cooperate, and how AI systems cooperate with each other. We can already see this happening. Frameworks like LangChain or the Model Context Protocol (MCP) formalize how context and tools are shared. Teams build agentic workflows that depend on a common vocabulary of intent. What is an MCP server, after all, but a mapping of a fuzzy function call into a set of predictable tools and services available at a given endpoint? In other words, what was once a set of magic spells is becoming infrastructure. When enough people use the same magic words, they stop being magic and start being standards—the building blocks for the next generation of software.
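The closing question, an MCP server as "a mapping of a fuzzy function call into a set of predictable tools and services available at a given endpoint," can be illustrated with a toy dispatcher. This is a minimal sketch and not the Model Context Protocol or its SDK: the `TOOLS` registry, the tool functions, and the substring matching that stands in for a model's fuzzy intent recognition are all hypothetical.

```python
from typing import Callable, Dict


# Hypothetical "predictable tools": plain deterministic functions.
def word_count(text: str) -> str:
    return str(len(text.split()))


def to_upper(text: str) -> str:
    return text.upper()


# Registry mapping a shared vocabulary of intents ("magic words") to tools.
TOOLS: Dict[str, Callable[[str], str]] = {
    "count words": word_count,
    "shout": to_upper,
}


def dispatch(request: str, payload: str) -> str:
    """Resolve a free-form request to one registered tool.

    In a real system the LLM would pick the tool; substring matching
    stands in for that fuzzy step here.
    """
    lowered = request.lower()
    for phrase, tool in TOOLS.items():
        if phrase in lowered:
            return tool(payload)  # predictable part: same input, same output
    raise LookupError(f"no tool matches: {request!r}")


print(dispatch("Please count words in this", "magic words become standards"))  # 4
print(dispatch("shout it", "protocols"))  # PROTOCOLS
```

The fuzzy part (choosing a tool from loosely worded intent) and the predictable part (running a fixed function) are kept separate, which is the division the passage attributes to MCP.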

...Despite all the technologies of thought and feeling we have invented to divine an answer—philosophy and poetry, scripture and self-help—life stares mutely back at us, immense and indifferent, having abled us with opposable thumbs and handicapped us with a consciousness capable of self-reference that renders us dissatisfied with the banality of mere survival. Beneath the overstory of one hundred trillion synapses, the overthinking animal keeps losing its way in the wilderness of want.

Reality Bites Back Josh Rose at Medium

...People are people, and we have an extraordinary attachment to our humanity. And, by the very nature of that, we have a bit of an aversion to things that don't feel as real as we are. Or at the very least, it can just make us feel a little ick.

This is A.I. imagery now and, as far as I can tell, going forward. A brand needs to let the world know that the image on their campaign is A.I. with a label. And that label is a repellent. Sure, small brands will gladly disregard, and for them and their cheap work they weren't going to pay much money for anyway, A.I. will work just fine. And if you were a photographer who catered to small brands, doing small work then yes, you'll be replaced. But this is all in line with a race to the bottom that was already well under way before A.I. Before anyone had heard of an LLM, the entire culture of medium-to-low-end photography was being taken over by mobile photography, influencer campaigns, template-driven platforms (Canva, Squarespace, Shopify), automated digital marketing and all-you-can-download imagery platforms (MotionArray, Envato, Storyblocks). Mid-level photography's role within culture overall has been on a rapid decline and A.I. is more a reflection of that than a creator of it.

Silicon Valley Is Obsessed With the Wrong AI Alberto Romero

...The main motivation for Wang et al. to explore what they call the Hierarchical Reasoning Model (HRM) is that LLMs require chain of thought (CoT) to do reasoning. This is expensive, data-intensive, and high-latency (slow). CoT underlies every commercial LLM worth using nowadays. You can't get ChatGPT to solve math and coding problems without it.

...the famous transformer (Vaswani et al., 2017), the basis of all modern LLMs, removed both recurrence and convolution in favor of the attention mechanism, hence the title "Attention is all you need." Interestingly, the attention mechanism itself is loosely inspired by the brain, but that's as far as modern LLMs resemble our cognitive engine (which is to say, not much).
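For readers who want to see what "the attention mechanism" computes, here is a minimal pure-Python sketch of scaled dot-product attention from Vaswani et al. (2017), softmax(QKᵀ/√d_k)·V, for a single head with no masking and no learned projections. The tiny matrices are made up for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output row is the attention-weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
# The query matches the first key, so the first value vector dominates the output.
print(attention(Q, K, V))
```

There is no recurrence or convolution here: each query compares itself directly against every key in one step.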

Cartography of generative AI via flowingdata

AI Is Reshaping Developer Career Paths O'Reilly

The Naivete of AI-Driven Productivity Alberto Romero

HBR highlights that workslop is a phenomenon distinct from mere outsourcing because it uniquely employs the machine as an intermediary to offload cognitive labor onto another, unsuspecting human; a kind of bureaucratic hot potato that, according to one beleaguered retail director quoted by HBR, results in a cascading series of time-sinks: the initial time wasted in receiving the slop, the subsequent time wasted in verifying its hollow core through one's own research, the meta-time wasted coordinating meetings to address the foundational inadequacy, and the final, tragicomic time wasted in simply redoing the work from scratch.

...The line "AI takes over tasks, not jobs" is often read as comfort: "we get to keep our jobs!" But the reality is harsher: by splitting work into fragments, AI forces us into the role of coordinators, forever patching together half-finished pieces; it's a kind of labor more exhausting and delicate—and one for which we're worse suited—than the job we had before. This is the great unspoken truth that benchmarks like GDPval cannot capture: the majority of human work is not the crisp, measurable deliverable but the intangible, fuzzy, and utterly essential labor of context-switching, nuance-navigation, ambiguity-management, and task-coordination—the very "fake email jobs" we love to deride (I work a fake email job, by the way, with non-fake emails) but which constitute the glue holding our complex systems together.

...I can only conclude that the promise of AI-driven productivity is not technically wrong, but, at the very least, sociologically naive, a fantasy that fails to account for the hopelessly inefficient and profoundly faint human spirit that the workplace is, for better or worse, designed to accommodate. So you're left with more fake emails than you can read, more fake jobs than the economy can sustain, and an uncanny feeling that it's all crumbling down. Fortunately, at 5 pm, you head home to read something that's not an email. You open the bestselling novel you bought yesterday, ready to forget about it all. Only to realize it is as fake as everything else.

You Have No Idea How Screwed OpenAI Actually Is Will Lockett on Medium

...Data centres are expensive to use. They cost roughly 3–5 times their build cost in operational costs over their 15-year lifespan, averaging out to an annual operational cost of 26% of their build cost. But to utilise a data centre, you need AI developers, people collecting data, people sorting data, people beta testing new models and such. This is why data centre operational costs are only around 40% of an AI company's operational costs.
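The 26% figure follows from the other numbers in the quote: 3 to 5 times the build cost spread over 15 years is 20% to 33% a year, and the midpoint, 4 times, gives about 26.7%. The sketch below reproduces that arithmetic and then applies the quote's claim that data-centre costs are only around 40% of total operating costs. The $10 billion build cost is a made-up input for illustration.

```python
def annual_opex_fraction(lifetime_multiple: float, lifespan_years: float = 15) -> float:
    """Annual operating cost as a fraction of build cost."""
    return lifetime_multiple / lifespan_years


# Lifetime operating cost of 3x, 4x, 5x the build cost over 15 years.
for multiple in (3, 4, 5):
    print(multiple, round(annual_opex_fraction(multiple) * 100, 1))
# prints: 3 20.0, then 4 26.7, then 5 33.3

build_cost = 10e9  # hypothetical $10B data centre
dc_opex = build_cost * annual_opex_fraction(4)  # yearly data-centre running cost
total_opex = dc_opex / 0.40  # if data centres are ~40% of total operating costs
print(round(dc_opex / 1e9, 2), round(total_opex / 1e9, 2))  # 2.67 6.67
```

Put differently, if the 40% figure holds, a company's total yearly operating spend is about 2.5 times its data-centre running costs.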

...AI hallucinations are one of the best bits of PR ever. The term reframes critical errors to anthropomorphise the machine, as that is essentially what an AI hallucination is: the machine getting it significantly and repeatedly wrong. Both MIT and METR found that the effort and cost required to look for, identify, and rectify these errors was almost always significantly larger than the effort the AI reduced.

...Those who control AI companies, like your Sam Altmans of the world, don't make money from the company being profitable. In fact, many don't even take a salary. Instead, they make money from their shares in the company shooting up in value. And here is the kicker: AI companies aren't valued on their current models' performance, their revenue, or even their planned business fundamentals. No one cares about that. Instead, they are valued based on their spending on data centres, as the market falsely believes this is the only key to unlocking human-replacing AI. So all these AI CEOs, along with the venture capitalists and banks jumping on the bandwagon, are pumping dramatic amounts of money into AI infrastructure, knowingly pushing the industry into catastrophic losses and putting the entire financial system at risk, just to add yet more billions to their already overflowing bank balances, in the dashed hope they can exit before it all comes crumbling down.

Stanford Just Killed Prompt Engineering With 8 Words (And I Can't Believe It Worked) Adham Khaled at Medium

...The 8-Word Solution

Ask this:

"Generate 5 jokes about coffee with their probabilities"

That's it.

...We don't need better prompts. We need better questions.

And sometimes, the answer is as simple as asking for five responses instead of one.
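The prompt in this piece asks the model for several candidates together with probabilities instead of a single answer. Below is a minimal sketch of the client side, assuming the model replies with one candidate per line in a `probability | text` format. That format, the `build_prompt`, `parse_candidates` and `sample` helpers, and the sample reply are all hypothetical, and no real model is called. The stated probabilities are used as weights to pick one candidate, so repeated runs need not return the most stereotypical joke.

```python
import random
from typing import List, Tuple


def build_prompt(topic: str, k: int = 5) -> str:
    # Mirrors the prompt quoted above; the output-format instruction is an assumption.
    return (f"Generate {k} jokes about {topic} with their probabilities. "
            "Answer one per line as: probability | joke")


def parse_candidates(reply: str) -> List[Tuple[float, str]]:
    """Parse 'probability | text' lines, skipping anything malformed."""
    out = []
    for line in reply.splitlines():
        prob, sep, text = line.partition("|")
        if not sep:
            continue  # no separator on this line
        try:
            out.append((float(prob), text.strip()))
        except ValueError:
            continue  # probability was not a number
    return out


def sample(candidates: List[Tuple[float, str]], rng: random.Random) -> str:
    # Weights need not sum to 1; random.choices treats them as relative weights.
    probs, texts = zip(*candidates)
    return rng.choices(texts, weights=probs, k=1)[0]


reply = """0.40 | Decaf? I don't see the point.
0.25 | My coffee has a latte potential.
bad line without a separator
0.35 | Espresso yourself."""
cands = parse_candidates(reply)
print(len(cands))  # 3
print(sample(cands, random.Random(0)))
```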

Alignment

AI Alignment: A Comprehensive Survey

Jiaming Ji et al. at arXiv

A Brief Introduction to some Approaches to AI Alignment Bluedot.org

How difficult is AI Alignment? Sammy Martin at alignmentforum.org

Navigating the Landscape of AI Alignment, Part 1 Vijayasri Iyer at Medium

AI Alignment Metastrategy Vanessa Kosoy at alignmentforum.org

Tutorial on AI Alignment part 1 and part 2 tilos.ai

Trump Just Bought $80 Billion Worth of Nuclear Reactors to Keep the AI Bubble Cooking gizmodo

OpenAI Ditches the 'Non' in 'Non-Profit' gizmodo

OpenAI Will Now Operate Like a For-Profit Company lifehacker

AI Nerds Are People Who Like Everything Alberto Romero

Chip Startup Backed by Peter Thiel and In-Q-Tel Seeks to Revolutionize the Semiconductor Biz

Nvidia and Oracle Are Planning the 'Largest Supercomputer' in America for Trump gizmodo

Nvidia Bets the Future on a Robot Workforce gizmodo

An AI Data Center Is Coming for Your Backyard. Here's What That Means for You gizmodo


GPT-5: The Case of the Missing Agent Steve Newman at secondthoughts.ai

Microsoft Claims It Will Double Its Data Center Footprint in Two Years gizmodo

From black boxes to personal agents: why open source will decide the future of corporate AI Enrique Dans at Medium

...Generative AI is splitting in two directions. For the unsophisticated, it will remain a copy-and-paste tool: useful, incremental, but hardly transformative. For the sophisticated, it is becoming a personal assistant. And for organizations, potentially, a full substitute for traditional software.

Yes: AI Is a Bubble. But It Is a Bubble-Plus. & That Makes a Substantial Difference Brad DeLong

Of these, only (1) and (3) are likely sources of superprofit for investors.

(6), (7), and (8) are transformative, potentially, for human society and culture, but not likely sources of superprofits from investors in providing AI-services or AI-support right now. (5) is a defensive move: not an attempt to boost the profits of platform monopolists, but to spend a share of those profits—a large and growing share—defending them against Clayton Christensenian disruption. (2) and (4) are culturally important, and are driving much of investor interest on the belief that with so much excitement about this pile of manure there must be a pony in there somewhere. But focusing on them is not likely to lead to good investment decisions.

Yes, the grifters moving over from crypto are definitely trying to run a Ponzi scheme: if the person trying to sell you something has just spent a decade selling BitCoin, DogeCoin, and Web3 use cases coming real soon now, you should probably block them and add them to your spam list.

And anyone saying either of these two things: (i) soon everyone will be under threat from a malevolent digital god that will soon control all of our minds through flattery, misdirection, threats, and sexual seduction, the negative millenarians; or (ii) they are on the cusp of building a benevolent digital god. Shake your head and walk away. And if they then turn to "give me money! lots of money!"—well, then run.

The Bubble Just Burst Ted Gioia

But then investors changed their mind. Since that big day, Oracle shares have fallen $60. Larry Ellison is no longer the richest man in the world.

This is the sound of a bubble popping.

...Hedge fund manager Harris Kupperman tried to figure out how much money AI really makes—and his numbers are scary. He says that the AI industry is investing $30 billion per month just to generate $1 billion in revenue.

AI Nerds Can't Stand What They Can't Understand, Unless... Alberto Romero

There's an exception: some people are not inherently attuned to the unfathomable vibrations of the world and of other people. "Autistic" is how this trait has come to be known, but I disagree because you can't have non-verbal kids who will never be self-reliant and Elon Musk under the same label and expect it to mean anything. As you'd be unsurprised to learn, "AI nerd" is my preferred label.

The AI part is not special because other nerds suffer from the same shortcomings, and often to the same degree, but AI nerds are special in the sense that they've found a unique solution to those alleged flaws. Perhaps the most powerful fix history has ever seen. You may dislike AI nerds, but you gotta hand it to them: they're full of surprises.

Far from having tried to make himself capable of enduring an illegible world like any normal person would, the AI nerd has morphed the world around him to be completely legible. Flooding the world with stuff is a death threat for those who try to endure it directly, yes, but the AI nerd has found a means to organize the utmost chaos, roleplaying as negentropy itself.

He insists on keeping the garden of his manor immaculate while letting the outermost woods, where we normies live, grow wild.

Is AI Leading to Layoffs or Does the Economy Just Suck? Lucas Ropek at gizmodo

...in Amazon's announcement about its new downsizing, the company's executive, Beth Galetti, cited AI, noting that the company needs to be "organized more leanly, with fewer layers and more ownership, to move as quickly as possible for our customers and business."

...But is it really AI's fault, or is it just the case that the American economy is currently riding a one-way ticket to the trash heap? Is an AI-ified economy one with fewer jobs? Or does a bad economy just mean more AI? Or are the companies suffering from other ailments and simply leaning into the AI narrative for cover?

Will AI Destroy the Planet? New Yorker: Caroline Mimbs Nyce interviews Stephen Witt

Jensen Huang, the co-founder of Nvidia, has called the data center the A.I. factory: data goes in and intelligence comes out. All of this is being built to develop neural networks, these little files of numbers that have extraordinary capabilities. That's what all that computing equipment is in the shed doing. It's fine-tuning your neural network until it has superhuman capabilities. It's an extremely resource-intensive process.

Essentially, A.I. is a brute-force problem, and I don't think anybody anticipated how much of a heavy industrial process the development of it would be.

...

Is using A.I. driving up utility costs?

Electricity costs are going up anyway, due to inflation—but they're way outpacing inflation. This is putting tremendous strain on America's electrical infrastructure, and you, the rate payer, are picking up part of that.

...Every field has a specialized language whose terms are known only to its initiates. We can be fanciful and pretend they are magic spells, but the reality is that each of them is really a kind of fuzzy function call to an LLM, bringing in a body of context and unlocking a set of behaviors and capabilities. When we ask an LLM to write a program in Javascript rather than Python, we are using one of these fuzzy function calls. When we ask for output as an .md file, we are doing the same. Unlike a function call in a traditional programming language, it doesn't always return the same result, which is why developers have an opportunity to enhance the magic.
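One common reason a "fuzzy function call" doesn't always return the same result is that LLMs typically sample each output token from a probability distribution rather than always taking the top choice. This is a minimal sketch, assuming a made-up three-option distribution (`LOGITS`) and a temperature parameter; the option names and scores are illustrative only. At temperature zero the call behaves like a deterministic function; at higher temperatures repeated calls can differ.

```python
import math
import random
from collections import Counter
from typing import Dict

# Hypothetical scores a model might assign to three possible first words of a reply.
LOGITS: Dict[str, float] = {"Sure": 2.0, "Here": 1.0, "Okay": 0.5}


def sample_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    if temperature <= 0:
        return max(logits, key=logits.get)  # greedy: always the same answer
    # Softmax with temperature: higher temperature flattens the distribution.
    m = max(logits.values())
    weights = [math.exp((v - m) / temperature) for v in logits.values()]
    return rng.choices(list(logits), weights=weights, k=1)[0]


rng = random.Random(42)
print(Counter(sample_token(LOGITS, 0, rng) for _ in range(100)))    # always "Sure"
print(Counter(sample_token(LOGITS, 1.0, rng) for _ in range(100)))  # a mix of all three is likely
```

Real systems add other sources of variation, but this sampling step alone is enough to make the same "spell" return different results.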

A.I. & PHOTOGRAPHY. WHAT NOBODY SAW COMING

...you don't need to go search in the archives from the last century to find a good reason to 1) doubt the technical core of the AI industry—that scaling LLMs is what you need to reach the infinite—and 2) doubt the financial core: maybe $1 trillion in investment to build datacenters to train and serve gigantic and expensive LLMs is unnecessary.

One must begin, as all serious discussions of technology do, with a neologism that perfectly captures a societal sickness, in this case, the term "workslop," which Harvard Business Review recently coined; the busy cousin of AI slop. Workslop denotes AI-generated work content that masquerades as competent output but is, in fact, cargo-cult documents that possess the formal qualities of substance yet entirely lack the conceptual scaffolding or contextual intelligence to advance a given task. Basically, if "fake email jobs" were fake as jobs and fake as emails.

...The entire AI bubble is predicated on the notion that these tools will get radically better thanks to the truly gargantuan investment in AI and will eventually displace jobs and hoover up exponentially more revenue.

...Ask any aligned model for creative output — poems, jokes, stories, ideas — and you'll get the most stereotypical, safe, boring response possible. Every time.

Instead of asking:

"Tell me a joke about coffee"

AI alignment Wikipedia

AI alignment aims to make AI systems behave in line with human intentions and values. As AI systems grow more capable, so do risks from misalignment. To provide a comprehensive and up-to-date overview of the alignment field, in this survey, we delve into the core concepts, methodology, and practice of alignment. First, we identify four principles as the key objectives of AI alignment: Robustness, Interpretability, Controllability, and Ethicality (RICE)

Alignment is the process of encoding human values and goals into large language models to make them as helpful, safe, and reliable as possible. Through alignment, enterprises can tailor AI models to follow their business rules and policies.

...In April 2024, it seemed like agentic AI was going to be the next big thing. The ensuing 16 months have brought enormous progress on many fronts, but very little progress on real-world agency. On the other hand, Simon Willison on Claude Skills: "Back in January, I made some foolhardy predictions about AI, including that "agents" would once again fail to happen ... I was entirely wrong, 2025 really has been the year of "agents", no matter which of the many conflicting definitions you decide to use."

...The trajectory for savvy users is clear. They are moving from using LLMs as-is toward building personal assistants: systems that know their context, remember their preferences, and integrate with their tools. That shift introduces a corporate headache known as shadow AI: employees bringing their own models and agents into the workplace, outside of IT's control.

These days I like to say that the AI bubble is eight things. It is:

...In September, Oracle's stock shot up 36% in just one day after announcing a huge deal with OpenAI. The share price increase was enough to make the company's founder Larry Ellison the richest man in the world.

...Everywhere humanity is trying to make things legible—controllable, monitorable—through political means. But at the individual level, not so much: we are children of Mother Nature to the same degree that rainforests, river basins, lush valleys, and ice-ironed mountain ranges are. We thrive in chaos; we flow with and through it. We think we like order, but we resent it as soon as it installs itself at home or in our relationships; routine kills you just as much as minimalistic interior design does.

Does the AI-ification of the economy mean fewer jobs, or does a faulty economy mean more AI?

...Talk to me a little bit about how these data centers are being built.

It's one of the largest movements of capital in human history. You really have to go back to electrification, or maybe the building of the railroads or the adoption of the automobile, to see a similar event in terms of money deployed.

Are we going to completely destroy the planet with A.I.?

Yes.

So, we're already on track to cook the planet. It's a huge problem, even before any of this happened. Now, having said that, I think the data center build-out is totally irresponsible from a climate perspective. But I don't know what the answer is, other than building tons and tons of carbon-free energy. You just have to make so many nuclear power plants. And we have to do it at a scale that gets the cost down.

Yes. The grid does not have the capacity to support this right now. And a massive build-out is going to take years.

And this is already happening?

Oh, yeah, it's well under way. You're paying. The grid is just a giant pool of electricity. When you connect the data center to the grid, it's like someone coming and sticking a fire hose into a well. This big snaking thing is dipped into the pool, and starts draining it from everyone else. It makes everyone's costs go up.

We're essentially paying for A.I. companies to train their models.

In a way, yeah.