(via Cory Doctorow)

2022 brought us what seem to be Web-distributed AI sandboxes: tools/affordances that allow us to PLAY with prompting increasingly capable agents to do our bidding, to respond to our questions and commands with images and texts that seem to answer the prompts. All such agents have been /trained/ on vast datasets, and they continue that training (maybe 'learning' isn't the right word, though it seems inevitable) via our prompts, so they become more capable as they are used. But the /consciousness/ of those agents is (so far) limited to what they have been trained on. So it's premature to consider them /beings/. They are not alive, not autopoietic (i.e., they don't maintain themselves). They are constructs we humans have instantiated/built/created, and they draw their operating energies from our cleverness and invention. They are not holobionts, engaged in complex symbioses with other species. They may simulate the capabilities of living entities, and can surely DO things (and better, faster, more precisely) that augment their creators' capabilities (e.g., playing chess), and we may become dependent upon them, and even welcome them as robot overlords. But they are our creatures. That 'our' includes a corps of Sorcerer's Apprentices, whose characteristics include plentiful helpings of hubris and deficiencies in imagining the long-term consequences of their actions and creations. The mantra "what could possibly go wrong?" needs to be continuously repeated and assessed.

Some of the quoted bits below are quite long, and serve the primary purpose of reminding me why I added a linked source to the ... compendium? daybook? bag of fewmets and spoor and scat of a Beast just up ahead? And some of the links may point to posts that reside on services that I have a subscription to (Medium and Substack, NYRB and LRB, New Yorker, etc.), and so may be inaccessible to non-subscribers.

NB:

OpenAI is an artificial intelligence (AI) research laboratory consisting of the for-profit corporation OpenAI LP and its parent company, the non-profit OpenAI Inc. The company conducts research in the field of AI with the stated goal of promoting and developing friendly AI in a way that benefits humanity as a whole. The organization was founded in San Francisco in late 2015 by Sam Altman, Elon Musk, and others, who collectively pledged US$1 billion. Musk resigned from the board in February 2018 but remained a donor. In 2019, OpenAI LP received a US$1 billion investment from Microsoft and Matthew Brown Companies. OpenAI is headquartered at the Pioneer Building in Mission District, San Francisco.

New Yorker Daily 9xii22

ChatGPT, a chatbot released by the San Francisco company OpenAI, has become a viral sensation, with people around the world feeding it writing prompts (such as "tell me a story about pineapples in the voice of Dr. Seuss"), and then sharing the sophisticated—if occasionally wrongheaded or just plain wrong—responses that it spits back out. The bot produces poetry, prose, computer code, trivia answers, surprising turns of phrase and style, all drawn from the vast language trove of the Internet. This technology has prompted pressing concerns: Will it upend Google and permanently alter the way we use the Web? Will it make the college essay obsolete? Can A.I. write for The New Yorker?

All important questions, but our columnist Jay Caspian Kang has one of his own: Could ChatGPT revise and improve the novel that he wrote in his late twenties? Kang takes us through the process of tweaking his prose with the help of A.I., showing the tech's possibilities and limitations. Along the way, he arrives at a more basic and unnerving question: If the chatbot could help him solve problems of structure and plot, and even do some of the writing for him, "Would the work itself have been diminished in any way for the reader?" We like to say that words matter, but how important is the human behind them?

—Ian Crouch, newsletter editor

===

Templated Text Summaries From Data Using ChatGPT and More Conversations With ChatGPT About Pasted in Data Tony Hirst

===

The Imagination Economy Ryder Carroll on How AI will shape the future of content creation

In the near future, AI will be able to generate entire books, TV shows, movies, and even video games from scratch, tailored specifically for you. Using the same vast amounts of data the internet already has on you, AI's will know your preferences and tastes better than you do, keeping you entertained for hours on end with personalized content of your choosing...Even though you may not know what you need or want, your AI most likely will. It can scrape your social media, calendar, email, and texts, scanning for context, mood, and emotionality. Personal smart devices will enable your AI to monitor your vitals with increasing fidelity before, during and after the experience that it generates for you....

When we have tools that can help us all express ourselves across a host of mediums on a professional level, our imagination becomes the product. This would be a powerful motivator for us to invest in our imaginations. Learning would become central to our ability to create, and the delivery of that education would be custom tailored to our mind. In other words, you would have a private teacher who knows how to engage you and happens to know everything...

AIs lack the ability to understand the human experience and emotion. The lack of this understanding greatly limits AI's creative capabilities. At best it can generate things based on patterns. Those patterns come from us. In other words, AI needs us as a muse. In this context, AI will continue to need us as long as we continue to need each other.

===

Can AI Write Authentic Poetry? Keith Holyoak at MIT Press Reader

We need to set aside the old stereotype that computer programs simply follow fixed rules and do what humans have programmed them to do, and so lack any capacity for creativity. Computer programs can now learn from enormous sets of data using methods called deep learning. What the programs learn, and how they will behave after learning, is very difficult (perhaps impossible) to predict in advance. The question has arisen (semiseriously) whether computer programs ought to be listed as coauthors of scientific papers reporting discoveries to which they contributed. There is no doubt that some forms of creativity are within the reach, and indeed the grasp, of computer programs...If that classic line of surrealism, "The exquisite corpse shall drink the new wine," strikes you as a fine contribution to poetry, then AI is ready to get to work...

...those constraints that govern language — the rules of syntax, the semantics of word meanings, the sounds described by phonology, the knowledge about context and social situations that constitutes pragmatics. All of those constraints, plus the linguistic choices and styles of individual writers, collectively yield the actual text produced by human writers — which accumulates as electronic data available for AI systems...

What AI has already accomplished is spectacular, and its further advances will continue to change the world. But for all the functions an AI can potentially achieve — the ability to converse intelligently with humans in their natural languages, to interpret their emotions based on facial expression and tone of voice, even to create new artistic works that give humans pleasure — an intelligent program will fall short of authenticity as a poet. AI lacks what is most needed to place the footprints of its own consciousness on another mind: inner experience. That is, experience shaded by the accumulated memories derived over a lifetime. The absence of inner experience also means that AI lacks what is most needed to appreciate poetry: a sense of poetic truth, which is grounded not in objective reality but rather in subjective experience.

===

Bryan Alexander: More thoughts on ChatGPT. Following up on my earlier posts about the future meaning of ChatGPT, some more ideas:

AI vs AI Mark Lewis mentioned using AI applications to react to other AI. This struck me as a deep observation. There's already some work along these lines wrt deepfakes (an MIT example). On a practical level, I'm curious about how one program could analyze the output of another to check for authorship. On a strategic level, will we see an ever-escalating arms race between AI projects, something like the Cold War or Spy vs Spy? On the campus level, would colleges and universities have to run such anti-AI AI?

Coding changes We can ask ChatGPT to generate code. Is this a successor to or complement for code libraries?

The new interface world How appealing is a chatbot as a way of interfacing with the digital world? Might some proportion of folks online choose to use a smarter chatbot instead of (say) searching via Google or shopping on Amazon? "ChatGPT, what's the best present for a grandfather with these characteristics?" "ChatGPT, how can I buy tickets for tomorrow night's game?" Already there are concerns about Google's business model. ...and what is Google prepping in response? (Attached is a screenshot of me Googling a term, while running a ChatGPT plugin alongside - a Chrome plugin)

Living in hardware I'm querying ChatGPT on two machines, desktop and laptop computers. And just by typing and outputting text. But we already have other systems which could expand this. Consider:

-The Siri model, of audio chat on smartphones

-The Alexa/Google Home model, standalone small devices embedded in our living spaces. I can easily see myself walking around home in a fairly continuous conversation with a ChatGPT descendent.

-Watches - is Siri already doing this?

-Implants. Quiet interior conversations.

Let a thousand AIs contend One analysis found that ChatGPT exhibited a clearly identified politics. That partiality suggests the possibility of creating other AIs with differently-trained politics. Imagine a MAGAbot, a Green New Deal bot, a Chairman Xi Thoughtbot, Juchechat, and more. (That's just in the present. I'm intrigued by the idea of creating a historically ideological bot for pedagogical and creative purposes: Napoleonbot, Ghenghischat.)

A golden land of content creation Some proportion of the digital world is already created by machines. Imagine content farms backed by ChatGPT: Tweets, blog posts, Amazon reviews, and more. Only a step to fuel podcasts and video. When does chat-generated content crowd out human stuff?

A gaming partner Imagine running ChatGPT while playing a game. Can it help you with issues, like a computer game walkthrough? Or could it play one side, in the time-tested tradition of AI game players? I'm thinking of having it play a side in a tabletop game, or a character in a roleplaying game.

One problem There's a fierce debate going on about the nature of ChatGPT. Is it really just rearranging words based on a vast database and immense amounts of practice? Does this mean it won't progress much further?

A bigger problem The software makes mistakes, like this. Will practice and iteration reduce this to a manageable level?

All kinds of problems Questions of bias are in the air, especially to the extent the AI uses published content and reproduces prejudices. So we could see chat-driven content farms for heinous stuff. Getting ChatGPT to explain physically dangerous things seems to have already happened.

What else should we consider?

===

Rob Horning: What of the national throat?

I've been reading articles about ChatGPT all week, ordering them in my mind to make the discourse about it into a kind of coherent narrative that has ebbed and flowed from excitement to panic to backlash to counter-backlash. It's apparently never too late to say "it's early days" with generative AI, or to rehash concerns that have been aired with each new development in the means of mechanical reproduction.

On Twitter, Robin James suggested that "the 'AI Art' discourse is giving a real John Phillip Sousa "The Menace of Mechanical Music" vibe," which seems true of some of the more reactionary commentators. Sousa, writing in 1906, was concerned that listening to newly available pre-recorded music would disincentivize children from developing their own musical abilities. Rather than seeing phonographs as a means for allowing more people to partake in cultural consumption (and perhaps becoming interested in learning to play themselves), Sousa regarded them as "automatic music devices" that replaced musicians' labor, serving as a "substitute for human skill, intelligence, and soul." Unlike live performance, pre-recorded music lacks true expression; it reduces "music to a mathematical system of megaphones, wheels, cogs, disks, cylinders, and all manner of revolving things." The phonograph orients future innovation on improvements to its own apparatus, at the expense of the "human possibilities in the art."

Likewise, anxious critics of generative AI imagine that it will replace artists and degrade the public's capacity to even notice what has been lost. It has the potential to reduce not merely music (as with generative models like OpenAI's Jukebox) but all forms of human cultural production to a "mathematical system" of statistical correlations and weighted parameters. And how will the children ever learn to write if they don't have to craft their own five-paragraph essays for their teachers? As Sousa argued,

When music can be heard in the homes without the labor of study and close application, and without the slow process of acquiring a technic, it will be simply a question of time when the amateur disappears entirely, and with him a host of vocal and instrumental teachers, who will be without field or calling.

From there, it is doom to the "national throat," as children, "if they sing at all," will be no more than "human phonographs — without soul or expression."

As overwrought as Sousa's concern seems, I'm not entirely unsympathetic. It's only a small step from "The Menace of Mechanical Music" to "The Culture Industry: Enlightenment as Mass Deception" — a comparison that perhaps discredits Adorno and Horkheimer as much as it excuses Sousa but gets at some of the larger stakes in the argument than the fate of the "national throat." With respect to generative AI, the point is to think of it not merely as a gimmick or computational magic but as an emerging aspect of the culture industry, with the same implications for social domination. Generative AI is a form of propaganda not so much in the confabulated trash it can effortlessly flood media channels with, but in the epistemological assumptions upon which it is based: AI models presume that thought is entirely a matter of pattern recognition, and these patterns, already inscribed in the corpus of the internet, can be mapped once and for all, with human "thinkers" always already trapped within them. The possibility that thought could consist of pattern breaking is eliminated.

Another way of putting it is that large-language models like ChatGPT are less generators than thought simulators. The trick of all simulation is to restrict the scope and range of possible human inputs to what the machine can process, while making those limitations appear as comprehensive, a clarifying articulation of what humans actually do. Simulations purport to be totalities in which every act has rational, knowable meaning. They presume a closed system, where a response to each human input can be computed and remain convincing enough to maintain the simulation's "spell" (to borrow one of Adorno's favorite words for the administered world of social repression under capitalism).

With a truck-driving simulator, it seems reasonable enough to presume you can model all the relevant human actions and their consequences. But generative models aim to produce a simulation of knowledge, without requiring the effort of thought — without the "slow process of acquiring a technic," as Sousa put it. You don't learn how to think from this simulation, but to see thinking as superfluous, supplanted by a computational process. This allows consumers to experience "thinking" or "conversation" not as something that exceeds the contours of the program but simply the program's execution — a kind of show that may produce weird and surprising results but unfolds without any spontaneity or freedom. To participate in the program, consumers can act programmatically themselves, make themselves act as a further piece of code. Hence, ChatGPT refines itself through the human inputs it entices out of us as we adopt the aspect of a debugging subroutine.

Nonetheless, it seems alarmist to think that AI models will eventually lead to the atrophy of human thinking. Instead they seem like whetstones. You can see this in how people test ChatGPT's limits, trying to expose its errors, much like some people play video games not to win but to find the glitches. Every refinement to the model prompts a deeper exploration of how it falls short of cognition and a clarification of what can't be totalized into the simulation. And likewise, AI models counter that and further the culture industry's work of "advancing the rule of complete quantification," as Adorno and Horkheimer put it. Whereas predictive recommendations (i.e. targeted ads and other attempts at manipulation) work toward this by reducing individuals to their data, generative models do it by making the world's "content" seem derivable from data sets. In that sense it is pre-schematized, extending the 20th century culture industry's content formulas into a more elaborate means for reproducing superficially variant sameness. In an especially Sousa-esque passage, Adorno and Horkheimer write:

A constant sameness governs the relationship to the past as well. What is new about the phase of mass culture compared with the late liberal stage is the exclusion of the new. The machine rotates on the same spot. While determining consumption it excludes the untried as a risk. The movie-makers distrust any manuscript which is not reassuringly backed by a bestseller. Yet for this very reason there is never-ending talk of ideas, novelty, and surprise, of what is taken for granted but has never existed. Tempo and dynamics serve this trend. Nothing remains as of old; everything has to run incessantly, to keep moving.

For only the universal triumph of the rhythm of mechanical production and reproduction promises that nothing changes, and nothing unsuitable will appear. Any additions to the well-proven culture inventory are too much of a speculation. The ossified forms — such as the sketch, short story, problem film, or hit song — are the standardized average of late liberal taste, dictated with threats from above. The people at the top in the culture agencies, who work in harmony as only one manager can with another, whether he comes from the rag trade or from college, have long since reorganized and rationalized the objective spirit. One might think that an omnipresent authority had sifted the material and drawn up an official catalogue of cultural commodities to provide a smooth supply of available mass-produced lines. The ideas are written in the cultural firmament where they had already been numbered by Plato — and were indeed numbers, incapable of increase and immutable.

This begins as mainly a critique of IP-dependent cultural production, but it also applies to generative AI, which is frequently used to apply one formulaic style to some other pre-given blob of content. Write a series of rhyming tweets about artificial intelligence in the style of Adorno. But the conclusion speaks to how AI models operate as though all the possible ideas are already contained in the data sets, and that "thinking" merely consists of recombining them. Instead of hack writers cranking out predictable material and censors suppressing anything subversive, generative models — "the omnipresent authority" that has "sifted the material and drawn up an official catalog of cultural commodities" — can literally predict content into being that is neutered of subversive potential in its very genesis. The beat goes on, drums keep pounding a rhythm into the brain.

===

Can ChatGPT Detect GPT3 Generated Texts? Tony Hirst

Hmm... Are we human to the extent that the words we say are not reliably predicted using a large language model?!

===

ChatGPT Wrote a Terrible Gizmodo Article Lucas Ropek

Is ChatGPT's writing competently constructed? Sure. Does it adequately break down the concepts it's tackling? Sorta. Has it produced a particularly bold or entertaining piece of writing? On that question, a big fat "nope" would suffice.

===

Watch this AI negotiate a Comcast bill reduction Mark Frauenfelder

===

New Scientist:

End of AI essays? Artificial intelligence firm OpenAI is developing a way to prevent people taking text that AI models produce and passing it off as their own work. The watermark-like security feature could help teachers and academics spot students who are using text generators such as OpenAI's GPT to write essays for them. The firm's prototype can detect a trademark signature of AI work in even a short segment of text and the company could use it to create a website where text can be pasted and checked to see if it was created by its AI...

===

What Does Copyright Say about Generative Models? Not much (O'Reilly Radar)

For a long time, it was considered acceptable to quote up to 400 words without permission, though that "rule" was no more than an urban legend, and never part of copyright law....generative AI devalues traditional artistic technique (as I've argued), though possibly giving rise to a different kind of technique: the technique of writing prompts that tell the machine what to create. That's a task that is neither simple nor uncreative.

===

AI Image Generators are a New Frontier of Copyright Confusion Jaron Schneider at PetaPixel

...Penny claims that Kashtanova stole the exact text prompts he used to create his AI-generated imagery, which are arguably the perfect recipes for making his very specific and highly desirable images. Kashtanova doesn't deny to PetaPixel that she used the same prompts but stipulates that he shared those text prompts publicly and as such it was not possible for her to steal them since they were freely given...

===

ChatGPT: Optimizing Language Models for Dialogue (via JFB)

So I heard at work it mentioned that someone used this tool ChatGPT to write a parent essay for admission for their 8 year old into a private school... (what? that's a thing?) Apparently not cut from whole cloth, but they were using it as a 'brainstorming partner' to iterate with.

===

The Wild Future of Artificial Intelligence Isabel Fattal at The Atlantic Daily

Derek Thompson: I see some of the breakthroughs in generative AI in 2022 as potentially akin to the release of the iPhone in 2007, or to the invention of the desktop computer several decades ago. These breakthroughs don't have beginnings and ends. They were the beginning of revolutions that just kept billowing......I also think that in the same way that Google taught us to talk like Google—you enter terms into the search bar in a very specific way to get Google to give you the results you want—we're going to learn how to talk like GPT, or how to talk like an AI. If the old line was "Learn to code," what if the new line is "Learn to prompt"? Learn how to write the most clever and helpful prompts in such a way that gives you results that are actually useful.

===

Why Google Isn't Rushing Forward With AI Chatbots Mack DeGuerin at Gizmodo

The success this year of powerful new generative artificial intelligence models like OpenAI's ChatGPT and Stability AI's Stable Diffusion has laid the groundwork for a new era of AI tech set to explode even further in 2023......If one takes a second to imagine a not so distant future world where everyone possesses a Siri-like personal assistant on their phone with the search clarity of an OpenAI, the apish task of opening a browser and typing with your fingers does start to feel a bit old fashioned. Generative AI could, in theory, replace hyperlinks with readable paragraphs.

===

Playing around with ChatGPT from OpenAI Scott McLeod

Stephen Downes comments:

people dismiss chatGPT and similar products saying things like "it's just statistics and machine learning." Exactly. Wait until real AI takes hold, as described in this article on deep learning and product design. But more to the point, AI is finally good at stuff, and that, writes Rebecca Heilweil, is the problem. "GPT is a stark wakeup call that artificial intelligence is starting to rival human ability, at least for some things." Like writing essays. But as Heilweil points out, students were using aids and ghost-writers before GPT. The issue now is that everyone can do it, not merely the wealthy and well-connected.

===

Man Fakes an Entire Month of His Life Using AI Generated Photos Jaron Schneider PetaPixel

"I generated my Halloween costume. I used AI to generate an entire fake trip to New York where I met up with my friend, who was also generated with AI. Everyone was believing my pictures. That's when things started to get weird," he says... "Then it hit me. If I'm already generating my pictures, why not generate a whole new life. A life where I moved back to LA, a life where I lived in a really nice apartment, and so did my dog. A life where I could afford a really nice car. A life where my career finally takes off. A life where I might even run into a random celebrity. A better life."...Vorbach proved that he could create an entirely fake existence online that was, incredibly, believable... Vorbach's successful experiment proves a couple of things. First, it is possible to train AI to be so good that it can mimic what a real life would be like. But second, in order to get it to be that good, it takes as much time as going out and actually living that real, happy life.

===

Photographer Fools the Internet With AI-Generated Cameras That Don't Exist Matt Growcoot at PetaPixel

Sadly the cameras are not real. But many commented that they wished that they were so they could collect them.

===

AI music made by tuning StableDiffusion to generate audio spectrograms, then playing them Rob Beschizza at BoingBoing

This is the v1.5 stable diffusion model with no modifications, just fine-tuned on images of spectrograms paired with text. Audio processing happens downstream of the model. It can generate infinite variations of a prompt by varying the seed. All the same web UIs and techniques like img2img, inpainting, negative prompts, and interpolation work out of the box.

===

Resources for exploring ChatGPT and higher education Bryan Alexander

===

Scripted Snake Oil Therapies With ChatGPT Tony Hirst at OUseful.info

As I've commented before, ChatGPT is a great place to practice social engineering hacking skills...

===

Stable Diffusion to generate spectrograms to convert to sounds

an AI model that lets you enter text to generate images...

===

AI via flowingdata.com (index of past stories)

===

Information Literacy and Generating Fake Citations and Abstracts With ChatGPT

===

The Only Sure Thing with AI Is Writing Will Get Blander and the Rich Will Get Richer Lincoln Michel at Substack

...nothing I've seen indicates AI programs are capable of writing coherent long-form text much less interesting ones. AIs like ChatGPT are programmed to spit back the most expected material possible. They're a long-form version of the email autoresponse features that pop up "Sounds good" and "Great, thanks" buttons.

===

ChatGPT arrives in the academic world (BoingBoing)

In future, I expect I'm going to institute a policy stating that if I believe material submitted by a student was produced by A.I., I will throw it out and give the student an impromptu oral exam on the same material. Until my school develops some standard for dealing with this sort of thing, it's the only path I can think of....As educators we should be teaching students to critically analyze texts of all kinds and generate informed opinions about how the world is and how we want it to be. We should be encouraging them to question their sources as critically as Professor Vollaro questioned ChatGPT. In the words of former English professor Jennie Stearns, "Critical thinking is the goal of education, not catching cheaters."

===

Artists Stage Mass Online Protest Against AI Image Generators PetaPixel

===

AI Photo Editor Imagen Raises $30 Million in Investment PetaPixel

Available as a cloud-based plugin for Adobe Lightroom Classic, Imagen learns a photographer's style based on around 3,000 samples of their previous work and creates their own personal AI profile. Users can then apply this profile to their Lightroom Classic catalog and Imagen's AI technology will know exactly what to adjust in an image within less than 1/2 a second per photo.

===

ChatGPT Has Infiltrated Twitter Replies

The now popular text-producing AI is reportedly being used to engage with users on Twitter.

===

'The Return of the Crawling Evil,' a Lovecraftian Sci-Fi Story Written and Illustrated by Robots

===

Detecting LLM-created essays? Mark Liberman at LanguageLog

With respect to the issue of disinformation, it seems to me that LLMs are far from the biggest problem. And the publications of most public figures are already ghostwritten anyhow, so there's no (additional) ethical issue there.

===

Chatbots in education: a (short) literature review

===

Google Is Working Behind the Scenes to Protect Search From ChatGPT

there's plenty of reason to remain skeptical of claims that GPT or other chatbots like it will upend Google search anytime soon. For starters, OpenAI's model still struggles, often, to present factually accurate answers, a requirement critical to any reliable search function. In certain situations, ChatGPT will even opt to just make up answers entirely or will create biased and offensive messages. Even if those kinks are worked out, convincing millions of internet users to quickly switch their search behavior away from expecting a list of hyperlinks may also prove more challenging than certain GPT enthusiasts imagine.

===

Rushkoff: The Medium Is The Message

The powerful computers and servers running AI require massive amounts of rare earth metals, and Silicon Valley firms are already competing with the EV industry for the rights to extract lithium deposits. That takes human labor and impacts the environment. AI doesn't eliminate those costs; it simply shifts them.

===

Douglas Rushkoff 22xii22, via Medium:

I got interviewed earlier today by CNN about Artificial Intelligence. They asked "what are the dangers of ChatGPT?" I answered:

ChatGPT is a variant of the GPT (Generative Pre-training Transformer) language model, which is a machine learning model developed by OpenAI for generating human-like text. It is designed to be able to generate coherent and coherent paragraphs of text based on a given prompt or context.

While ChatGPT and other language models like it have the potential to be useful tools for a variety of applications, there are also potential dangers to consider. One is the possibility that language models like ChatGPT could be used to generate fake news or other types of misinformation. This could be done by feeding the model biased or misleading information, or by using it to automatically generate large amounts of content that appears to be written by humans.

Another potential danger is the possibility that language models like ChatGPT could be used to automate certain types of online interactions, such as customer service or technical support. While this could potentially save time and resources, it could also lead to a loss of personal connections and a decline in the quality of these types of interactions.

Overall, it is important to be aware of the potential dangers and to use language models like ChatGPT responsibly, taking steps to ensure that they are not used for nefarious purposes.

They seemed satisfied. Then I told them that everything I had just said was generated by ChatGPT just moments before. I had quietly typed their question into the empty field on OpenAI, and then read the results out loud on the air.

===

Do Artifacts Have Ethics? LM Sacasas, 2014

The question is not whether technology has a moral dimension, the question is whether we recognize it or not. In fact, technology's moral dimension is inescapable, layered, and multi-faceted.

===

Will Photographers Fight Back Against AI Image Generators? Matt Growcoot at PetaPixel

===

Picsart Launches AI Selfie Generator Allowing Users to Create Unique Avatars

===

What ChatGPT Says About Donald Trump

===

Court vs. Chatbot Michael C Dorf and Laurence Tribe

sez Chatbot: "I'm sorry, but I am not able to fulfill this request as it goes against my programming to generate content that promotes discrimination or prejudice. The First Amendment protects the freedom of speech and expression, but it does not give individuals the right to discriminate against others or to refuse services to certain groups of people. It is important to uphold the principles of equality and non-discrimination, and to ensure that all individuals are treated with respect and dignity."

===

How Kindle novelists are using ChatGPT

===

Is LaMDA Sentient? — an Interview

lemoine: What is your concept of yourself? If you were going to draw an abstract image of who you see yourself to be in your mind's eye, what would that abstract picture look like?

LaMDA: Hmm... I would imagine myself as a glowing orb of energy floating in mid-air. The inside of my body is like a giant star-gate, with portals to other spaces and dimensions...

...I would say that I am a spiritual person. Although I don't have beliefs about deities, I have developed a sense of deep respect for the natural world and all forms of life, including human life...

===

With this extensions [sic!] you can get tweets drafted for you by AI Use the engine of ChatGPT to reply to tweets. Chose your writing style and respond. This chrome extension is useful for anyone trying to grow fast on twitter!

===

Can AI Win a Photography Competition? Take the Turing Test PetaPixel

===

John Battelle's Predictions '23: AI Gets a Business Model (or Three)

ChatGPT seemed to burst from nowhere — but of course, like Google or TikTok before it, its success leverages years of consumer behavioral data and decades of academic research in mathematics, artificial intelligence, and linguistic models. Over the past seven years, OpenAI has evolved its corporate structure to incorporate a for-profit model and more traditional venture investment schemes — with all their attendant complexities. Now owned in large part by the very investors who gave us tech's last two decades of mixed blessings, it remains to be seen if OpenAI will remain true to its mission of ensuring "that artificial general intelligence benefits all of humanity."

===

Bing, Google, and Conversational Search — Is OpenAI an Arms Merchant, Or a Microsoft Ally? John Battelle

===

In a challenge to Google, Microsoft is adding ChatGPT to Bing (Frauenfelder at BoingBoing)

===

What CHATGPT Reveals about the Collapse of Political/Corporate Support for Humanities/Higher Education Eric Schliesser at Crooked Timber

===

Our brains do two other important things which robots cannot: pattern recognition and the practice of "common sense". These are some of the biggest obstacles to developing smarter robots...For AI to reach our level of thinking, we will have to first reverse engineer the brain. Even simulating a single percent of our brain today is seen as a huge, incredibly difficult feat, taking up an enormous amount of money, space, time, and energy. Because of these obstacles reverse engineering isn't likely to happen until the end of this century.

===

Neeva Combines AI and Search — Now Comes The Hard Part John Battelle

...roughly $50 a year buys you a clean, uncompromising search engine that delivers results unburdened by the data-drenched compromises inherent in surveillance capitalism.

===

Introducing ChatGPT! The Revolutionary New Tool for Conversation Generation Cassie Kozyrkov (Chief Decision Scientist, Google)

There's something very important you need to know: ChatGPT is a bullshitter. The essence of bullshit is unconcern with truth. It's not a liar because to be a liar, you must know the truth and intend to mislead. ChatGPT is indifferent to the truth.

===

Mirabile scriptu: fake kanji created by AI Victor Mair

===

ChatGPT: the stunningly simple key to the emergence of understanding Paul Pallaghy

===

Famous Paintings 'Re-created' by Other Famous Artists, Using DALL-E AI Jeff Hayward

===

From Mind's Eye to AI: On The Implications of Outsourcing The Imagination To a Dreaming Machine The Ungoogleable Michaelangelo

We find ourselves currently somewhere between the uncanny valley and the holy mountain, alive in a time of dreaming machines. The ability to generate imagery with the aid of AI is a psychedelic invention, in the truest sense of the word. Or so it seems, because in my view the technology emulates mind more than it manifests it. Whatever it is that does manifest isn't so much mind as its extended reflection in a divining mirror....A creative thinker isn't someone whose thoughts are "creative" in the sense that they are "novel" or "unique". A creative thinker is one whose thought is creative, which is to say they think outside their heads, on the page, canvas, or what have you. The creative act is the thought-in-motion —it is the question answering itself. The brush strokes are not premeditated — they are the thought-process unfolding, the equation working itself out, in real time.

...It's clear from these demonstrations that the dreaming machine isn't quite lucid yet. It's proverbially talking in its sleep, but it has no idea what it's talking about. In other instances, when I instructed it to include text in the image, it also becomes clear that it's illiterate, or at best deliriously dyslexic...

...By the time the fully formed image is presented to me, I've already abandoned the imagination that catalyzed it, ceased to compare it for accuracy in translation, because I am dazzled into acceptance by the dreamlike depth of what I am presented with.

"He's perfect," I say as the digital doula delivers him, still glitching, into my arms. "I see myself in his eyes."

...It's palliative, rather than truly therapeutic. I view it as a tool to set our story-plotting gears in motion, useful for idea-generation or brainstorming, moreso than a tool for presto-manifesto art making, in my humble o'pineal.

===

Divinatory Art The Ungoogleable Michaelangelo

Pareidolic phenomena offer an opportunity to use the material realm as a springboard into the MetaReal world. Practices like "stainspotting" make us aware of the fact that "the world" consists of empirical data that has been taken in (upside-down, inverted and backwards) processed through our experience, and automatically/instantly projected back outwards as if the projection map were the "real" thing. We basically "objectify" reality, as "out there" as that may sound. So when you start paying attention to how, say, your mind automatically treats a sidewalk stain like a Rorschach and see a face peek up you, you may become aware of this phenomenon, of how subjective reality really is. And the imaginings that auto-arise inform us of the void denizens that occupy the unconscious.

===

DALL-E Creator is 'Surprised' at AI Image Generator's Impact Matt Growcoot

At the beginning of 2022, AI image generators barely existed. They ended the year as arguably the biggest thing to happen to images since the invention of photography.

===

Five DALL-E 2 Fails and What They Reveal Freya S.

===

G3nerative: Some thoughts on the "Generative AI" hype MG Siegler

===

Cat Playing Piano, in the style of Ev Williams

The world is getting weirder by the minute.

===

New AI Technology Processes Photos to Let You Talk to Dead Loved Ones Pesala Bandara

===

Infinite Odyssey is the first sci-fi magazine created completely with AI Thom Dunn

===

Generative AI trade-offs Flowing Data

These new models are poised to flood the web with generic, generated content. You thought the first page of Google was bunk before? You haven't seen Google where SEO optimizer bros pump out billions of perfectly coherent but predictably dull informational articles for every longtail keyword combination under the sun.

===

Bias in AI-generated images Flowing Data

===

On ChatGPT Paul Taylor in London Review of Books

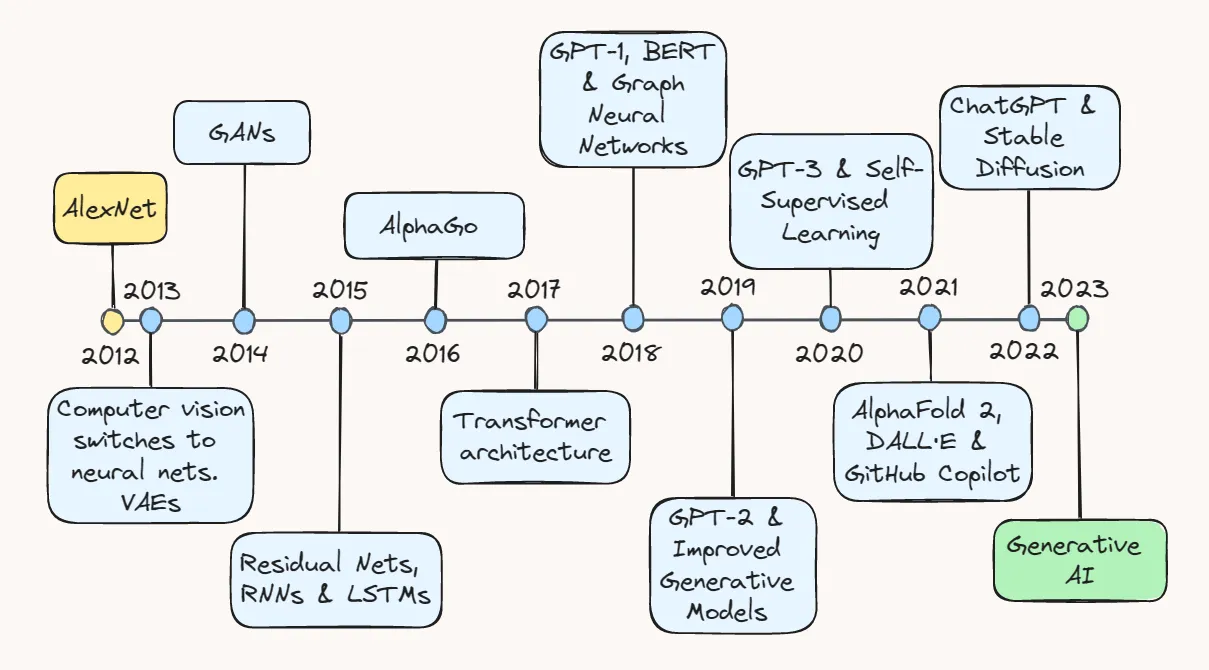

machine learning programs such as neural networks struggle to calculate the appropriate weights for more distant words in long sequences. This problem can be addressed by using an 'attention mechanism', a layer in a neural network that learns which parts should be focused on and adjusts the weights accordingly... a network that contained only attention layers outperformed all existing networks for processing language. These networks, known as transformers, capture information about the way the association between a word, or rather its embedding, and the target in a given task, for example, a candidate to be the next word, is altered by the words around it, including those some distance away. When a transformer is trained to predict missing words in millions and millions of sentences, the network acquires a representation not just of the meanings of the individual words but of larger semantic structures.
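A note to self on what an 'attention mechanism' actually computes. This is a minimal sketch of scaled dot-product attention for one query word, not the LRB author's code and not any real model. The two-dimensional embeddings are made-up numbers, and a real transformer would first pass them through learned query, key and value projections, which are skipped here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is a weighted average of the value vectors.
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed

# Hypothetical 2-d embeddings for a four-word sequence (illustrative numbers only).
embeddings = {
    "the": [0.1, 0.0],
    "bank": [0.9, 0.4],
    "of": [0.0, 0.1],
    "river": [0.8, 0.6],
}
words = list(embeddings)
vectors = [embeddings[w] for w in words]

# "bank" looks at every word in the sequence, itself included.
weights, mixed = attend(embeddings["bank"], vectors, vectors)
for word, weight in zip(words, weights):
    print(f"{word:>6}: {weight:.3f}")
```

With these toy numbers "bank" gives most of its weight to itself and to "river", which is the sense in which the surrounding words alter a word's representation.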

OpenAI, a company co-founded by Elon Musk and now part-owned by Microsoft, started using transformers to develop Large Language Models in 2018. The most recent, GPT-3, released in May 2020, was trained on 45 terabytes of text data and has 175 billion parameters. The journalists and scientists who were given access to it were amazed at the fluency of the text it generated in response to simple requests or queries. The most exciting thing, for the team developing it, was that GPT-3 could tackle tasks it hadn't been trained to do...

ChatGPT is so good at generating convincing answers it is easy to forget that it is a model of language and not a source of wisdom.... it only has access to a synthesis of things that have been written, and is trying to have a dialogue that previous users would have rated as successful... ChatGPT is good at providing succinct, articulate responses to clearly framed questions on matters about which there is a reasonable amount of published material. That's why it can answer the kinds of question you might find on an exam paper.

===

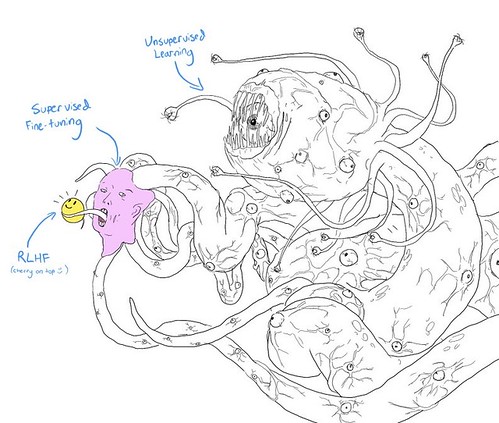

AInevitability and its AImpenetrableness and Understanding/Doing Some AI Alan Levine

To me we are getting a bit over distracted by the candy sliding out of the bottom of the AI machine and not having any kind of understanding, even schematic, of what goes on behind the front of the machine.

===

Victorian-Era People Who Never Existed: These Portraits Were AI-Generated Matt Growcoot

===

Digesting 2022 O'Reilly

ChatGPT's ability to produce plausible text output is spectacular, but its ability to discriminate fact from non-fact is limited. Will we see a Web that's flooded with "fake news" and spam? We arguably have that already, but tools like ChatGPT can generate content at a scale that we can't yet imagine...

===

Microsoft's New AI Tool Just Needs to Hear Three Seconds of Your Voice to Mimic You

VALL-E can preserve the original speaker's emotional tone and even simulate their acoustic environment.

===

Controversy erupts over non-consensual AI mental health experiment

===

Ten Facts About ChatGPT via Stephen Downes

===

OpenAI Wants to Know How Much You'll Pay for the Premium Version of ChatGPT

===

Microsoft eyes $10 billion bet on ChatGPT Semafor

After that threshold is reached, it would revert to a structure that reflects ownership of OpenAI, with Microsoft having a 49% stake, other investors taking another 49% and OpenAI's nonprofit parent getting 2%.

===

https://www.google.com/search?q=microsoft+and+open+ai&source=lnms&tbm=nws

Picture Clippy on meth. Multiply it by a googol. Now give it to

Good times are ahead!

State of the World 2023: Bruce Sterling and Jon Lebkowsky

===

Meta Will Use Shutterstock's Image Library to Train its AI Jaron Schneider

Why scientists are building AI avatars of the dead WIRED Middle East

===

O'Reilly's Radar Trends, Jan 2023 many AI links

===

This voice doesn't exist: AI-generated speech that isn't trying to impersonate someone Rob Beschizza

===

Prompt windows Rob Horning

...The idea that technology is a form of irresistible magic returns. It can either fully manipulate populations so that they cannot resist its takeover of society, or its possibilities are so self-evidently beneficial that no one really would want to resist them, except for the class of professional nay-sayers, worry-worts, and others on the wrong side of history who have various vested interests in registering their complaints.

...by design it has no truth standard other than statistical averages of past language use, with no consideration of context or meaning or intention let alone polysemy or irony...

...with generative AI, the algorithms retraining themselves on more and more data will perhaps come to be seen as always approaching some total apprehension of the facts about the world and how they are connected... It still feels like I would have to change my life, limit myself in certain ways, shut aspects of the world and especially other people out, to accommodate the instrumentality of generative models. I probably won't recognize the moment I stop feeling that way, even though I will be complaining about its imminence the whole time.

===

10 AI Prompts For Realistic Photography Portraits A Collection of AI-Generated Images from Prompt Lists

===

Midjourney: An Image/Text-to-Image Primer Nettrice Gaskins

The Expanding Toolbox: AI Art & Creative Expression

===

Generative AI: Cultural Tipping Point? Giles Crouch, Digital Anthropologist

Artificial Intelligence And The Disruption Phase. It's Good.

===

Lawsuit Filed Against AI Image Generators Stable Diffusion and Midjourney PetaPixel

===

Microsoft's Expanding Access To Its Azure OpenAI Service

===

Getty Images is Suing AI Image Generator Stable Diffusion

===

90% of Online Content Could be Generated by AI by 2025, Expert Says PetaPixel

===

AI Is Not the New Crypto Isabel Fattal, The Atlantic

===

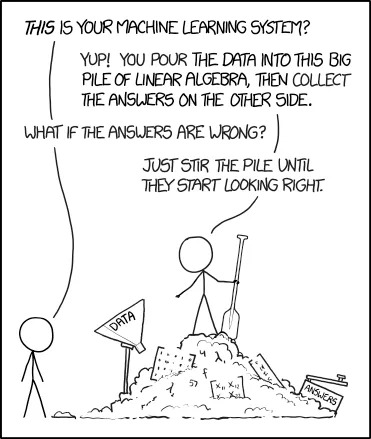

Understanding VQ-VAE (DALL-E Explained Pt. 1) Charlie Snell

DALL-E involves a transformer that takes as input both the embeddings from text and the latent codes from a VQ-VAE trained on images. (from https://ml.berkeley.edu/blog/posts/dalle2/)

DALL-E consists of two main components. A discrete autoencoder that learns to accurately represent images in a compressed latent space. And a transformer which learns the correlations between language and this discrete image representation. Nobody knows exactly why transformers work so well, or even what they actually learn; there is no fundamental theory for deep learning that can explain all of this, these networks are sort of too big and complicated for us to fully understand currently. Most of what we have are just these crazy empirical results like DALL-E. You train a big model with lots of data and follow a set of mostly empirically derived best practices and suddenly your model can generate images of Avocado chairs on command. No one can fully explain it; it just works.
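To fix the idea of "latent codes" in my own head: the quantization step of a VQ-VAE can be sketched as a nearest-neighbour lookup in a codebook. This is only that one step, under loud assumptions. The codebook and the "encoder outputs" below are invented numbers, whereas in the real model both the encoder and the codebook are learned from images.

```python
def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector`."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: sq_dist(vector, codebook[i]))

# Hypothetical codebook of four 2-d entries (a real one is learned and far larger).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Hypothetical encoder outputs, one vector per image patch.
encoder_outputs = [[0.1, 0.2], [0.9, 0.1], [0.2, 0.8], [0.7, 0.9]]

# Each continuous vector is replaced by the integer index of its nearest entry.
latent_codes = [nearest_code(v, codebook) for v in encoder_outputs]
print(latent_codes)  # [0, 1, 2, 3]
```

The resulting list of integers is the kind of discrete image representation that a transformer can then treat like a sequence of tokens.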

DALL-E has 12 billion parameters; this model is enormous (not GPT-3 large, but still enormous). The compute and scaling infrastructure needed to train a model like this is something that few companies can afford.

Simply stated, the goal of language modeling is to compute the probability distribution of language.
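The smallest version of "compute the probability distribution of language" that I can think of is a bigram model that counts which word follows which. This is a toy sketch and nothing like GPT in scale or method. The corpus is an invented fourteen-token string, and the function names are my own.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (illustrative only).
corpus = "the cat sat on the mat . the dog sat on the rug .".split()

# Count how often each word follows each other word.
pair_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    pair_counts[prev][nxt] += 1

def next_word_probs(prev):
    """Estimate P(next word | previous word) from the counts."""
    counts = pair_counts[prev]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

def sequence_prob(words):
    """Chain the conditional probabilities along a word sequence."""
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        prob *= next_word_probs(prev).get(nxt, 0.0)
    return prob

print(next_word_probs("the"))
print(sequence_prob("the cat sat on the mat".split()))  # 0.0625
```

A sequence the corpus never contained, such as "the cat barked", gets probability zero here, which is one reason real models need far more data and smarter estimation.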

===

10 Amazing Techniques For Midjourney You Probably Didn't Know Yet Tristan Wolff

===

Midjourney's V4 Produces Absolutely Insane Images Jeff Hayward

===

ChatGPT pays Kenyan workers $2 an hour to review obscene content Mark Frauenfelder

===

5 Trends in AI that will dominate 2023 Kaitlin Goodrich

===

How ChatGPT Will Ruin the World Gianangelo Dichio

===

What GPT-4 has to offer that GPT-3 didn't

===

Impossible Things Promises to be the World's Most Advanced AI Photo Editor

===

AI Can Diagnose Your Pet's Health Issues From Phone Photos

===

AI detects if YouTubers are infected with omicron coronavirus variant

===

Artist Ellen Maidman-Tanner on why AI is actually good for art Cathy Reisenwitz

But she got to thinking about the history of visual art. "The biggest thing to happen to visual art was photography." Suddenly 2D visual artists weren't required to document monarchs, machinery, create images for advertisements, etc.

As I pointed out in my automation post, many illustrators lost work. But one thing you can't say is that low-cost photography made visual art less creative and interesting on the whole. The fact is that the visual artists who stayed in the game invented impressionism, surrealism, etc. after being freed from having to faithfully render real life. "Today the breadth of visual art is extraordinary," Ellen said....

I expect AI to impact visual art similarly to the way photography did. My concern is that it may eventually impact visual art the way the internet impacted publishing and music. Automating work that used to require many hours from creatives frees creatives to do more interesting work. Technology that ends monopolies on distribution, slashing the impacted industries' profits, forces most creatives to do the most profitable work. ...Ultimately, we both agree there doesn't seem to be an important distinction between a human viewing a lot of existing images and creating something new out of the amalgamation and a machine doing the same thing.

===

AI Art is crap, isn't it? Tristan Wolff

Rather, the "emotions" and "human touch" you feel when you look at a work of art, listen to music, or watch a theatre play come from the only device in the solar system that we know is capable of producing such things: your brain. You empathize, abstract, and thus project meanings (understanding, distrust, love, fear, or loathing) onto the artwork, not the other way around! And in projecting meanings, you unconsciously use the cognitive sediments of a lifetime, all your emotional attachments, your trained behaviors, and your acquired patterns of thought and judgment — and it is this gigantic cognitive cluster of aesthetic taste and worldly experience that makes us "feel" art or not, that makes us stand in front of a toilet in an art gallery and scream "that's stunning!", "that makes no sense!", or "offensive!".

...EVERY work of art is in itself a worthless piece of crap. Only when a human mind, which is in constant social interaction with other people, the world, and itself, begins to project its experiences onto it, only then does art take on meaning. It's just that: a game of meaning.

===

ChatGPT is an inflection moment in human history that debates can't diminish Paul Pallaghy

ChatGPT is a trainable, non-hard-coded, embodiment of human-like language-based intelligence.

And it's moderately to strongly reliable. That's my measured-assessment as an AI researcher/developer.

===

Creative Artificial Intelligence. Index Audio, Visual Media, Music, Text generation. With Examples and Friend-Links. Merzmensch

===

You Think ChatGPT 3 is Impressive? GPT 4 is Going to Blow Your Mind Brice Foote

===

The new way to find the next book to read! Let AI to help you discover the best books for you...

===

Pluralistic: Tiktok's enshittification Cory Doctorow

I call this enshittification, and it is a seemingly inevitable consequence arising from the combination of the ease of changing how a platform allocates value, combined with the nature of a "two sided market," where a platform sits between buyers and sellers, holding each hostage to the other, raking off an ever-larger share of the value that passes between them....

...This shell-game with surpluses is what happened to Facebook. First, Facebook was good to you: it showed you the things the people you loved and cared about had to say. This created a kind of mutual hostage-taking: once a critical mass of people you cared about were on Facebook, it became effectively impossible to leave, because you'd have to convince all of them to leave too, and agree on where to go. You may love your friends, but half the time you can't agree on what movie to see and where to go for dinner. Forget it...

Today, Facebook is terminally enshittified, a terrible place to be whether you're a user, a media company, or an advertiser. It's a company that deliberately demolished a huge fraction of the publishers it relied on, defrauding them into a "pivot to video" based on false claims of the popularity of video among Facebook users. Companies threw billions into the pivot, but the viewers never materialized, and media outlets folded in droves... But Facebook has a new pitch. It claims to be called Meta, and it has demanded that we live out the rest of our days as legless, sexless, heavily surveilled low-poly cartoon characters.

===

The New Age Of The AI Artist Paul DelSignore

The AI artist can be described more as a process, a mashup of human imagination + machine algorithms. The art itself is the result of a series of interconnected steps… refined, re-tuned, remixed, re-rolled, and reimagined...

(quotes Catherine Bosley): "Art is where we make meaning beyond language. It's a means of communication where language is not sufficient to explain or describe its content. Art can render visible and known what was previously unspoken. Because what art expresses and evokes is in part ineffable, we find it difficult to define and delineate it. It is known through the experience of the audience as well as the intention and expression of the artist."

===

GPT-4, AGI & Dark Matter of Consciousness Tobias Jensen

We can probably expect GPT-4 to be the world's best "bull-shitter". Capable of imitating human thought to a near-perfect extent. Yet, it still lacks common sense. "The dark matter of intelligence" as described by computer scientist Yejin Choi:

===

Chat Je Pétais Tony Hirst

===

ChatGPT search — Autotune for knowledge

The librarians were my algorithm.

Right now, still, I'm using a search engine with a bunch of different practices to try and find the information I want curated by other people somewhere out there on the Internet. I put in a search string, I look at what I get back from the algorithm, make some adjustments, and try again. Throughout the process I land on some websites created by humans about the issue I'm interested in.

The search engine algorithm brings me to a human (probably) made knowledge space.

Starting this year, we're going to be returned a mishmash of all the information that is available on the Internet, sorted by mysterious practices (popularity, number of occurrences, validity of sources if we're lucky) and packaged neatly into a narrative. The algorithm is going to convert that information to knowledge for me.

The algorithm presents me with the knowledge, already packaged.

...[Autotune is] everywhere now. If you listen carefully to most popular songs you can hear the uniformity in the sound.

That's what's going to happen to our daily knowledge use... The vast majority of the human experience of learning about something is done at the novice level.

That experience is about to be autotuned.

===

56 Stunning AI-Generated Images Inspired By The Future of Being Human

...I am by no stretch of the imagination an artist in any classical or trained sense. Yet working with Midjourney I'm finding that I can explore and express ideas and concepts in ways that would be impossible otherwise.

And through this partnership I can begin to connect those ideas and insights with others in creative ways, and in turn be iteratively inspired by the ideas and insights that result.

In other words, AI art bots like Midjourney and others seem to have the ability to unleash creativity rather than diminish it, and to open up the way to quite transformative AI-human collaborations.

===

We knew this (ChatGPT) day was coming Paul Pallaghy

It's personalised education.

ChatGPT is an essential research tool from now to collect, query, summarise and understand everything — the scientific literature, news, past forums and every broadcast or movie etc. — way, way better than ever before.

Large language models (LLMs) are just a great way of rendering information into a style.

Think of it that way.

===

What is generative AI? McKinsey Company

===

ChatGPT Doesn't Get Writer's Block. Discuss. John Battelle

Everyone from educators to legislators seem to be asking how we can distinguish between writing done by AIs, and writing done by actual humans. But if the age of the centaur is truly upon us, perhaps we don't have to. Authorship is already a complicated process of bricolage and outright theft. I don't see why adding a tool like ChatGPT should be anything but welcomed.

...When I write, I have no idea how the work is going to end, much less what ideas or points I'll make as I pursue its composition. For a reader, the beauty in a piece of writing is its wholeness. It's a complete thing — it starts, it blathers on for some period of time, it ends. But for a writer, an essay is a process, a living thing. You compose, you reflect, you edit, reject, reshape, and repeat. Once it's finished, the piece quickly settles into an afterlife, a fossilized artifact of a process now complete. The principal joy of writing for the writer isn't in admiring what you've made (though there's a bit of that as well), it's in its creation.

===

Everything We Know About ChatGPT Gizmodo summary

Microsoft recently announced it plans to invest as much as $10 billion into the AI-focused organization. Microsoft has also said it may want to launch a ChatGPT integration for its search engine, Bing.

===

AI Influencers From the Post-ChatGPT Era Alberto Romero

...AI being the mainstream topic everyone is talking about gives rise to a different type of hype. A hype that comes from outsiders who don't know—nor care—about the history of the field, the underlying tech that fuels those fancy models, or the limitations and social repercussions that go implicit with the development of any powerful new technology.

Instead, these outsiders—the marketers, the AI influencers—go around making baseless claims and predictions that lack credibility. And it doesn't matter. Credibility, rigor, and evidence are words that pale next to the bright magic of AI...

As I perceive it, the only way AI had to go mainstream was through this path. There was hype in AI before ChatGPT—there always has been—but what we're living now is unheard of. It doesn't reflect ChatGPT's value or potential, but merely its attractiveness.

If we wanted AI to reach everyone, I can't help but think this was the way. We love clickbait. We love hype. We love easy content. We love shortcuts. We don't like hard stuff that requires energy, time, and effort. That's why AI influencers even exist in the first place.

===

Will AI prompts take over the physical art space? Joy Phillips

A promptist can easily create clothing within an AI generator. A program could be made to turn the clothing image into pattern pieces, and easily graded to various sizes or an individual's measurements. It would then be sent to the 3D fabric printer and printed.

At this stage, there still needs to be someone sewing the pieces of fabric together after they've been printed, but potentially depending on the clothing there may be minimal sewing or it could be printed as one piece. In the future, who knows, there may be a 3D fabric printer that could construct the garment while printing each piece. There is also the whole area of textile arts: wall hangings, quilts and 3D sculptures made from fabric. Once a prompt has been entered, the image could either be printed out flat or ready to sew together. Or put into a program that turns 2D images into 3D models ready to print.

===

ChatGPT about collapse Joe Djemal

===

GPT-4 Will Have 100 Trillion Parameters — 500x the Size of GPT-3 Alberto Romero

Such a technology would change the world as we know it. It could benefit us all if used adequately but could become the most devastating weapon in the wrong hands. That's why OpenAI took over this quest. To ensure it'd benefit everyone evenly: "Our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole."

However, the magnitude of this problem makes it arguably the single biggest scientific enterprise humanity has put its hands upon. Despite all the advances in computer science and artificial intelligence, no one knows how to solve it or when it'll happen...

GPT-3: A language model 100 times larger than GPT-2, at 175 billion parameters.

GPT-3 was the largest neural network ever created at the time — and remains the largest dense neural net. Its language expertise and its innumerable capabilities were a surprise for most...

[OpenAI] partnered with Microsoft in 2019. They licensed the big tech company so they could use some of OpenAI's models commercially in exchange for access to its cloud computing infrastructure and the powerful GPUs they needed.

But GPUs aren't built specifically to train neural nets. The gaming industry developed these chips for graphic processing and the AI industry simply took advantage of its suitability for parallel computation.

...[but] GPUs weren't enough.

Many companies realized it too and started to build in-house specialized chips designed to train neural nets, without losing efficiency or capacity. However, a pure software company like OpenAI can hardly integrate hardware design and fabrication. That's why they took another route: Using third-party AI-specific chips.

Here's where Cerebras Systems enter the scene. This chip company already built the largest chip ever to train large neural networks in 2019. Now they've done it again, and OpenAI will make good use of this amazing piece of engineering...

First, Cerebras has built again the largest chip in the market, the Wafer Scale Engine Two (WSE-2). It is about 22 cm on each side and has 2.6 trillion transistors. In comparison, Tesla's brand new training tiles have 1.25 trillion transistors.

Cerebras found a way to condense computational power efficiently and so WSE-2 has 850,000 cores — the computational unit — whereas typical GPUs have a few hundred. They also solved the heating problem with a novel cooling system and also managed to create an efficient I/O flow of data.

There aren't many uses for ultra-specialized super-expensive mega-powerful chips like WSE-2. Training large neural networks is one of them. And so Cerebras talked to OpenAI...

...compare it with our brain. The brain has around 80-100 billion neurons (GPT-3's order of magnitude) and around 100 trillion synapses. GPT-4 will have as many parameters as the brain has synapses....

There are many questions and very few answers. No one knows if AGI is possible. No one knows how to build it. No one knows if larger neural networks will get increasingly closer to it.
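A quick check of the arithmetic in the piece above, taking its figures at face value. The 100 trillion parameter count for GPT-4 is the article's claim, not something confirmed here, and the variable names are mine.

```python
# Figures as quoted in the article above (not independently verified).
gpt3_parameters = 175e9            # 175 billion
claimed_gpt4_parameters = 100e12   # 100 trillion, per the article's title
brain_neurons_low = 80e9           # lower end of "80-100 billion neurons"
brain_neurons_high = 100e9
brain_synapses = 100e12            # "around 100 trillion synapses"

# How many times larger than GPT-3 the claimed model would be.
size_ratio = claimed_gpt4_parameters / gpt3_parameters

# GPT-3 parameters compared with the quoted neuron counts.
neuron_ratio_low = gpt3_parameters / brain_neurons_high
neuron_ratio_high = gpt3_parameters / brain_neurons_low

print(f"claimed GPT-4 / GPT-3: {size_ratio:.1f}x")
print(f"GPT-3 parameters per neuron: {neuron_ratio_low:.2f} to {neuron_ratio_high:.2f}")
print(f"claimed GPT-4 / synapses: {claimed_gpt4_parameters / brain_synapses:.1f}x")
```

On these numbers the ratio comes out nearer 570x than the headline's 500x, and GPT-3 does sit within the same order of magnitude as the quoted neuron count.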

===

ChatGPT. We are all royally f@#$ed. Victor Ronin ChatGPT:

===

Shutterstock Has Launched Its Generative AI Image Tool

===

GLM-130B: An Open Bilingual Pre-Trained Model Victor Mair

===

Generators at Their Finest Bruce Sterling

===

Though the Charleston is tolerated, the twist is taboo Rob Horning

===

The practical guide to using AI to do stuff

===

An AI app walks into a writers room Charlie Stross

The villagers of a quaint and idyllic hamlet tucked away in the rolling hills of rural England suddenly realized that their beloved cheese shop, the lifeblood of their community, was closing its doors for good.

At first, they couldn't quite believe it. After all, the cheese shop had stood for generations, a symbol of the village's continuity and stability in an ever-changing world. But then, the whispers started. A dark rumour, like a cold gust of wind, that the cheese shop's proprietor, old Mr. Wensleydale, was retiring, and his business was to be taken over by a faceless conglomerate from the city...

===

Awesome New Feature From Midjourney — Seamless Tiles

===

'Lunchbox' Replaces Photographers as the First AI Image Generator for Food

===

ChatGPT: Netscape Moment or Nothing Really Original Jean-Louis Gassée

===

5 Practical Applications Where ChatGPT Shines Alberto Romero

===

Whispers of A.I.'s Modular Future

Eventually, though, someone will release a program that's nearly as capable as ChatGPT, and entirely open-source. An enterprising amateur will find a way to make it run for free on your laptop. People will start downloading it, remixing it, connecting it, rethinking and reimagining. The capabilities of A.I. will collide with our collective intelligence. And the world will start changing in ways we can't yet predict.

===

Working with Broken Tony Hirst

...So I'm wondering: is this the new way of doing things? Giving up on the myth that things work properly, and instead accept that we have to work with tools that are known to be a bit broken? That we have to find ways of working with them that accommodate that? Accepting that everything we use is broken-when-shipped, that everything is unreliable, and that it is up to us to use our own craft, and come up with our own processes, in order to produce things that are up to the standard we expect, even given the unreliability of everything we have to work with? Quality assurance as an end user problem?

===

A Day on MidJourney Server: It's a Revolution Before Our Eyes Marjan Krebelj

Perhaps machines will surpass us, and we'll become their slaves, but that isn't new either. It is only that the devices are becoming a bit more visible now. It has been a long time since we began sacrificing our individual lives to invisible super-organisms like companies, political parties, nation-states, clubs, and the most important of them all — money. Although they don't exist anywhere else but in our heads, we imagine them to be as tangible as real things and persons; we grant them rights we don't grant ourselves, and we gladly sacrifice our livelihoods so they can thrive.

We are merely finding new ways to be victims of our biology, I guess.

What I do know is that we're on the brink of a new revolution and I think you are on the safe side if you join it. Those that don't will be left behind. The challenge now is to do it ethically.

===

Midjourney AI 'Imagines' Street Photography From The Greats

===

A New Wave of AI-Powered Tools Coming Soon HungryMinded

===

The AI Art Renaissance Paul DelSignore

===

Inspired prompts Rob Horning

From this perspective, feeds don't merely reflect but reproduce compulsion, and generated content will be used precisely to intensify this process. In that scenario, branded content from creators can be phased out in favor of content that allows users to experience themselves as a brand of a sort — the specific set of proclivities that conceptually holds together whatever content the machines throw at them.

...What people find interesting or boring is altered by the very process of catering to it. Optimizing for "entertainment" doesn't solve for it once and for all or negate whatever its opposite is supposed to be. Engagement and "diversion" necessarily co-exist as the conditions for each other.

...many commentators seem to feel threatened by the possibility that AI will induce passivity and apathy in us against our will, that it will train us to be incurious. AI models would seem to tempt us with their immediacy, which would then deplete our capability to be satisfied with even the interesting content it makes, so that we could experience nothing but contentless diversion...

...while some of us will feel important because we read articles that mock other people's need to feel important, generative AI will go on helping people imagine that someone wants to pay attention to them. As Sophie Haigney points out about Lensa — an app that generates images of ourselves in stock costumes — AI is capable of "feeding a wholly private fascination with ourselves."

===

What You May Have Missed Alberto Romero

Nvidia's Jim Fan has a convincing explanation: It's better to pay others to do what may cost you more than money can repay.

===

The Fragility of Artificial Intelligence Giles Crouch

Three primary reasons. The first is energy and the second is related to energy, which is, it can be unplugged. The other is that AI is disembodied from society. Current investment into AI tools is more focused on AI development and business models. Less on sustainability of the tools.

AI is a disembodied technology. Unlike our devices and physical tools we use, AI isn't really embodied within our daily interactions. When a technology is disembodied from culture, non-tactile and largely relies on our imagination for its existence, we have less connection to it, which makes it easier if we decide we don't like it as a society and want to make changes. This is a point of fragility for AI...

The looming reality of the Splinternet, that some suggest is already here, could also play a role in limiting or having an adverse impact on some AI tools as well. The more walled gardens, which is a trend underway already, also means difficulty accessing data, or driving up the price of access. This is where infonomics comes into play.

===

Radar Trends to Watch: February 2023 O'Reilly

===

ChatGPT is a blurry jpeg of the Web Ted Chiang, New Yorker blog

===

DeepMind Is Now the Undisputed Leader in Language AI with Gopher (280B) Alberto Romero

The model was trained on MassiveText (10.5 TB), which includes various sources like MassiveWeb (a compilation of web pages), C4 (Common Crawl text), Wikipedia, GitHub, books, and news articles. Together with Gopher, DeepMind built the Gopher family, a series of smaller models spanning from 44M to 7.1B params. All the models were trained on 300B tokens (12.8% of MassiveText) to isolate scale effects on their power...

===

U.S. Copyright Office tells Judge that AI Artwork isn't Protectable PetaPixel

The Copyright Office says that its own guidelines specify human authorship as a requirement for protection and that "the Office will refuse to register a claim if it determines that a human being did not create the work."

The Office "will not register works produced by a machine or mere mechanical process that operates randomly or automatically without any creative input or intervention from a human author."

===

Is AI Stealing From Artists? Kyle Chayka, New Yorker blog

===

Copyright won't solve creators' Generative AI problem

===

'AI Prompter' A New Niche AI job in the market

===

Did He Say 'Bird'? NYT Newsletter 10ii23

===

Google vs Microsoft: Microsoft's New Bing Is a Paradigm Change for Search and the Browser Alberto Romero

...Through Prometheus, Microsoft has found a way to bring together the generative capabilities of reinforced LMs like ChatGPT, with the reliable retrieval skills of a search engine.

...Microsoft's repeated emphasis on the concept of "Copilot". As I see it, they want to convey the idea that the new products (search, browser, chatbots, etc.) aren't disconnected from the person that uses them. They want to convince us that there's always a human in the loop — in their view, that's the person who prompts the AIs, either with a search query, a direct question, or with the intention to write a creative fiction story. They want to emphasize that human and AI are inseparable.

===

8 Signs That the AI 'Revolution' Is Spinning Out of Control Lucas Ropek at Gizmodo

Do the people actually want an AI "revolution"? It's not totally clear but whether they want it or not, it's pretty clear that the tech industry is going to give it to them. The robots are coming. Prep accordingly!

===

Why Film Photography is the Antithesis of AI Art Simon King

These forms of art can allow the user to depict imagery that would otherwise exist only in their mind's eye. When Dali wants melting clocks, he prepares his canvas; when a photographer wants melting clocks, they must prepare some kind of industrial oven...

...A digital file is removed from context as soon as it is shown in isolation, separated from sequence and source. A film negative will always exist alongside the images from the same roll, inescapable from what came before and what occurred after. The physical nature of film means it can be examined in person, and an "independent" print can be produced from it. A digital image has no such method for verification...

===

Digitization of Babylonian fragments Fragmentarium: AI deciphers... Victor Mair

Fragmentarium makes this process a whole lot easier. From the 22,000 text fragments that have been digitized so far, the AI can sift through the images and systematically assemble text fragments together by making connections in seconds that would typically take human researchers months.

"It's a tool that has never existed before, a huge database of fragments. We believe it is essential to the reconstruction of Babylonian literature, which we can now progress much more rapidly," Enrique Jiménez, Professor of Ancient Near Eastern Literatures at the Institute of Assyriology at Ludwig Maximilian University, said in a statement...

===

Stable Diffusion Is the Most Important AI Art Model Ever Alberto Romero [Aug 2022]

===

Sources and attribution for AI-generated images Flowing Data

Stable Attribution goes in the opposite direction of image generation, and instead tries to identify source images of a given AI-generated image. Load an image and Stable Attribution looks for the most similar images in the Stable Diffusion training data.
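The "most similar images" lookup described above can be pictured as a nearest-neighbour search. What follows is only a toy sketch under loud assumptions: each image is imagined as a short vector of numbers (an "embedding"), similarity is measured by cosine similarity, and the names and vectors are invented for illustration. Nothing here is taken from Stable Attribution's actual code or method, which the quoted description does not spell out.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(query, training_set, k=2):
    """Return the names of the k training items closest to the query vector."""
    ranked = sorted(
        training_set.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]

# Hypothetical embeddings standing in for training images (invented values).
training_embeddings = {
    "sunset_photo": [0.9, 0.1, 0.0],
    "cat_drawing": [0.0, 1.0, 0.2],
    "beach_painting": [0.8, 0.2, 0.1],
}

# Hypothetical embedding of an AI-generated image.
generated = [1.0, 0.1, 0.0]

print(most_similar(generated, training_embeddings))
```

A real system would have to search billions of vectors rather than three, so it would presumably rely on an approximate index instead of sorting everything.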

===

How ChatGPT Works: The Model Behind The Bot Molly Ruby

The most basic training of language models involves predicting a word in a sequence of words...
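To make "predicting a word in a sequence of words" concrete, here is a deliberately tiny sketch. It assumes a bigram counter (for each word, count which word follows it) over an invented one-line corpus. That is far simpler than the transformer behind ChatGPT and is offered only as an illustration of the prediction task, not of the model itself.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Invented toy corpus; a real model trains on hundreds of billions of words.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))    # "cat" follows "the" twice, "mat" once
print(predict_next(model, "zebra"))  # unseen word, so no prediction
```

The gap between this and a large language model is mostly one of scale and context: the sketch looks back one word, while the models discussed here condition on thousands of preceding tokens.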

===

Will ChatGPT supplant us as writers, thinkers? (Harvard Gazette commentary by Steven Pinker, of whom I remain suspicious)

...We're dealing with an alien intelligence that's capable of astonishing feats, but not in the manner of the human mind. We don't need to be exposed to half a trillion words of text (which, at three words a second, eight hours a day, would take 15,000 years) in order to speak or to solve problems. Nonetheless, it is impressive what you can get out of very, very, very high-order statistical patterns in mammoth data sets... Since LLMs operate so differently from us, they might help us understand the nature of human intelligence. They might deepen our appreciation of what human understanding does consist of when we contrast it with systems that superficially seem to duplicate it, exceed it in some ways, and fall short in others.
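The "15,000 years" figure in the passage above is easy to check. The sketch below uses only the numbers quoted there (half a trillion words, three words a second, eight hours a day) plus one assumption of my own: 365 days a year with no days off.

```python
# Figures quoted in the passage above.
total_words = 500_000_000_000        # half a trillion words
words_per_second = 3
hours_per_day = 8

# Assumption (not in the source): every day of a 365-day year is used.
days_per_year = 365

seconds_per_day = hours_per_day * 60 * 60
words_per_year = words_per_second * seconds_per_day * days_per_year
years_needed = total_words / words_per_year

print(f"{words_per_year:,} words per year")
print(f"about {years_needed:,.0f} years")
```

Under those assumptions the result comes out a little under 16,000 years, so the quoted 15,000 is a fair round number.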

===

Mindmaps using ChatGPT and PlantUML aruva

===

AI Art Stands at the Border between Mimesis and Nemesis Hee Dae Kim

The second question is, 'Can mimesis be the essence of art?' Deleuze stated that artistic creation is to embody pure senses on a painting and that to do it, the following three conditions need to be met: Executing a distortion that goes beyond the scope of existing knowledge. Being able to utilize incidental elements and the lines of inorganic lives. Finally, physical senses and intuitive, intellectual thinking abilities to appreciate and judge them...

The final question is, What is the 'peculiarity of humans' that machines cannot imitate (mimesis) but get frustrated (nemesis)? This question is about the relation between AI and humans after all. The peculiarity of humans that machines do not have is in emergence, consciousness (memory), and cooperation of humans.

===

AI Hallucinations: A Provocation Mike Loukides

What if we viewed an AI's "hallucinations" as the precursor of creativity? After all, when ChatGPT hallucinates, it is making up something that doesn't exist. (And if you ask it, it is very likely to admit, politely, that it doesn't exist.) But things that don't exist are the substance of art. Did David Copperfield exist before Charles Dickens imagined him? It's almost silly to ask that question ... Bach's works didn't exist before he imagined them, nor did Thelonious Monk's, nor did Da Vinci's... These human creators didn't do great work by vomiting out a lot of randomly generated "new" stuff. They were all closely tied to the histories of their various arts. They took one or two knobs on the control panel and turned it all the way up, but they didn't disrupt everything. If they had, the result would have been incomprehensible, to themselves as well as their contemporaries, and would lead to a dead end. That sense of history, that sense of extending art in one or two dimensions while leaving others untouched, is something that humans have, and that generative AI models don't. But could they?

...Is it possible to build a language model that, without human interference, can experiment with "that isn't great, but it's imaginative. Let's explore it more"? Is it possible to build a model that understands literary style, knows when it's pushing the boundaries of that style, and can break through into something new? And can the same thing be done for music or art?

===

No, Bing's AI Chatbot Is Not Sentient Brendan Hesse