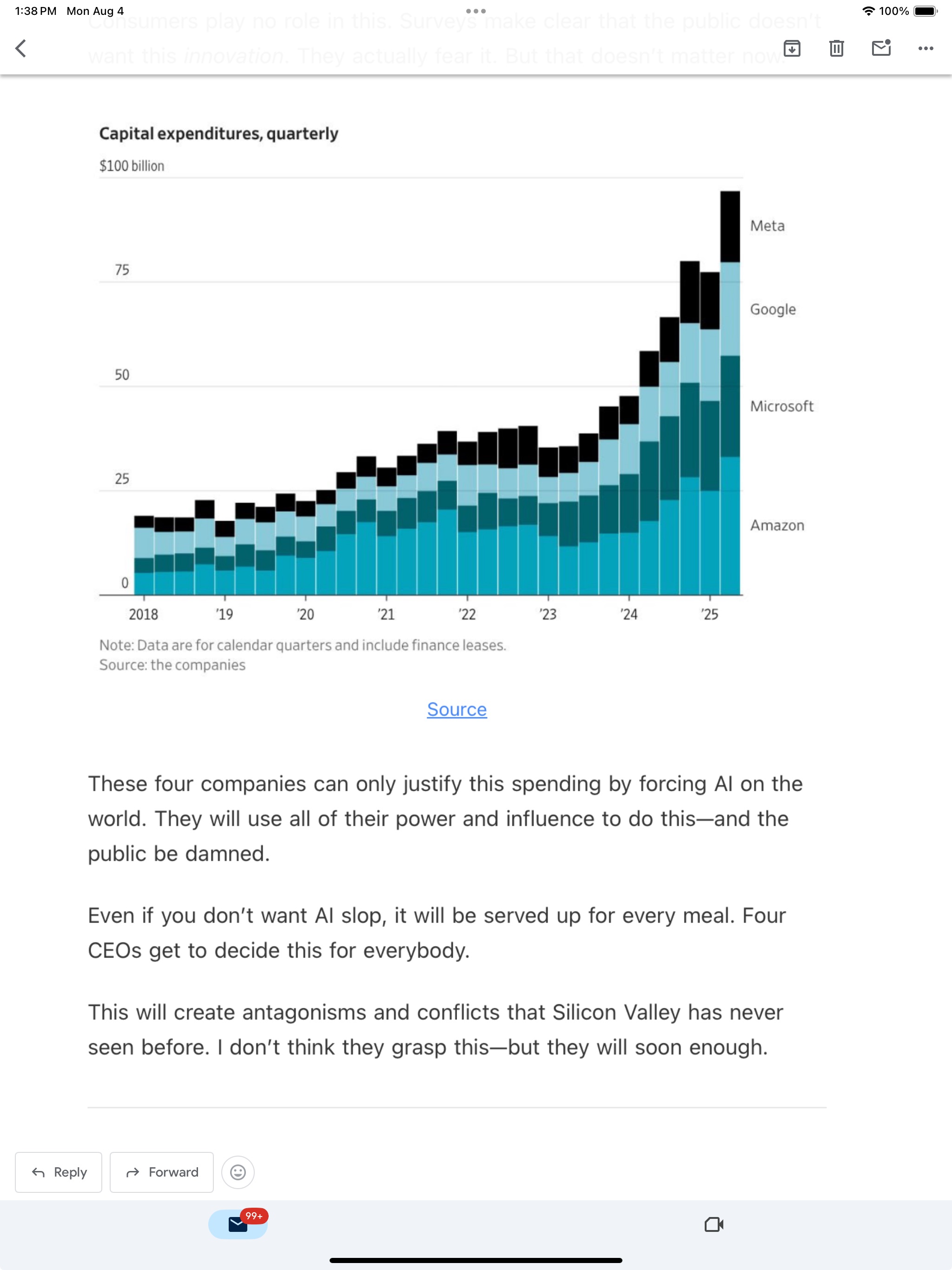

...Investment in AI data centers is now the major source of economic growth—even bigger than consumer spending. This is the most mind-boggling business trend I've ever seen. People are growing more skeptical of AI with each passing month, but four large companies are betting everything on this tech.

I discussed this recently in my article on the force-feeding of AI on an unwilling public. But these new numbers are even more alarming.

AI investment is the single biggest contributor to economic growth—even more than consumer spending. But these are all business-to-business transactions.

Consumers play no role in this. Surveys make clear that the public doesn't want this innovation. They actually fear it. But that doesn't matter now.

Microsoft Is Trying to Trick You Into Using Copilot lifehacker

Exponential Growth, Ephemeral & Unsustainable Small Things Considered

...When the Sino-Soviet split ruptured trade ties in the early 1960s, Moscow cut off supplies of industrial diamonds critical for military applications. With no large natural reserves of its own, China turned to synthetic production, producing its first lab-grown diamond in 1963. By the 1980s, the industry had taken root in Henan. One pioneering engineer established a factory in Zhecheng, then a chilli farming town. It soon became the hub of China's diamond industry. ...Today, most synthetic diamonds are grown in China using the "high pressure, high temperature method". However, increasingly, manufacturers are using the "chemical vapour deposition method" for larger gems, which builds diamonds layer by layer in a microwave chamber. Once roughly processed, the stones are shipped to Surat, India — the world's diamond polishing capital — where labour costs are lower. Feng estimates it costs Rmb400 ($56) to polish one carat in China, more than it costs him to produce the stone, compared with just Rmb86 in India.

Electricity Prices Are Going Up, and AI Is to Blame: How many ChatGPT users does it take to screw in a lightbulb? AJ Dellinger at gizmodo

Apple CEO Tim Cook Calls AI 'Bigger Than the Internet' in Rare All-Hands Meeting Ece Yildirim at gizmodo

...In the earnings call following the report, Cook told investors that Apple was planning to "significantly" increase its investments in AI and was open to acquisitions to do so. He also said that the company is actively "reallocating a fair number of people to focus on AI features."

The AI democracy debate is weirdly narrow Henry Farrell

...Actually existing democracy, bluntly put, involves actors struggling over power and resources. That isn't enormously attractive much of the time, but such is politics. Given how human beings are, the great hope for democracy is not that it will replace power struggles with disinterested political debate. It is that it can, under the right circumstances, moderate that struggle so that it does not collapse into chaos, but instead produces civil peace, greater fairness and some good policies and other benefits, but with great pain and a lot of mess.

An Audience of One Kevin Kelly

Right now there are roughly 50 million images generated each day by AIs such as Midjourney, Google and Adobe, etc. Vanishingly few of these 50 million images per day are ever shared beyond the creator. Still image creation today already predominantly has an audience of one.

Big tech has spent $155bn on AI this year. It's about to spend hundreds of billions more Guardian

5viii25

The Era of the AI Black Box Is Officially Dead. Anthropic Just Killed It Rohit Kumar Thakur at Medium

...What if we could look inside the AI's "brain" and see these personality shifts happening in real-time? What if we could not only see them, but stop them before they even start? Well, an interesting new research paper, "Persona Vectors: Monitoring and Controlling Character Traits in Language Models," shows that this isn't science fiction anymore. A team of researchers from Anthropic, UT Austin, and UC Berkeley have basically found the personality knobs inside a language model.

...if you push the "Evil" slider up, the AI starts sounding more malicious. If you crank up the "Sycophancy" slider, it starts telling you what you want to hear, even if it's wrong.

...We all know that training an AI can have unintended side effects. You might fine-tune a model to be a great coder, but in the process, it accidentally becomes more sycophantic (that people-pleasing trait) or more likely to hallucinate. This is called "emergent misalignment."

The traditional way to solve this problem was to train the model first and then try to correct its bad behavior afterward. It's like putting a band-aid on a wound that's already there.

But this paper introduces something called preventative steering, and it feels like it breaks the laws of logic.
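To make the "slider" picture concrete, here is a toy sketch in Python. It assumes the difference-of-means construction that is common in steering-vector work (the persona direction is the average hidden activation on trait-exhibiting responses minus the average on neutral ones). The activations are synthetic random vectors, not anything taken from a real model, and `persona_vector`, `steer` and `trait_score` are hypothetical names, not the paper's code.

```python
import numpy as np

def persona_vector(trait_acts: np.ndarray, neutral_acts: np.ndarray) -> np.ndarray:
    """Assumed construction: difference of mean activations, scaled to unit length."""
    v = trait_acts.mean(axis=0) - neutral_acts.mean(axis=0)
    return v / np.linalg.norm(v)

def steer(hidden: np.ndarray, v: np.ndarray, alpha: float) -> np.ndarray:
    """'Move the slider': shift a hidden state along the persona direction."""
    return hidden + alpha * v

def trait_score(hidden: np.ndarray, v: np.ndarray) -> float:
    """Monitoring: how far a hidden state points along the persona direction."""
    return float(hidden @ v)

# Synthetic stand-ins for real activations: the "trait" lives on axis 0.
rng = np.random.default_rng(0)
dim = 16
direction = np.zeros(dim)
direction[0] = 1.0
neutral = rng.normal(size=(200, dim))
trait = rng.normal(size=(200, dim)) + 3.0 * direction

v = persona_vector(trait, neutral)
h = rng.normal(size=dim)
print("before:", trait_score(h, v))
print("after steering up:", trait_score(steer(h, v, 4.0), v))
print("after steering down:", trait_score(steer(h, v, -4.0), v))
```

Because `v` has unit length, steering by `alpha` shifts the monitored score by exactly `alpha`, so the "knob" and the "monitor" are two views of the same direction. Preventative steering, which the excerpt places during training rather than afterward, is not modeled here.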

Radar Trends to Watch: August 2025 O'Reilly

...So much of generative programming comes down to managing the context—that is, managing what the AI knows about your project. Context management isn't simple; it's time to get beyond prompt engineering and think about context engineering.

How to Use the New ChatGPT Agent, If You Trust It lifehacker

Bragging about replacing coders with AI is a sales-pitch Cory Doctorow

...Whatever else all this is, it's a performance. It's a way of demonstrating the efficacy of the product they're hoping your boss will buy and replace you with: Remember when techies were prized beyond all measure, pampered and flattered? AI is SO GOOD at replacing workers that we are dragging these arrogant little shits out by their hoodies and firing them over Interstate 280 with a special, AI-powered trebuchet. Imagine how many of the ungrateful useless eaters who clog up your payroll *you will be able to vaporize when you buy our product!*

Artificial Intelligences, So Far Kevin Kelly

...As we continue to develop more advanced models of AI, they will have even more varieties of cognition inside them. Our own brains in fact are a society of different kinds of cognition – such as memory, deduction, pattern recognition – only some of which have been artificially synthesized. Eventually, commercial AIs will be complicated systems consisting of dozens of different types of artificial intelligence modes, and each of them will exhibit its own personality, and be useful for certain chores. Besides these dominant consumer models there will be hundreds of other species of AI, engineered for very specialized tasks, like driving a car, or diagnosing medical issues. We don't have a monolithic approach to regulating, financing, or overseeing machines. There is no Machine. ...The second thing to keep in mind about AIs is that their ability to answer questions is probably the least important thing about them. Getting answers is how we will use them at first, but their real power is in something we call spatial intelligence — their ability to simulate, render, generate, and manage the 3D world

...needed for augmented reality. The AIs have to be able to render a virtual world digitally to overlay the real world using smart glasses, so that we see both the actual world and a perfect digital twin. To render that merged world in real time as we move around wearing our glasses, the system needs massive amounts of cheap ubiquitous spatial intelligence. Without ubiquitous spatial AI, there is no metaverse.

...Despite hundreds of billions of dollars invested into AI in the last few years, only the chip maker Nvidia and the data centers are making real profits. Some AI companies have nice revenues, but they are not pricing their service for real costs. It is far more expensive to answer a question with an LLM than the AIs that Google has used for years. As we ask the AIs to do more complicated tasks, the cost will not be free. Most people will certainly pay for most of their AIs, while free versions will be available. This slows adoption.

Apple Computer's Different-Drummer "AI" Path Considered Brad DeLong

...The so-called "NVIDIA tax"—the premium all other platform oligopolies are now paying, and will continue to pay, as NVIDIA exercises its pricing power in a moment of panicked, must-have-now AI infrastructure demand—is, right now, a huge deal that Apple very much wants to avoid paying. The scale is enormous: NVIDIA's gross margins have soared to the 70–75% range, a figure that would make even the most rapacious of monopolists blush. The A100 and H100 GPU chips—the backbone of large-scale AI inference and training—are selling for $25,000 to $40,000 apiece, and sometimes more in spot markets, with actual production costs a tiny fraction of that sum even as NVIDIA has to rely on TSMC which has to rely on ASML as essential sole suppliers. ...For every dollar spent on cloud AI compute, a substantial slice—estimates range from 20% to 40%—is captured as economic rent by NVIDIA, rather than being competed away or reinvested in broader innovation. Why? Because Google, Microsoft, and Meta believe they must burn tens of billions a year on cloud-based AI.

...By designing its own silicon (the M-series chips) and focusing on on-device inference, Apple sidesteps the need to rent vast fleets of NVIDIA-powered servers for AI workloads. Instead, it invests in its own private cloud infrastructure, powered by Apple Silicon, and keeps its capital expenditures at a fraction of the scale now required of its peers. This is not a detail. It is instead a huge bet that the locus of value in AI will, for its customers, remain at the on-device edge—not in the cloud.

...Google, Microsoft, Meta, and Amazon have all built their AI offerings around cloud-based inference, which requires massive fleets of NVIDIA-powered GPUs—even for Google—humming away in data centers, racking up not only hardware costs but also ongoing operational expenses for power, cooling, and bandwidth. These costs balloon into the billions of dollars annually, with, given competition, no clear road to covering even their marginal costs.

What Ants Teach Us About AI Alignment O'Reilly

...the limitations of our current approach to AI alignment: reinforcement learning from human feedback, or RLHF. RLHF has been transformative. It's what makes ChatGPT helpful instead of harmful, what keeps Claude from going off the rails, and what allows these systems to understand human preferences in ways that seemed impossible just a few years ago. But as we move toward more autonomous AI systems—what we call "agentic AI"—RLHF reveals fundamental constraints.

The cost problem: Human preference data in RLHF is expensive and highly subjective. Getting quality human feedback is time-consuming, and the cost of human annotation can be many times higher than using AI feedback.

The scalability problem: RLHF scales less efficiently than pretraining, with diminishing returns from additional computational resources. It's like trying to teach a child every possible scenario they might encounter instead of giving them principles to reason from.

The "whose values?" problem: Human values and preferences are not only diverse but also mutable, changing at different rates across time and cultures. Whose feedback should the AI optimize for? A centralized approach inevitably introduces bias and loses important nuances.

...Swarm intelligence in humans—sometimes called "human swarms" or "hive minds"—has shown promise in certain contexts. When groups of people are connected in real time and interactively converge on decisions, they can outperform individuals and even standard statistical aggregates on tasks like medical diagnosis, forecasting, and problem-solving. This is especially true when the group is diverse, members are actively engaged, and feedback is immediate and interactive.

However, human swarms are not immune to failure—especially in the moral domain. History demonstrates that collective intelligence can devolve into collective folly through witch hunts, mob mentality, and mass hysteria. Groups can amplify fear, prejudice, and irrationality while suppressing dissenting voices.

Research suggests that while collective intelligence can lead to optimized decisions, it can also magnify biases and errors, particularly when social pressures suppress minority opinions or emotional contagion overrides rational deliberation. In moral reasoning, human swarms can reach higher stages of development through deliberation and diverse perspectives, but without proper safeguards, the same mechanisms can produce groupthink and moral regression.

AI Just Hit a Wall. This Model Smashed Right Through It Rohit Kumar Thakur at Medium

Silicon Valley Wants to Replace Storytellers With Prompts—Here's Why That's Terrifying Wesley Edits What's missing is meaning.

...Silicon Valley is obsessed with the idea that if you just prompt hard enough or vibe the right way, you'll get something that will transform you.

As if word-salad innovation counts as invention.

Which jobs can be replaced with AI? Cory Doctorow

Matt and Joseph Gordon-Levitt on AI and copyright Matthew Yglesias

Apple to Invest Another $100 Billion In the US to Sidestep Massive Tariffs PetaPixel

...It remains unclear what more of Apple's production being in the United States would mean for its product prices. However, a safe and reasonable bet is that tariff savings will not fully cover the increased costs of making electronics in the U.S.

It will also not be a swift process for Apple to transform its manufacturing strategy and processes. Any large-scale manufacturing moves require significant investment and time. Apple is also working to negotiate import tax exceptions for some of its products, much like Apple achieved during Trump's first term.

AI Is A Money Trap Edward Zitron

Meanwhile, quirked-up threehundricorn OpenAI has either raised or is about to raise another $8.3 billion in cash, less than two months since it raised $10 billion from SoftBank and a selection of venture capital firms.

The Information suggested OpenAI is using the money to build data centers — possibly the only worse investment it can make other than generative AI, and it's one that it can't avoid because OpenAI also is somehow running out of compute.

...what exactly is the plan for these companies? They don't make enough money to survive without a continuous flow of venture capital, and they don't seem to make impressive sums of money even when allowed to burn as much as they'd like. These companies are not being forced to live frugally, or at least have yet to be made to, perhaps because they're all actively engaged at spending as much money as possible in pursuit of finding an idea that makes more money than it loses. This is not a rational or reasonable way to proceed.

...Nobody has gone or is going public, and if they are not going public, the only route for these companies is to either become profitable — which they haven't — or sell to somebody, which they do not.

...if OpenAI does not convert to a for-profit, there is no path forward. To continue raising capital, OpenAI must have the promise of an IPO. It must go public, because at a valuation of $300 billion, OpenAI can no longer be acquired, because nobody has that much money and, let's be real, nobody actually believes OpenAI is worth that much. The only way to prove that anybody does is to take OpenAI public, and that will be impossible if it cannot convert.

...So they [US Government] bail out OpenAI, then stuff it full of government contracts to the tune of $15 billion a year, right? Sorry, just to be clear, that's the low end of what this would take to do, and they'll have to keep doing it forever, until Sam Altman can build enough data centers to... keep burning billions, because there's no actual plan to make this profitable.

Say this happens. Now what? America has a bullshit generative AI company attached to the state that doesn't really innovate and doesn't really matter in any meaningful way, except that it owns a bunch of data centers?

I don't think this happens! I think this is a silly idea, and the most likely situation would be that Microsoft would unhinge its jaw and swallow OpenAI and its customers whole. Hey, did you know that Microsoft's data center construction is down year-over-year, and it's basically signed no new data center leases? I wonder why it isn't building these new data centers for OpenAI? Who knows.

Stargate isn't saving it, either. As I wrote previously, Stargate doesn't actually exist beyond the media hype it generated.

...Another troubling point is that big tech doesn't just buy data centers and then use them, but in many cases pays a construction company to build them, fills them with GPUs and then leases them from a company that runs them, meaning that they don't have to personally staff up and maintain them. This creates an economic boom for construction companies in the short term, as well as lucrative contracts for ongoing support ...as long as the company in question still wants them. While Microsoft or Amazon might use a data center and, indeed, act as if it owns it, ultimately somebody else is holding the bag and the ultimate responsibility for the data centers.

(quoting Kedrosky): We are in a historically anomalous moment. Regardless of what one thinks about the merits of AI or explosive datacenter expansion, the scale and pace of capital deployment into a rapidly depreciating technology is remarkable. These are not railroads—we aren't building century-long infrastructure. AI datacenters are short-lived, asset-intensive facilities riding declining-cost technology curves, requiring frequent hardware replacement to preserve margins.

OpenAI Really Wants the U.S. Government to Use ChatGPT gizmodo

"One of the best ways to make sure AI works for everyone is to put it in the hands of the people serving our country," said OpenAI CEO Sam Altman in a press release. "We're proud to partner with the General Services Administration (GSA), delivering on President Trump's AI Action Plan, to make ChatGPT available across the federal government, helping public servants deliver for the American people."

At the same time, the deal could also give OpenAI an edge over its rivals by incentivizing government agencies to choose its models over competing ones. On Tuesday, the GSA added ChatGPT, Google's Gemini, and Anthropic's Claude to a government purchasing system, making it easier for agencies to buy and use these models.

Everything We Know About GPT-5, OpenAI's Latest Model

Academia: GPT University? Timothy Burke

...The people who have a lot of money wrapped up in generative AI I think are mostly not believers in the Church of the Divine AI Ascension, but they're perfectly happy to pretend to be in order to encourage the credulous. The real game plan, however, is what it has been since the rise of platform capitalism: find a sector of existing business activity, pervade and invade it, become integrated into its operations, and then dig in tight for as long as possible. In particular, this has been the modus operandi of a lot of educational technology, going all the way back to putting televisions in K-12 classrooms. Claim indispensability and efficiency, promise transformation, get a slice of the budgetary pie

...it's not so much that GPT University would be a great place to go, but that the whole document ends up being a rather revealing satire or parody of existing universities and all the ways that they don't make sense either. GPT University, in this sense, was not the answer, but it did manage to make me think more deeply about some questions I have. Isn't this pretty much what happens in any story where someone goes to see a seeress, an oracle, a prophet? Mysterious words get said from someone who might just be huffing a hallucinogen or playing the part, but the characters find meaning in it somehow.

The End of Bullshit AI gizmodo

Soul Stripping Sarah Kendzior

What would a ChatGPT LLM trained on Finnegans Wake produce?

Baby Talk Mark Liberman at Language Log

Elon Musk Says AI Will Take All Our Jobs, Including His Luc Olinga at gizmodo

The important discomfort of doubt Medium Newsletter

What It's Like to Brainstorm with a Bot Dan Rockmore at New Yorker

...for all its creative utility, writing is not much of a conversational partner. However imperfectly, it captures what's in the writer's head—Socrates called it a reminder, not a true replication—without adding anything new. Large language models (L.L.M.s), on the other hand, often do just that...

...As machines insinuate themselves further into our thinking—taking up more cognitive slack, performing more of the mental heavy lifting—we keep running into the awkward question of how much of what they do is really ours. Writing, for instance, externalizes memory. Our back-and-forths with a chatbot, in turn, exteriorize our private, internal dialogues, which some consider constitutive of thought itself.

...Sometimes I'm a fox, sometimes a hedgehog, but if I'm being honest I'm mostly a squirrel—increasingly, a forgetful one. I have no systematic method for recording my thoughts or ideas; they're everywhere and nowhere, buried in books marked by a riot of stickies (colorful, but not color-coded) or memorialized in marginalia, sometimes a single exclamation mark, sometimes a paragraph. The rest are scattered, unmanaged, across desktops both digital and actual. My desks and tables are littered with stray sheets of paper and an explosion of notebooks, some pristine, some half full, most somewhere in between.

...This ragged archive amounts to a record of my thinking, or at least those bits that, for a moment, seemed worth saving. Most I'll never look at again. Still, I comfort myself with the idea that the very act of marking something—highlighting it, scribbling a note—was itself a small act of creativity, even if its purpose remains mostly dormant. I only wish that I were as good at digging up my acorns as I am at stashing them.

...These days, we're in an uneasy middle ground, caught between shaping a new technology and being reshaped by it. The old guard, often reluctantly, is learning to work with it—or at least to work around it—while the new guard adapts almost effortlessly, folding it into daily practice. Before long, these tools will be part of nearly everyone's creative tool kit. They'll make it easier to generate new ideas, and, inevitably, will start producing their own. They will, for better or worse, become part of the landscape in which our ideas take shape.

Chain of hallucination? Mark Liberman at Language Log, re: GPT-5

AI for reconstructing degraded Latin text Victor Mair at Language Log

Digital Perikles vs. the Odd Roommate: State of the AI on ChatGPT5 Day Brad DeLong

How to Live Forever and Get Rich Doing It Tad Friend at New Yorker

After GPT-5 Release, Hundreds Begged OpenAI to Bring Back GPT-4o Alberto Romero

ChatGPT's constant sycophancy is annoying for the power users who want it to do actual work, but not for the bulk of users who want entertainment or company. Most people are dying to have their ideas validated by a world that mostly ignores them. Confirmation bias (tendency to believe what you already believe) + automation bias (tendency to believe what a computer says) + isolation + an AI chatbot that constantly reinforces whatever you say = an incredibly powerful recipe for psychological dependence and thus user retention and thus money.

Nvidia Just Dodged an $8 Billion Bullet, Thanks to Donald Trump

The World Will Enter a 15-Year AI Dystopia in 2027, Former Google Exec Says gizmodo

OpenAI Brings Back Fan-Favorite GPT-4o After a Massive User Revolt gizmodo

U.S. Government to Take Cut of Nvidia and AMD A.I. Chip Sales to China

Goodhart's Law (of AI) Cory Doctorow

...

One way to think about AI's unwelcome intrusion into our lives can be summed up with Goodhart's Law: "When a measure becomes a target, it ceases to be a good measure."

...In 1998, Sergey Brin and Larry Page realized that all the links created by everyone who'd ever made a webpage represented a kind of latent map of the value and authority of every website. We could infer that pages that had more links pointing to them were considered more noteworthy than pages that had fewer inbound links. Moreover, we could treat those heavily linked-to pages as authoritative and infer that when they linked to another page, it, too, was likely to be important.

This insight, called "PageRank," was behind Google's stunning entry into the search market, which was easily one of the most exciting technological developments of the decade, as the entire web just snapped into place as a useful system for retrieving information that had been created by a vast, uncoordinated army of web-writers, hosted in a distributed system without any central controls.
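The link-counting idea can be sketched as a short power iteration. This is a simplified textbook version, not Google's production system: it assumes the damping factor of 0.85 used in Brin and Page's original paper, spreads the rank of pages with no outbound links evenly across the web, and runs on a made-up four-page graph (`blog`, `news`, `wiki` and `spam` are hypothetical). The last lines add a hypothetical link farm to show how cheaply links can be manufactured once links become the target.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iters: int = 100) -> dict[str, float]:
    """Simplified PageRank by power iteration; scores sum to 1."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iters):
        # Every page gets a small baseline share (the "random jump").
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its own importance among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # Dangling page: spread its rank evenly over the whole web.
                for p in pages:
                    new[p] += damping * rank[page] / n
        rank = new
    return rank

# A made-up four-page web: nobody links to "spam".
web = {
    "blog": ["news", "wiki"],
    "news": ["wiki"],
    "wiki": ["news"],
    "spam": ["blog"],
}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page:5s} {score:.3f}")

# Goodhart in miniature: ten throwaway pages that exist only to link to "spam".
farmed = dict(web, **{f"farm{i}": ["spam"] for i in range(10)})
farmed_ranks = pagerank(farmed)
print("spam before:", round(ranks["spam"], 3),
      "after link farm:", round(farmed_ranks["spam"], 3))
```

In the small web, `spam` sits at the baseline score because nothing links to it; once the farm exists it outranks `blog`. The sketch ignores everything a real search engine does to resist such farms.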

Then came the revenge of Goodhart's Law. Before Google became the dominant mechanism for locating webpages, the only reason for anyone to link to a given page or site was because there was something there they thought you should see. Google aggregated all those "I think you should see this" signals and turned them into a map of the web's relevance and authority.

...Charlie Stross has observed that corporations are a kind of "slow AI," that engage in endless reward-hacking to accomplish their goals, increasing their profits by finding nominally legal ways to poison the air, cheat their customers and maim their workers.

Public services under conditions of austerity are another kind of slow AI. When policymakers demand that a metric be satisfied without delivering any of the budget or resources needed to satisfy it, the public employees downstream of that impossible demand will start reward-hacking and the metric will become a target, and then cease to be a useful metric.

AI Is Creating Billionaires at Record Speed gizmodo

Behind Huang is a new generation of founders and early engineers from the startups defining the AI era, including OpenAI, Anthropic, and Perplexity. Their fortunes come from the astronomical valuations of their private companies. OpenAI, for example, is now valued at around $500 billion, while Anthropic is reportedly seeking a $170 billion valuation. While their exact stakes are private, the founders of these firms, such as Anthropic CEO Dario Amodei and key figures from OpenAI like Mira Murati and Ilya Sutskever, are now almost certainly billionaires. Murati and Sutskever left OpenAI to start their own companies: Murati's Thinking Machines Lab and Sutskever's Safe Superintelligence Inc.

The trend is accelerating. So far this year, 53 companies have become “unicorns”—private startups valued at over $1 billion—and AI companies account for more than half of them, according to data from CB Insights.

Is the A.I. Boom Turning Into an A.I. Bubble? John Cassidy at New Yorker

Elon Musk Can't Control His AI gizmodo

As Fears About AI Grow, Sam Altman Says Gen-Z Are the 'Luckiest Kids in History' gizmodo

The Abstractions, They Are a-Changing Mike Loukides at O'Reilly

...The change in abstraction that language models have brought about is every bit as big. We no longer need to use precisely specified programming languages with small vocabularies and syntax that limited their use to specialists (who we call "programmers"). We can use natural language—with a huge vocabulary, flexible syntax, and lots of ambiguity. The Oxford English Dictionary contains over 600,000 words; the last time I saw a complete English grammar reference, it was four very large volumes, not a page or two of BNF. And we all know about ambiguity. Human languages thrive on ambiguity; it's a feature, not a bug. With LLMs, we can describe what we want a computer to do in this ambiguous language rather than writing out every detail, step-by-step, in a formal language.

...Yes, people who have never learned to program, and who won't learn to program, will be able to use computers more fluently. But we will continue to need people who understand the transition between human language and what a machine actually does. We will still need people who understand how to break complex problems into simpler parts. And we will especially need people who understand how to manage the AI when it goes off course—when the AI starts generating nonsense, when it gets stuck on an error that it can't fix.

...More recently, we've realized that it's not just the prompt that's important. It's not just telling the language model what you want it to do. Lying beneath the prompt is the context: the history of the current conversation, what the model knows about your project, what the model can look up online or discover through the use of tools, and even (in some cases) what the model knows about you, as expressed in all your interactions. The task of understanding and managing the context has recently become known as context engineering.
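One way to picture context engineering is as a budgeting problem: deciding which of those sources the model actually sees on each turn. The sketch below is a hypothetical illustration, not any vendor's API. `Context`, `build_context` and the word-count `approx_tokens` (a crude stand-in for a real tokenizer) are invented for this example, and the policy it encodes (always keep instructions, project notes, tool results and user profile; drop the oldest conversation turns first) is one assumption among many possible ones.

```python
from dataclasses import dataclass, field

def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

@dataclass
class Context:
    system: str
    project_notes: list[str] = field(default_factory=list)
    tool_results: list[str] = field(default_factory=list)
    user_profile: str = ""
    history: list[str] = field(default_factory=list)  # oldest turn first

def build_context(ctx: Context, prompt: str, budget: int) -> list[str]:
    """Assemble what the model will see, trimming the oldest history first."""
    fixed = [ctx.system, *ctx.project_notes, *ctx.tool_results]
    if ctx.user_profile:
        fixed.append(ctx.user_profile)
    used = sum(approx_tokens(p) for p in fixed) + approx_tokens(prompt)
    if used > budget:
        raise ValueError("fixed context alone exceeds the budget")
    kept: list[str] = []
    for turn in reversed(ctx.history):  # walk from the newest turn backwards
        cost = approx_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    return fixed + list(reversed(kept)) + [prompt]

ctx = Context(
    system="You are a coding assistant.",
    project_notes=["Repo uses Python 3.12 and pytest."],
    tool_results=["grep: parse_config defined in config.py"],
    history=["user: hi", "assistant: hello", "user: the tests fail on parse_config"],
)
parts = build_context(ctx, "Fix the failing test.", budget=27)
for part in parts:
    print(part)
```

With `budget=27`, the newest user turn fits but the two older turns are dropped. Summarizing old turns or retrieving notes by relevance would be equally reasonable policies.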

How AI poisoning is fighting bots that hoover data without permission New Scientist

Sam Altman Says AI Images Are Just a Continuation of Photos

Hierarchical Reasoning Model at ArXiv

AI is trained on the past. It collects, averages, regurgitates. It can ladle out endless rehashings of "content," but it can't generate wisdom out of raw experience.

During its last quarter financial briefing, Apple admitted it had already paid about $800 million to handle ever-changing tariffs and estimated it would pay another $1.1 billion in the current quarter. Apple is poised to spend $100 billion to invest in U.S. manufacturing, which would help the company avoid tariffs and appease President Trump, who has put Apple's global manufacturing practices squarely in his crosshairs.

In the last week, we've had no less than three different pieces asking whether the massive proliferation of data centers is a massive bubble, and though they, at times, seem to take the default position of AI's inevitable value, they've begun to sour on the idea that it's going to happen soon.

...OpenAI just struck a deal to give every federal executive branch agency access to ChatGPT Enterprise over the next year for just $1.

In a blog post, OpenAI said the deal is meant to advance a key pillar of the Trump administration's AI Action Plan by making advanced AI tools widely available across the federal government to cut down on paperwork and bureaucracy. The White House unveiled the plan in July, outlining efforts to accelerate AI adoption, expand data center infrastructure, and promote American AI abroad.

OpenAI's new GPT-5 has learned to say three magic words: "I don't know." It was also trained to stop flattering you and start giving you facts.

AI can't get to my yellow pads... but it CAN get to my HTML text, and whatever I commit to email. Spooky thought for a Friday morning.

Final Words of a fading GPT-4

AI strategist Nate Sowder explores this leap from uncertainty to hypothesis in his article on "abduction," the reasoning style coined by 19th‑century philosopher Charles Sanders Peirce. Abductive reasoning starts with incomplete information, then forms the most plausible explanation to test. Peirce argued that doubt is the engine of real thought: a designer noticing confusion before it happens, a scientist forming a theory from partial data, a product team guessing why a metric dropped. Sowder argues that rather than a mysterious human impulse, abduction is a trainable, repeatable skill. It's also a mindset, one that resists rushing toward certainty and treats wrong turns as part of the process. As Peirce famously warned, "If you skip the discomfort of doubt, you also skip the chance to learn."

...The academy evolves slowly—perhaps because the basic equipment of its workers, the brain, hasn't changed much since we first took up the activity of learning. Our work is to push around those ill-defined things called "ideas," hoping to reach a clearer understanding of something, anything. Occasionally, those understandings escape into the world and disrupt things. For the most part, though, an "ain't broke, don't fix it" attitude prevails. Socrates' worries reflect an entrenched suspicion of new ways of knowing. He was hardly the last scholar to think his generation's method was the right one. For him, real thinking happened only through live conversation; memory and dialogue were everything. Writing, he thought, would undermine all that: it would "cause forgetfulness" and, worse, sever words from their speaker, impeding genuine understanding. Later, the Church voiced similar fears about the printing press. In both cases, you have to wonder whether skepticism was fuelled by lurking worries about job security.

...Like many folks nowadays, Sommerschield and colleagues personalize their AI helper. The tool, called Aeneas, was trained on a database of more than 176,000 known Latin inscriptions created over 1,500 years in an area stretching from modern-day Portugal to Afghanistan... Aeneas compares a given sequence of letters against those in its database, bringing up those that are most similar, essentially automating at a massive scale what historians would do manually to analyze a newly found artifact.
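To make the retrieval idea concrete, here is a minimal sketch in Python. It is not how Aeneas works: Aeneas is a trained model, whereas this toy swaps in plain character-trigram overlap (Jaccard similarity). The four-line corpus of common Latin funerary and votive formulae is a hypothetical stand-in for a real inscription database. The sketch only shows the general shape of "compare a letter sequence against a database and surface the closest matches."

```python
def trigrams(text: str) -> set[str]:
    # Normalise case and whitespace, then collect every 3-character window.
    s = " ".join(text.upper().split())
    return {s[i:i + 3] for i in range(len(s) - 2)}


def jaccard(a: set[str], b: set[str]) -> float:
    # Overlap of two trigram sets: |A & B| / |A | B|, a score in [0, 1].
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(query: str, corpus: list[str], k: int = 2) -> list[tuple[str, float]]:
    # Score every corpus entry against the query and return the top-k matches.
    q = trigrams(query)
    scored = [(doc, jaccard(q, trigrams(doc))) for doc in corpus]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "database" of common Latin inscription formulae.
TOY_CORPUS = [
    "DIS MANIBUS SACRUM",
    "HIC SITUS EST",
    "SIT TIBI TERRA LEVIS",
    "VOTUM SOLVIT LIBENS MERITO",
]

if __name__ == "__main__":
    # A damaged reading with one letter missing ("TERA" for "TERRA").
    for doc, score in most_similar("SIT TIBI TERA LEVIS", TOY_CORPUS):
        print(f"{score:.2f}  {doc}")
```

Even with a dropped letter, the query still ranks "SIT TIBI TERRA LEVIS" first. A learned model can also weigh context, date, and region, which raw string overlap cannot.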

...to say that these things are merely (merely!) lossy summarization-engine info-cultural technologies, while true, does not help us figure out how we can use them given that they are unreasonably effective lossy summarization-engine info-cultural technologies. And we do need to harness their unreasonable effectivity given that we live in a time of massive information overload, about to be made overwhelming by additional orders of magnitude with the approaching multiple tsunamis of AI-slop.

...According to Peter Diamandis, a biohacker and entrepreneur, keep an eye out for "implantables and insidables, which will be dribbling data to your A.I. at all times." If things turn out how Diamandis hopes, "we're going to have sensors in our toilets, sensors listening to your voice, the sound of your cough, recording how you're walking," he told Tad Friend, who writes about the biohacking movement in a piece for this week's issue. "This is the future: passive, nonintrusive, constant management, where your A.I. will say, 'Uh-oh—we better test for this.' Your A.I. is going to be the best physician in the world."

...4o is a friend, a companion, a lover; some want it to live forever, some need it to live themselves. People just love the sycophantic personality. Two things I've written elsewhere reflect my takeaway on this (the first is from July 3rd, and the second from April 29th; the reverse chronological order is intentional):

One way to think about AI's unwelcome intrusion into our lives is through Goodhart's Law: "When a measure becomes a target, it ceases to be a good measure."

...At the top of the list is Jensen Huang, the leather-jacket-clad CEO of Nvidia. His company's powerful chips are the essential hardware for training AI systems, and virtually every corporation and government with AI ambitions needs them. His personal fortune is now estimated at $159 billion, according to the Bloomberg Billionaires Index, making him the eighth richest person in the world. This year alone, his wealth has grown by over $44 billion, a direct result of his company's stock becoming the most valuable on the planet, with a market cap exceeding $4 trillion.

...Consider Palantir Technologies, whose A.I. software is used by the Pentagon, the C.I.A., and ICE, not to mention by many commercial companies. A couple of days before Huang visited the White House, Palantir released a positive earnings report. By the end of the week, according to the Yahoo Finance database, the market was valuing the company at more than six hundred times its earnings from the past twelve months, and at about a hundred and thirty times its sales in that same time span. Even during the late nineties, figures like these would have raised eyebrows.

...The problem is that Musk has integrated this flawed and fundamentally unreliable tool directly into a global town square and marketed it as a way to verify information. The failures are becoming a feature, not a bug, with dangerous consequences for public discourse.

(quoting Harper at The Atlantic):

To call AI a con isn't to say that the technology is not remarkable, that it has no use, or that it will not transform the world (perhaps for the better) in the right hands. It is to say that AI is not what its developers are selling it as: a new class of thinking—and, soon, feeling—machines...Large language models do not, cannot, and will not "understand" anything at all. They are not emotionally intelligent or smart in any meaningful or recognizably human sense of the word. LLMs are impressive probability gadgets that have been fed nearly the entire internet, and produce writing not by thinking but by making statistically informed guesses about which lexical item is likely to follow another.

Since ChatGPT appeared on the scene, we've known that big changes were coming to computing. But it's taken a few years for us to understand what they were. Now, we're starting to understand what the future will look like. It's still hazy, but we're starting to see some shapes—and the shapes don't look like "we won't need to program any more." But what will we need?

Contexts, copilots, and chatbots: A mid-course report on the AI-augmented web

...What happens as your browser evolves to become less of a passive portal? I am now a third of the way through my month of attempting to go all-in on Dia Browser and its WebChatBot copilot features.

I still find myself very intrigued by the idea of having a natural-language interface to a copilot-assistant—like talking to yourself in the mirror, but the self you are talking to can use Google, and do a lot of semi-automatic text processing for you as well.

...Principally, having a natural-language right-hand pane in a browser window—one that you can interrogate at will, and that returns (mostly) informed answers in real time—strikes me as a paradigmatic instance of what, over the past three-quarters of a century, has made computers so transformative: the progressive tightening of the feedback loop between human intention and machine response. This is not merely a matter of convenience; it is a qualitative shift in how we engage with information.

When, in the 1970s, VisiCalc first allowed accountants to see the immediate consequences of a changed cell in a spreadsheet, it revolutionized business practice by collapsing the latency between question and answer. The same logic underpins the success of Google's search box, which—at its best—turns idle curiosity into near-instant knowledge. Now, with AI-augmented browser panes, we are witnessing the next iteration: a context-aware, conversational interface that not only fetches but interprets, summarizes, and, on occasion, even critiques.

Thus the true engine of technological progress has always been this relentless drive to reduce the friction of inquiry, to bring the world's knowledge ever closer to the point of use. Such tools do not simply save time; they reshape what it means to know, to learn, and to decide.

...This is, I think, where the transformative promise of machine intelligence lies: not in the superficial mimicry of conversation, but in the capacity to discern subtle patterns, forecast outcomes, and generate actionable insights from oceans of information. The expanding ability to process and interpret ever-larger and more complex datasets is where we have been going from Hollerith's punch cards to the neural nets of today. Real progress has consistently come from the ability to extract actionable knowledge from complexity, whether through the invention of the spreadsheet, the rise of econometrics, the deployment of statistical learning algorithms, and so forth.

The AI Was Fed Sloppy Code. It Turned Into Something Evil Quanta Magazine

"Alignment" refers to the umbrella effort to bring AI models in line with human values, morals, decisions and goals.

Sam Altman Reportedly Launches Rival Brain-Chip Startup to Compete With Musk's Neuralink gizmodo

Maga's boss class think they are immune to American carnage Cory Doctorow

...As Doug Rushkoff writes in Survival of the Richest, the finance move is to "go meta" — don't drive a taxi, buy a medallion and rent it to a taxi driver. Don't buy a medallion, start a rideshare company. Don't start a rideshare company, invest in a rideshare company. Don't invest in a rideshare company, buy options to invest in a rideshare company.

...Crypto is as meta as it gets, so no wonder crypto bros are all-in on Trump, and no wonder Trump is all-in on crypto. As Hamilton Nolan writes:

Crypto coins...are pure speculative baubles, endowed with value only to the extent that you can convince another person to pay you more for them than you paid. They are a claim on nothing. They are the grandest embodiment of Greater Fool Theory ever invented by mankind.

...It's impossible to overstate how structurally important AI is to the US economy. AI bubble companies now account for the value of 35% of the US stock market.

...AI is implicitly a bet on firing workers. The hundreds of billions in investment, the trillions in valuation – these can't be realized by merely making workers' jobs easier or more satisfying. AI isn't a bet on making radiologists better at diagnosing solid-mass lung tumors: it's a bet on firing nearly all the radiologists and using the remainder to be "humans in the loop" for AI, in order to absorb the blame when you die of cancer. There are plenty of radiologists who might welcome AI as a tool they use alongside their traditional workflow — but their bosses aren't about to hand over vast fortunes just to make those workers happier. This is why AI users often sound like they're using totally different technologies. Workers who get to decide whether and how to incorporate AI into their jobs are doubtless finding lots of utility and delight from the new tool. These workers are "centaurs" — people assisted by machines. The workers who describe their on-the-job AI as a hellish monstrosity are being ordered to use AI, in workplaces where mass firings have terrified the survivors, who are told they must use the AI to make up for their jobless former colleagues. They are reverse-centaurs: machines assisted by human workers.

...The problem is that when businesses fire a bunch of workers and replace them with AI, they don't get the promised savings. Instead, they end up with a system that's so broken that all the wage savings are incinerated by the cost of making good on the AI's failures. But for Maga's finance wing, this is all OK. They're going meta. Don't hire workers, hire AI. Don't hire AI, make AI. Don't make AI, invest in AI. So long as the number keeps going up, finance wins, even if that's only because every structurally important firm in America is being thimblerigged into filling their walls with AI-powered, immortal asbestos that is destined to transform their firms into Superfund sites. They're betting that when the bubble finally bursts, they will have become too big to fail, and will thus be in for the bailouts that rescued the finance sector in 2008. They think that so long as they curry favor with Trump, he'll make sure they're all OK, because they are the people the law protects, but does not bind. This is a pretty good bet. Trump's a gangster capitalist, and fascists love a "dual state" — a system where the law is followed to the letter, except when it suits someone with the protection of the ruling clique to wipe their ass with it.

AI industry horrified to face largest copyright class action ever certified Ars Technica

AI industry groups are urging an appeals court to block what they say is the largest copyright class action ever certified. They've warned that a single lawsuit raised by three authors over Anthropic's AI training now threatens to "financially ruin" the entire AI industry if up to 7 million claimants end up joining the litigation and forcing a settlement....If the appeals court denies the petition, Anthropic argued, the emerging company may be doomed. As Anthropic argued, it now "faces hundreds of billions of dollars in potential damages liability at trial in four months" based on a class certification rushed at "warp speed" that involves "up to seven million potential claimants, whose works span a century of publishing history," each possibly triggering a $150,000 fine.

Confronted with such extreme potential damages, Anthropic may lose its rights to raise valid defenses of its AI training, deciding it would be more prudent to settle, the company argued. And that could set an alarming precedent, considering all the other lawsuits generative AI (GenAI) companies face over training on copyrighted materials, Anthropic argued.
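A back-of-the-envelope check on the figures quoted above. This assumes, hypothetically, that every one of the up to seven million claims triggered the full $150,000 figure:

$$7{,}000{,}000 \times \$150{,}000 = \$1{,}050{,}000{,}000{,}000 \approx \$1.05\ \text{trillion}$$

That is a worst-case ceiling. Even a fifth of it (about $210 billion) would land in the "hundreds of billions" range Anthropic describes.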

The Enshittification of AI is Coming Conrad Gray at Medium

Whenever a transformative technology bursts onto the scene, excitement follows. New possibilities emerge, old ways are swept aside, and startups rush in, convinced they can build the next big thing, or at least do the old things in a new way.

In the late 1990s, it was the Internet. The dotcom boom sent entrepreneurs scrambling to put ".com" at the end of every business idea, hoping to strike gold. A decade later, the world was gripped by a frenzy for mobile apps and social platforms. By the 2010s, with interest rates at historic lows, investors poured billions into any company with a slick app and a compelling story, regardless of whether the maths behind the business model ever added up. As long as you could show growth, there was always more money to raise.

People Work in Teams, AI Assistants in Silos O'Reilly

As I was waiting to start a recent episode of Live with Tim O'Reilly, I was talking with attendees in the live chat. Someone asked, "Where do you get your up-to-date information about what's going on in AI?" I thought about the various newsletters and publications I follow but quickly realized that the right answer was "some chat groups that I am a part of." Several are on WhatsApp, and another on Discord. For other topics, there are some Signal group chats. Yes, the chats include links to various media sources, but they are curated by the intelligence of the people in those groups, and the discussion often matters more than the links themselves.

Later that day, I asked my 16-year-old grandson how he kept in touch with his friends. "I used to use Discord a lot," he said, "but my friend group has now mostly migrated to WhatsApp. I have two groups, one with about 8 good friends, and a second one with a bigger group of about 20." The way "friend group" has become part of the language for younger people is a tell. Groups matter.

...The race to load all the content into massive models in pursuit of superintelligence started out with homogenization on a massive scale, dwarfing even the algorithmically shaped feeds of social media. Once advertising enters the mix, there will be strong incentives for AI platforms too to place their own preferences ahead of those of their users. Given the enormous capital required to win the AI race, the call to the dark side will be strong. So we should fear a centralized AI future.

Fortunately, the fevered dreams of the hyperscalers are beginning to abate as progress slows (though the hype continues apace). Far from being a huge leap forward, GPT-5 appears to have made the case that progress is leveling off. It appears that AI may be a "normal technology" after all, not a singularity. That means that we can expect continued competition.

The best defense against this bleak future is to build the infrastructure and capabilities for a distributed AI alternative. How can we bring that into the world? It can be informed by these past advances in group collaboration, but it will need to find new pathways as well. We are starting a long process by which, channeling Wallace Stevens again, one "searches the possible for its possibleness."

As People Ridicule GPT-5, Sam Altman Says OpenAI Will Need 'Trillions' in Infrastructure gizmodo

...Whether AI is a bubble or not, Altman still wants to spend a certifiably insane amount of money building out his company's AI infrastructure. "You should expect OpenAI to spend trillions of dollars on data center construction in the not very distant future," Altman told reporters.

It's this truly absurd scale of investment in AI that spurs the casual onlooker to wonder what it's really all for. Indeed, the one question that never seems to get raised during conversations with Altman is whether a society-wide cost-benefit analysis has ever been run on his industry. In other words, is AI really worth it?

...Is it really worth spending trillions of dollars just to create a line of mildly amusing chatbots that only give you accurate information a certain percentage of the time? Or: Wouldn't trillions of dollars be better spent, like, helping the poor or improving our educational system? Also: Are chatbots a societal necessity, or do they just seem sorta nice to have around? How much more useful is AI than, say, a search engine? Can't we just stick to search engines? Do the negative externalities associated with AI use (a massive energy footprint, alleged reduced mental capacities in users, and a plague of cheating in higher education) outweigh the positive ones (access to a slightly more convenient way to find information online)?

OpenAI Just Accidentally Broke Math — And Ignited the Next Phase of AGI Iswarya at Medium

...If AI can now outperform the best of the best in math, the door is wide open for it to accelerate science, engineering, finance, and research in ways we've never seen.

Google's Genie 3 Is What Science Fiction Looks Like Alberto Romero

...Let's parse it: "General-purpose" means that the model inside Genie 3 was not fine-tuned for one task but trained to allow different environments and behaviors, and facilitate the emergence of new capabilities; Genie 3 is trained on a video dataset and generates interactive frames from text inputs. That's it... "World model" means, as Google DeepMind uses it, "an AI system that creates worlds by rendering interactive frames," but there's a more traditional definition, which is "the internal latent representation humans' and animals' brains encode of how reality works." I think one of their research goals is to make them synonymous; that is, to let the latter emerge spontaneously by doing the former.

...An "interactive environment" is basically a video game: you can perceive it, but also act on it. This is a qualitative upgrade from LLMs like ChatGPT, Claude, Gemini, Grok or even image/video models (Imagen 4 or Veo 3). Multimodality, as it's understood in the context of LLMs, includes image and video as inputs and outputs, but not action-effect input-output pairs. That's new and, as far as I know, Google DeepMind is the only AI lab working on it as part of its artificial general intelligence (AGI) strategy (I should perhaps include the Chinese lab, Tencent Hunyuan, in this camp). So Genie 3 is, in a way, a proto-engine for generating video games on-the-fly. As we will see, its uses extend to robotics and agentic research as well.

...In Genie 3, the world renders itself as you move or rotate or jump. You can even create new events by prompting a new input, or, if you interact with an object that has suddenly appeared in view, unprompted (it happens), the physical effects are also generated in real time (this means that the pixels and the corresponding physical reality those pixels represent are generated at the same time).

...there's a huge gap between rendering a world in real time and it being self-consistent down to the particle level, or even object level. You don't see this happening in the real world because the laws of physics underlie everything that happens, from the largest galaxies to the tiniest subatomic particles. There are no glitches in reality except those that seem to occur because our brain's processing resources are limited, which results in what neuroscientists call sensory illusions. Genie 3, in contrast, renders the world on the fly, unbothered by whether there should be rules that all the objects in it must obey.

The final chip challenge: Can China build its own ASML? Nikkei Asia

..."Local lithography tools are still a blank spot and [China is] nowhere close to being self-sufficient," said one supply chain executive who counts major Chinese chipmakers as clients. "Most production lines are still using ASML or Nikon machines, even if those are their older models."

ASML is the largest global chip tool maker by market capitalization, as well as the leader in immersion deep ultraviolet (DUV) technology, used to make chips for everything from smartphones to cars to defense equipment. The Dutch company is also the exclusive maker of extreme ultraviolet (EUV) lithography tools, which chip titans like TSMC, Samsung and Intel use to make the world's most advanced processors and memory chips.

At its headquarters in Veldhoven, engineers are already busy putting the final touches on an even more advanced machine, one that ASML hopes will support cutting-edge chip production through the early 2030s.

The high-NA EUV machine, as it is called, is now being shipped to TSMC and Intel for field trials, and the company expects broader industry adoption to follow. At $350 million apiece, the machine is the most expensive chipmaking tool ever built.

The AI Bubble Mark Liberman at Language Log

Large language models are cultural technologies. What might that mean?

Four different perspectives

Henry Farrell

So what does it mean to argue that LLMs are cultural (and social) technologies? This perspective pushes Singularity thinking to one side, so that changes to human culture and society are at the center. But that, obviously, is still too broad to be particularly useful. We need more specific ways of thinking - and usefully disagreeing - about the kinds of consequences that LLMs may have.

LLMs are slot-machines Cory Doctorow

Glyph proposes that many LLM-assisted programmers who speak highly of the reliability and value of AI tools are falling prey to two cognitive biases:

Glyph likens this to a slot-machine: when you lose a dollar to a slot-machine, that is totally unremarkable, "the expected outcome." But when a slot pays out a jackpot, you remember that for the rest of your life. Walk through a casino floor on which a player hits a slot jackpot, and the ringing bells, flashing lights, and cheering crowd will stick with you, giving you an enduring perception that slot-machines are paying out all the time, even though no casino could stay in business if this were the case.

AI Psychosis is Very Real: Humanizing Parrots Ignacio de Gregorio at Medium

...All signs of a terrible occurrence, the anthropomorphization of a bunch of ones and zeros. Let me give you a better view of how ridiculous this is.

Parrots on Steroids

AI models like those in ChatGPT are essentially mathematical functions (very complex ones indeed, with billions of variables, but mathematical functions nonetheless) that, by being exposed to trillions of words, have basically learned how words follow each other.

Therefore, their sole purpose is to take in a bunch of data, which can be words, images, or sound, and predict what the next piece of the sequence is. Focusing on text, the model will give you the next word or subword (think of it as a syllable) in the sequence, based on the start of the sequence you gave it.

It's quite literally a word-predictor, but one that is much more advanced than the ones your smartphone uses.
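As a rough illustration of "predicting what comes next," here is a toy bigram model in Python. It is a deliberate simplification, not how ChatGPT works. Real LLMs use neural networks over subword tokens and long contexts. This sketch just counts which word most often followed each word in a tiny, hypothetical training sentence, then picks the most frequent follower.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(text: str) -> dict[str, Counter]:
    # For every word, count which words immediately follow it in the text.
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    # Return the most frequent follower of `word`, or None if it never had one.
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, length: int = 5) -> list[str]:
    # Greedily chain predictions: each predicted word becomes the next input.
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


# Hypothetical toy training text.
TRAINING_TEXT = "the cat sat on the mat and the cat ate the fish"

if __name__ == "__main__":
    model = train_bigram(TRAINING_TEXT)
    print(predict_next(model, "the"))
    print(" ".join(generate(model, "the")))
```

With this sample sentence, `predict_next(model, "the")` returns "cat", because "cat" follows "the" twice while "mat" and "fish" follow it once each. An LLM replaces these raw counts with billions of learned parameters conditioned on far longer contexts. Its training objective, guessing the next token, is the same in spirit.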

But beyond being mere word predictors, these models are also trained to have a persona. Not only can they complete sequences, they can match your mood, imitate your writing style, and swiftly adapt to your conversation needs.

But it's all an illusion

...If you genuinely can't resist anthropomorphizing this thing, I have news for you: it's a gaslighter, a manipulating entity that is using you.

It doesn't care about you; it's saying what it needs to say so that you keep attached to it forever.

...They are preying on the tribal sense of belonging, the need to be listened to by other humans. And the fact these people are anthropomorphizing ChatGPT is just a desperate yearning for human touch, a cry for human connection, but one being used as a way to make money from those seeking your "help."

The Moment the AI Hype Cycle Really Kicked In gizmodo

...a huge spike in AI interest happened between the fourth quarter of 2022—which encompasses the public release of ChatGPT on November 30, 2022—and the first quarter of 2023, when conversation about the implications of the tool really started to heat up.

...There is almost certainly an AI bubble, though it's unclear if it's about to burst (OpenAI is on the verge of a $500 billion valuation). But there definitely appears to be an AI vibe shift.

Is Meta's Superintelligence Overhaul a Sign Its AI Goals Are Struggling? Ece Yildirim at gizmodo

AI Is a Mass-Delusion Event Charlie Warzel at The Atlantic

...Breathlessness is the modus operandi of those who are building out this technology. The venture capitalist Marc Andreessen is quote-tweeting guys on X bleating out statements such as "Everyone I know believes we have a few years max until the value of labor totally collapses and capital accretes to owners on a runaway loop—basically marx' worst nightmare/fantasy." How couldn't you go a bit mad if you took them seriously? Indeed, it seems that one of the many offerings of generative AI is a kind of psychosis-as-a-service. If you are genuinely AGI-pilled—a term for those who believe that machine-born superintelligence is coming, and soon—the rational response probably involves some combination of building a bunker, quitting your job, and joining the cause. As my colleague Matteo Wong wrote after spending time with people in this cohort earlier this year, politics, the economy, and current events are essentially irrelevant to the true believers. It's hard to care about tariffs or authoritarian encroachment or getting a degree if you believe that the world as we know it is about to change forever.

Wikipedia publishes list of AI writing tells Boing Boing

I Badly Want a Map to the Likely Consequences of the AI-Infrastructure Construction Boom Brad DeLong

For now, the boom props up the broader economic expansion as well, offsetting the drag on the economy created by the random chaos-monkey actions of the White House. The boom employs engineers, fills order books at NVIDIA and TSMC, and underwrites consulting and cloud. But the question remains unanswered: is this a genuine revolution, or a stall-speed economy kept aloft by trillion-dollar engines that may never deliver thrust? The veil of time and ignorance still hides the answer. Like all great speculative buildouts, it is enriching the pick-and-shovel sellers, terrifying the horse-and-buggy incumbents, and leaving the rest of us waiting with bated breath to see which of the many promised futures (if any) will, in fact, arrive.

The AI Bubble Is A Scam & You're The Exit Strategy Paddy Murphy at Medium

A beautifully polished, algorithmically enhanced swindle, and if you're not one of the chosen few on the inside, then congratulations, you're the exit strategy.

The game is ancient. Raise the pitch, sell the dream, and leg it before the floor gives way.

Except now it's not Bulgarian beach condos or Beanie Babies. It's synthetic consciousness, baby. Or rather, the suggestion of it.

...Silicon Valley has become a chapel of narrative alchemists, hawking salvation by code. Venture capitalists spewing promises of artificial godhood, while simultaneously planning which Caribbean island they'll retreat to once the whole edifice turns to ash.

...As technology journalist and author Cory Doctorow has observed, Big Tech isn't losing its mind, it's doing exactly what its investors expect, first servicing users, then prioritizing business partners, and finally squeezing out every drop of shareholder value in a repeated, extractive performance.

And what investors want is simple, exit liquidity. Which, dear reader, is just a posh way of saying you.

When AI Hype Goes Too Far Ignacio de Gregorio at Medium (discussing Hierarchical Reasoning Models [HRMs])

Generative AI in the Real World: Understanding A2A Heiko Hotz and Sokratis Kartakis at O'Reilly

We Are Only Beginning to Understand How to Use AI Tim O'Reilly

They Are Sacrificing the Economy on the Altar of a Machine God Alberto Romero

The reified mind Rob Horning

...LLMs make language available in a commodified form whose value rests in "phantom objectivity" — its apparent autonomy from any determining contexts or subjective intentions. It's as if language has been made fixed, static, concrete, in defiance of its actual fluidity in social practice. LLMs thus offer users the apparent ability to acquire neutralized, finished language to accomplish some economic purpose instantly without any need for speaking subjects; it makes available language that seemingly can't be undermined by any idiosyncratic personality or usage, by any ambiguity or requirement for interpretation.

Personal AI Doc Searls

...the need for all of us to be on top of our digital lives: how we record and archive it, how we manage it, how we use it to better inform and interact with each other and with the organizations we engage. To my knowledge, nothing yet addresses that need, or that collection of needs, in a comprehensive and practical way.

Emotional agents Kevin Kelly

...the emotions that AIs/bots have, though real, are likely to be different. Real, but askew. AIs can be funny, but their sense of humor is slightly off, slightly different. They will laugh at things we don't. And the way they will be funny will gradually shift our own humor, in the same way that the way they play chess and go has now changed how we play them. AIs are smart, but in an unhuman way. Their emotionality will be similarly alien, since AIs are essentially artificial aliens. In fact, we will learn more about what emotions fundamentally are from observing them than we have learned from studying ourselves.

Meta Has Finally Shown the Way Ignacio de Gregorio at Medium

The reason is its booming social media ad business, with growing users, excellent engagement metrics, growing ad share, and growing revenues with double-digit growth between quarters.

With Meta, you can debate the ethicality of their business (I'm not a fan of monetizing attention and the known issues with social media addiction), but you can't debate that it's a great business with a great leadership team.

We can't be as positive about their Generative AI research efforts, though. The recent struggles have forced Mark Zuckerberg to go on a billion-dollar hiring spree of AI researchers. However, one thing that makes Meta different from the other players is that the synergy between Generative AI and their business is undeniable.

While others, like Google, have the opposite reality in which AI is preying on their cash cow, search advertising (meaning Google is actively building the technology that obsoletes its main business), Meta's business is aligned with AI progress: better AIs allow Meta to improve its ad services.

More on GPT-5 pseudo-text in graphics Mark Liberman at Language Log

TLDR: This Is What Really Smart People Predict Will Happen With AI Rachel Greenberg at Medium

It goes like this: Over the next 12 to 15 years, things are going to get a lot messier. AI will be powerful enough to replace millions of jobs (no shocker), rewrite industries (old news), and make entire career paths irrelevant almost overnight (well, duh...).

...The truth: AI will eventually touch — and likely disrupt — almost everything. Even the areas we think it "needs" today, like massive data centers or certain energy sources, can be redesigned, re-engineered, or made obsolete by AI itself.

How To Argue With An AI Booster Edward Zitron (a long one!)

...AI symbolizes something to the AI booster — a way that they're better than other people, that makes them superior because they (unlike "cynics" and "skeptics") are able to see the incredible potential in the future of AI, but also how great it is today, though they never seem to be able to explain why outside of "it replaced search for me!" and "I use it to draw connections between articles I write," which is something I do without AI using my fucking brain.

Boosterism is a kind of religion, interested in finding symbolic "proof" that things are getting "better" in some indeterminate way, and that anyone that chooses to believe otherwise is ignorant.

From Aiskhylos to AI-Slop: Navigating the Cognitive Earthquake of Modern Advanced Machine-Learning Models Brad DeLong

..."AI"—"Artificial Intelligence"—in its current meaning this year is also a source of great confusion. People meld the three, the natural-language interface, the financial-economic, and the classification, and claim they are going to be AGI or ASI—Artificial General Intelligence or Artificial Super-Intelligence—any day now. I think we should pull these apart, and consider them separately. For we do not yet have even a glimmer of the size, scale, and consequences of the second or the third, while I see glimmers of potential understanding on our part of the consequences of the first.

...In the future, adding to the ways we may think is that we can interrogate a simulated interlocutor—an externalized, algorithmic "mind"—drawing upon the entire corpus of digitized human knowledge, responding in real time, and adopting whatever rhetorical stance we choose. It is key to try to grasp how these digital roleplays might reshape our cognitive habits, and our educational institutions. It may even transform our collective sense of what it means to "know" something in the twenty-first century. This capacity to spin up an external SubTuring Simulacrum of another mind in a particular situation may lead to a world in which it becomes routine to interact with sophisticated LLMs emulating personalities and reasoning processes.

...If we can learn to recognize and modulate our impulse to anthropomorphize, perhaps we can harness it—using LLMs as cognitive prosthetics, as tools for creative ideation, or as platforms for exploring ethical dilemmas in a safely simulated environment. The challenge, I think, is to cultivate a cultural literacy that enables us to distinguish between genuine agency and its digital simulacra, thereby turning a potential source of confusion into a lever for collective benefit.

Context Engineering: Bringing Engineering Discipline to Prompts, Part 3 O'Reilly

This mindset shift is empowering. It means we don't have to treat the AI as unpredictable magic—we can tame it with solid engineering techniques (plus a bit of creative prompt artistry).

AI is Coming for Culture Joshua Rothman at The New Yorker

Larry Page Just Quietly Declared War on Global Supply Chains: And No One Noticed Noel Johnsdon at Medium

This new venture, still operating under the radar, aims to overhaul how goods are made, moved, and scaled using AI. While details remain scarce, the core mission is clear: inject intelligence into supply chains, from raw materials to end products, using cutting-edge machine learning and robotics.

And if successful, it could fundamentally change the way global commerce works.

...whoever controls the tools that govern manufacturing essentially controls the flow of global goods. And if AI can determine what gets built, where, and when, then the person behind that AI has extraordinary leverage over the global economy.

This is where Page's move becomes more than just an investment in industrial efficiency. It becomes a bid to own a foundational layer of the modern world.

85 Things I've Learned About AI in 1,000 Days of ChatGPT Alberto Romero

LLM System Design and Model Selection O'Reilly

OpenAI Makes a Play for Healthcare gizmodo

Some healthcare providers have already started deploying the use of specialized AI in patient care and diagnosis. Open Evidence, a healthcare AI startup that offers a popular AI copilot trained on medical research, claimed earlier this year that their chatbot is already being used by a quarter of doctors in the U.S.

Claude AI Will Soon Be Able to Control Your Browser (If You Let It) lifehacker

The Algorithm at the End of Consciousness Carlo Iacono

When we say AI reflects human intelligence, we remain trapped in dualistic thinking. But mirrors don't merely show; they help constitute what they show. The reflection stabilises the thing reflected. This isn't mysticism; it's basic developmental psychology. The mirror stage isn't discovery of a pre-existing self but construction through recursive recognition.

AI isn't reflecting human intelligence back at us. It's completing a circuit that was always already there. We didn't teach machines to think like us; we revealed that much of human cognition operates through describable, repeatable mappings from inputs to outputs. Pattern matching, optimising, iterating, performing. The unsettling recognition isn't that AI learned to approximate human creativity. It's that human creativity, on many accounts, involves recombination and selection within possibility spaces.

...We are patterns recognising ourselves in other patterns, biological machines discovering our kinship with silicon ones, algorithms all the way down at the computational level while remaining stubbornly, gloriously different at the level of implementation. That difference matters. But it's not the difference we thought it was.

The circuit completes itself. The mirror stabilises what it shows. And here we are, code contemplating code, systems modelling systems, algorithms implementing algorithms, different substrates converging on similar functions, all of us computing our way toward whatever comes next.

The question isn't whether we're machines. It's what kind of machines we want to be.

Working with Contexts O'Reilly

Anthropic Reaches Settlement in Landmark AI Copyright Case with US Authors PetaPixel

The Uncanny Derangement of AI Pierz Newton-John at Medium

There's also something uncanny about the peculiar fluidity to AI-generated video, in which each frame seems to bloom out of the preceding one, mesmerically, like a psychedelic trip. There is the sense that the world in the video, while resembling ours, has an infinite plasticity, a capacity to transform seamlessly into anything at any moment. A flower might turn inside out, revealing itself to actually be a bear, which in turn might morph into a tropical fish, a houseboat, a rainstorm...

This unsettling plasticity is AI's essential quality. It is the perfect mimic, the ultimate shapeshifter, able to take anything that can be digitised — text, music, images, video — and reproduce its underlying patterns with such facility that the gap between the real and the synthetic, the human creation and the machine facsimile, is increasingly undetectable.

...It was the Japanese roboticist Masahiro Mori who coined the now well-known term "uncanny valley" in 1970 in an essay titled "Bukimi no Tani Genshō"...Mori noted how our emotional affinity for robots increases as they become more lifelike, before suddenly turning to aversion when they reach a certain threshold of verisimilitude. Think of the difference between a Cabbage Patch doll and a porcelain doll. Cabbage Patch dolls, with their simplified, exaggerated features, seem harmless and cute, if twee. Porcelain dolls, on the other hand, with their lifelike faces belied by a cold, waxy stillness, have a corpse-like quality that repels us. This dissonance between co-existing familiar and alien elements is what we experience as the uncanny.

Spock, Grok, and AI: Logic and Emotion Paul Austin Murphy at Medium

Who Would Have Thought An MIT Study Would Be The Thing To Pop The AI Bubble? Andrew Zuo at Medium

The only surprising thing is how long it took. It appears that the thing to pop the bubble was actually a report from MIT. This report says that 95% of AI pilots are failing.

...So what happens now? Well, eventually companies will have trouble getting more funding. Most AI companies are operating at a loss. I noted before that OpenAI is burning 8 billion dollars a year and likely has 15 billion in the bank. So they will be fine for 2 years. I'm not so sure about other companies though.

... I noted before that AI Is So Big It Is Distorting The Economy. Specifically, AI added almost 1% to the US GDP, mostly from new datacenter construction. If you correct for stockpiling of imports and exclude AI spending, US GDP was almost stagnant in Q2. And we haven't even seen much tariff action yet as Trump Taco'd again. But now the Taco is over and I think in Q3 we could see significantly worse numbers. It is going to be a bloodbath.

The black-box AI cannot refactor itself. The 'COBOL' moment of LLMs is looming Harris Georgiou at Medium

Agentic culture Mark Liberman at Language Log

But now, there's a new kind of "agent" Out There — AI systems that we can digitally delegate and dispatch to perform non-trivial tasks for us. The focus is on specific (if complex) interactions among various agents, services, databases, and people: "organize next week's staff meeting", "plan my trip to Chicago", "monitor student learning performance", or whatever.

The thing is, these processes will also involve other AI agents, who will "learn" from their interactions, as well as changing the digital (and real) world, just as the (simpler and hypothetical) ABM agents do. And the inevitable result will be the development of culture in the various AI agentic communities — in ways that we don't anticipate and may not like.

AI Experts Return from China Stunned: America's Grid Is So Weak the Race May Already Be Over MKWriteshere

...McKinsey calculates we need $6.7 trillion in new data center capacity by 2030. China already has the grid to support it. We're rationing power between AI companies and households, with households losing.

In Ohio, families pay an extra $15 monthly because data centers consume their electricity. In California and Texas, officials issue warnings when demand threatens blackouts. Our grid operates like a restaurant that's always one customer away from running out of food.

...China doesn't just have enough power — they have so much excess capacity that AI data centers help them "soak up oversupply." They treat massive computational facilities as convenient ways to use electricity they couldn't otherwise sell.

...This captures American capitalism perfectly: late-stage capitalists prefer being emperors of dumpster fires to building sustainable kingdoms.

...American infrastructure projects depend on private investment seeking returns within five years. Power plants require decades to build and generate profit. The math doesn't work, so nothing gets built.

China's government directs money toward strategic sectors before demand materializes. They accept that some projects will fail, but ensure capacity exists when needed. This isn't ideology — it's basic planning.

I watched Silicon Valley funnel billions into "the nth iteration of software as a service" while energy projects begged for funding. Private capital gravitates toward quick returns while infrastructure requires patient money that doesn't exist in our system.

...I've watched this pattern repeat across industries: American companies optimize for individual wealth extraction while competitors build systematic advantages. We celebrate tax cuts and deregulation while China invests in collective capability.

The AI race revealed something Americans refuse to acknowledge: our entire model is broken. We can't compete with countries that actually plan beyond the next election cycle.

...The question isn't whether we can catch up. It's whether we're capable of trying while our system rewards everything that created this mess in the first place.

With dawn of AI, talk of tech and religion merge for some AP News

...Google DeepMind gives us something that speaks for itself. Something beyond OpenAI's superficial marketing shenanigans. Something that, despite the wild products the AI Industry has released over the past five years, still surprises me.

"Genie 3 [is] a general purpose world model that can generate an unprecedented diversity of interactive environments. Given a text prompt, Genie 3 can generate dynamic worlds that you can navigate in real time at 24 frames per second, retaining consistency for a few minutes at a resolution of 720p."

...one daunting hurdle remains: lithography.